Everyone seems to be writing about zombies lately: Edward Feser (Zombies: A Shopper’s Guide, December 19, 2013), David Gelernter (The Closing of the Scientific Mind, Commentary, 1/1/2014), Barry Arrington (see here and here), Elizabeth Liddle (see here and here) and Denyse O’Leary. In today’s post, I’m going to throw my hat into the ring.

A few preliminary definitions

What is a zombie, anyway?

First of all, what is a zombie? I’m not talking about the animated corpse that some Haitians believe in, or the creature from the movies. What I’m talking about is what’s known as a philosophical zombie. Professor Edward Feser concisely defines the term as follows:

A “zombie,” in the philosophical sense of the term, is a creature physically and behaviorally identical to a human being but devoid of any sort of mental life.

He goes on to amend his definition by adding that “the notion of a zombie could also cover creatures physically and behaviorally identical to some non-human type of animal but devoid of whatever mental properties that non-human animal has.” However, most philosophers, when they write about zombies, have in mind the human kind.

In his insightful article, The Closing of the Scientific Mind, David Gelernter, Professor of Computer Science at Yale University, describes a thought experiment which illustrates why the idea of a zombie is a philosophically interesting one:

Imagine your best friend. You’ve known him for years, have had a million discussions, arguments, and deep conversations with him; you know his opinions, preferences, habits, and characteristic moods. Is it possible to suppose (just suppose) that he is in fact a zombie?

By zombie, philosophers mean a creature who looks and behaves just like a human being, but happens to be unconscious. He does everything an ordinary person does: walks and talks, eats and sleeps, argues, shouts, drives his car, lies on the beach. But there’s no one home: He (meaning it) is actually a robot with a computer for a brain. On the outside he looks like any human being: This robot’s behavior and appearance are wonderfully sophisticated.

No evidence makes you doubt that your best friend is human, but suppose you did ask him: Are you human? Are you conscious? The robot could be programmed to answer no. But it’s designed to seem human, so more likely its software makes an answer such as, “Of course I’m human, of course I’m conscious!—talk about stupid questions. Are you conscious? Are you human, and not half-monkey? Jerk.”

So that’s a robot zombie. Now imagine a “human” zombie, an organic zombie, a freak of nature: It behaves just like you, just like the robot zombie; it’s made of flesh and blood, but it’s unconscious. Can you imagine such a creature? Its brain would in fact be just like a computer: a complex control system that makes this creature speak and act exactly like a man. But it feels nothing and is conscious of nothing.

Many philosophers (on both sides of the argument about software minds) can indeed imagine such a creature.

Three kinds of zombies: qualia zombies, intentionality zombies and cognitive zombies

Zombies come in at least three different kinds, as Professor Ed Feser explains in his article, Zombies: A Shopper’s Guide.

A qualia zombie would be unable to experience the subjective feeling of seeing the color red, even though it could still physically react to red objects in the same way as we do, and even talk about them as we do.

The first kind of zombie, the qualia zombie, has no subjective experiences of any kind, despite its acting just as if it had them, and despite its insisting that its feelings are real:

The kind of zombie usually discussed in recent philosophy of mind is a creature physically and behaviorally identical to a human being but devoid of the qualia [subjective feelings – VJT] characteristic of everyday conscious experience. Call this a qualia zombie. If you kick a qualia zombie in the shins he will scream as if in pain; if a flashbulb goes off in his face he will complain about the resulting afterimage; if you ask him whether he is conscious or instead just a zombie he will answer that of course he is not a zombie and that the whole idea is absurd… But [there is] … no actual feeling of pain associated with his scream, no actual afterimage associated with his complaint, no conscious awareness of any sort associated even with his vigorous protest against the suggestion that he is a zombie.

We can consciously perceive a man walking with his dog. But an intentional zombie would have no conscious perception of the man or his dog at all, even though it might say it did. Image courtesy of Christof Koch and Wikipedia.

Another kind of zombie, the intentional zombie, doesn’t have thoughts, feelings or perceptions which are about anything. It has no mental representations whatsoever of the outside world, or the objects in its environment:

A second kind of zombie, an intentional zombie, would be one devoid of intentionality, i.e. the directedness or “aboutness” of at least some mental states. Your belief that it is sunny outside is about or directed at the state of affairs of its being sunny; your perception of a dog in front of you is about or directed at the dog; and so forth…

Consider a creature physically and behaviorally identical to a normal human being or non-human animal but devoid of intentionality. Call this an intentional zombie. An intentional zombie might utter and write the same sounds and shapes a normal human being would, might give what seemed to be the same gestures, and so forth, but none of this would actually involve the expression of any meanings, since the zombie would entirely lack any meaning, intentionality, or aboutness of any sort.

Unlike an animal such as a dog, which is capable of believing that it is about to be fed when it hears its master opening a can of dog food, wanting to eat the food that is in its bowl, and perceiving its food before it gobbles that food down, an intentional zombie would have no beliefs, wants or even perceptions, whatsoever.

However, an intentional zombie could have certain kinds of subjective feelings, so long as those feelings had no particular object. For instance, it might have a vague sense of fear or melancholy, without being able to say what it was afraid of, or sad about.

A cognitive zombie would perceive individual trees, but would lack understanding of the general concept of a tree. Image courtesy of Tomwsulcer and Wikipedia.

A third kind of zombie would have lower-level mental states, including beliefs, desires and perceptions. What it would not have, however, is rational concepts: it would perceive things, but it would not be able to grasp what they are. Feser calls this kind of zombie a cognitive zombie:

Now a cognitive zombie would be a creature physically and behaviorally identical with a human being, but devoid of rationality — that is to say, devoid of concepts and thus devoid of anything like the grasp of propositions or the ability to reason from one proposition to another. It would speak and write in such a way that it seemed to be expressing thoughts and arguments, but there would not be any true cognition underlying this behavior. It would be mere mimicry.

Zombies vs. duplicates

Kjell Elvis, the best Elvis imitator in Europe. A duplicate would look just like you, atom for atom, but would lack mental states of one sort or another (subjective feelings, mental representations or concepts). Unlike the philosophical zombie, however, a duplicate might not behave like you; indeed, it might act very differently. Photo: Jarle Vines (Creative Commons Attribution Sharealike 3.0). Image courtesy of GFDL and Wikipedia.

The zombies described above not only look just like us; they also act just like us, despite their lacking mental states of one sort or another (subjective feelings, mental representations or concepts). Perhaps some of my readers find that notion a bit far-fetched (and they’re right, as I’ll argue below). But what about creatures which look just like us, and lack those mental states, but don’t necessarily act just like us? When I say “look just like us,” I’m talking about atom-for-atom duplicates, whose behavior is nevertheless different from ours. For the sake of convenience, I’m going to call such creatures duplicates. So just as philosophers distinguish three kinds of zombies, we can talk about three kinds of duplicates: qualia duplicates, intentional duplicates and cognitive duplicates.

The philosophical questions we need to consider

For each kind of zombie, and each kind of duplicate, there are several philosophically pertinent questions:

(1) Could such a zombie (or duplicate) exist? Is it possible? More specifically:

(a) Is it logically possible? That is, is the description of such a being internally consistent, and free from contradictions like “square circle” or “married bachelor”?

(b) Is it metaphysically (i.e. really) possible? That is, could such a being exist in some world? (Not all logically possible beings are really possible: the descriptions “object with 1.57 dimensions” or “object with -2 dimensions” or “object which is simultaneously red all over and green all over” are free from any internal contradictions, but are nevertheless conceptually incoherent; no such beings could exist in this world or in any other world.)

(c) Is it nomologically (i.e. naturally) possible? That is, is its existence compatible with the laws of our universe?

(d) Is it theologically possible? That is, could God make such a being? (Not all beings which are really possible are theologically possible; for instance, God, being omnibenevolent, could not make an intelligent race of beings that needed to cannibalize their parents in order to survive.)

(2) Would such a zombie (or duplicate) be viable – that is, able to survive and reproduce, over the long term?

(3) Could such a zombie (or duplicate) have come into existence? In other words, is it temporally possible (i.e. possible over the course of time)? More specifically, could Nature have produced such a zombie (or duplicate), and would we expect Nature to have done so?

(4) Does the kind of mental activity the zombie (or duplicate) lacks have any survival value?

For strict Darwinians, questions (3) and (4) are closely related; for evolutionists who argue that neutral (or nearly neutral) changes drive evolution, the answer to (3) could be “Yes,” even though the answer to (4) is “No.”

I mentioned five kinds of possibility above: logical, metaphysical (or real), nomological, theological and temporal. Philosophers also speak of epistemic possibility, which relates to states of affairs which are possible for all we know. Epistemic possibility does not entail real possibility; all it illustrates is the limitations of our knowledge. (Once upon a time, it was epistemically possible that gold could have had an atomic number of 78 instead of 79; but now we know otherwise.) It is also a mistake to equate epistemic possibility with logical possibility; there are states of affairs which are logically possible, but are ruled out by what one knows, and there are states of affairs which are logically impossible, but not obviously so (hence, I may be unaware of their impossibility).

The zombie problem

Movie art from Night of the Living Dead (1968). Image courtesy of Wikipedia. (Due to a failure to include a copyright notice on the 1968 print, all images from this film are in the public domain.)

Let’s start with zombies. Professor Gelernter’s assertion that many philosophers can imagine the existence of zombies establishes their epistemic possibility. Is it logically possible that some being exists which looks and behaves just like a human being (such as me), even though it is completely devoid of (a) subjective feelings (the qualia zombie); (b) mental states which are about something (the intentionality zombie); or (c) concepts, and the ability to reason (the cognitive zombie)? As far as I can tell, there’s no obvious contradiction in the descriptions of each of these zombies, so I’m prepared to grant their logical possibility. But logical possibility is a very thin kind of possibility: as I pointed out above, there are a number of concepts which, while not formally contradictory, are dubiously coherent at best. I gave some examples relating to dimensionality above, such as a 1.57-dimensional object. Alternatively, we might consider a world having not one, but two dimensions of time. The description is logically consistent, but does it really make sense? That seems doubtful, to say the least.

In order to decide whether the existence of a being is really (or metaphysically) possible, we first need to consider its causal powers (which may be either active or passive). Every being has causal powers, or capacities: a magnet has the power to attract iron filings; some acids have the power to dissolve metals; bacteria have the capacity to reproduce; animals (and many other living things) have the capacity to sense certain stimuli; and human beings have the capacity to reason. To deny that beings have causal powers is equivalent to denying that they can either cause anything or be caused to undergo anything. And a being that could neither cause nor be caused would not be a being at all.

When we consider the causal powers of the three kinds of zombies, we can immediately see that a human being would possess certain powers that each of them lacked. A qualia zombie would lack the power to describe what it was feeling, because it wouldn’t have any feelings. An intentionality zombie would lack the power to say what it was thinking about, or what it wanted, because it wouldn’t have any thoughts or wants. And a cognitive zombie would lack the power to reason. So the question we need to address is: could there exist a being which looks and behaves exactly like a human being in all possible situations, even though it lacks some of the causal powers that a human being possesses?

Two important questions about our mental capacities

When we phrase the question this way, it becomes clear that the answer is “No.” But I imagine most of my readers will protest that I have been too quick in reaching this conclusion. Perhaps, they will say, the zombies have other, compensatory powers which we lack, and perhaps they can replicate our behavior perfectly, by exercising their own special powers. So the questions we need to address are: first, can the capacity to feel, the capacity to have thoughts which are about something, and the capacity to reason, be “cashed out” (or exhaustively described) in terms of their behavioral manifestations, and second, if the answer to the first question is “No,” is there another set of capacities whose behavioral manifestations are fully equivalent to the above-mentioned capacities?

The privacy and inaccessibility of mental states

Many people would answer “No” to the first question, arguing that there must be more to each of these capacities than their outward manifestations, since the very descriptions of these capacities contain references to inherently private mental states (feelings, thoughts/wants/perceptions, and concepts, respectively) which cannot be accessed “from the outside.” They would add that while there may be brain processes accompanying these states, no description of a brain process in a human being’s body could ever tell us that that human being was undergoing a subjective experience (e.g. the smell of a rose) or entertaining a thought about something (e.g. tonight’s dinner) or grasping a concept (e.g. the concept of an equilateral triangle).

Neither of these considerations strikes me as being decisive. Granting that certain mental states are inherently subjective and private, it does not follow from this that these mental states are somehow “more than” the gamut of their objective, public manifestations, except in the metaphysical sense that any causal power is more than its manifestations, as it is the metaphysical ground or basis of those manifestations. The question we really need to answer is: can we understand what it is to have these mental capacities, simply by understanding how they are manifested? And I would answer that question by asking another, rhetorical question of my own: how else could you possibly understand what a power or capacity is, other than by understanding the way in which it is exercised or manifested? When you put it like that, it seems rather obvious, doesn’t it?

Nor am I impressed by the argument that looking inside someone’s brain won’t tell you what mental states they are having. As regards qualia and intentional states, we already know that this is not the case (see here and here): there are machines that can read your brain and tell what images you are seeing, and even what words you are currently listening to. (No machine that can read complex thoughts is on the horizon, however.) But even if the argument were true, what does it prove? Nothing, except that our mental powers are fully manifested outside our heads – which, once again, is pretty obvious, when you think about it.

This is not to belittle the privacy of mental states; but as I’ll argue below, what’s irreducibly mental about them is not their privacy as such, but the self, or agent, in whom these states occur. That, I would argue, is what materialism can never explain.

Gelernter’s leap of logic

Professor David Gelernter, in his excellent essay, The Closing of the Scientific Mind, cites the Australian philosopher David Chalmers as arguing that consciousness doesn’t “follow logically” from the design of the universe as we know it scientifically – which is perfectly true. For that matter, even the chemical properties of water don’t “follow logically” from our scientific understanding of the properties of hydrogen and oxygen: in other words, they are emergent. What Chalmers’ argument establishes is the falsity of reductionism: the world cannot be understood from the bottom up. Some of its properties can only be known from the top down. All well and good.

But then Gelernter continues: “Nothing stops us from imagining a universe exactly like ours in every respect except that consciousness does not exist.” Well, we might imagine such a world, but can we really conceive of one? The fact that consciousness doesn’t “follow logically” from the design (or structural properties) of the cosmos does not imply the conclusion that there could be a universe exactly like ours in every respect except that it lacked consciousness. That conclusion would only follow if real (or metaphysical) possibility were equivalent to logical possibility in its scope. But as we’ve seen, there are good philosophical grounds for rejecting the equation of real possibility with logical possibility.

In my discussion of duplicates below, I shall argue that the law-like connections between physical states and conscious mental states in our universe are a powerful reason to believe that there could not be “a universe exactly like ours in every respect except that consciousness does not exist.”

The Identity of Indiscernible Powers

What about the second question? Even supposing that our mental powers could not be fully grasped by grasping their public manifestations, it doesn’t follow that there are other, non-mental capacities which perfectly mimic our mental powers, in terms of their public manifestations. Indeed, it would be very odd if there were two distinct powers whose public manifestations were in every respect identical. One would naturally want to ask: what distinguishes them, then? Are they not the same?

Gottfried Wilhelm Leibniz (1646-1716). The portrait was made around 1700 and is kept in the Herzog-Anton-Ulrich-Museum, Braunschweig. Image courtesy of Wikipedia.

The philosopher Gottfried Wilhelm Leibniz (1646-1716) proposed a principle now known as the Identity of Indiscernibles: if two things share all properties, they are identical. What I would propose is that if two powers are the same in their range of effects, then those powers are identical.
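For readers who like to see such principles written out, here is one rough way of putting the two claims side by side. This is only a sketch: the first line is the usual second-order statement of the Identity of Indiscernibles, while the symbol $E(P)$, standing for the full range of effects of a power $P$, is a hypothetical piece of shorthand introduced here purely for illustration.

$$
\forall x\,\forall y\,\bigl[\,\forall F\,(Fx \leftrightarrow Fy) \rightarrow x = y\,\bigr]
$$

$$
\forall P\,\forall Q\,\bigl[\,E(P) = E(Q) \rightarrow P = Q\,\bigr]
$$

On this reading, a zombie power that matched a mental power in every one of its effects would simply be that mental power under another name.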

My verdict on zombies

So I have to say that I am very skeptical about the real possibility of zombies, of any kind. And if zombies are not really (or metaphysically) possible, then it follows that they are neither nomologically possible (i.e. permitted by the laws of our universe) nor theologically possible, since even God cannot make that which cannot exist in reality. In that case, questions as to whether zombies could have come into existence, or whether Nature could have produced them, simply do not arise.

Are some animals like qualia zombies?

In defense of qualia zombies, it might be urged that the fact that we don’t know for sure which animals have qualia (subjective experiences) and which ones don’t, demonstrates that qualia cannot be cashed out behaviorally. But I wouldn’t be so sure about that. All it really shows is that we haven’t yet identified a scientifically reliable behavioral test for the existence of qualia in animals. There’s a very simple reason for that: we still don’t know what qualia are for. What biological purpose do they serve? We don’t know. There are several theories about this (which I’ll discuss below), but there’s currently no scientific consensus on the subject. Nothing surprising there: until last year, we didn’t even know what sleep is for.

Feser’s argument against qualia zombies and intentional zombies

Professor Edward Feser, in his article on zombies, rejects qualia zombies on the grounds that they reflect a flawed, mechanistic, Cartesian understanding of matter, which strips it of all phenomenal properties, leaving only those properties which can be treated mathematically. Feser argues that no piece of matter can have the substantial form of a dog, without also having a dog’s subjective experiences, or qualia. He adds:

In the same way, given what a dog is, it [is] necessarily going to have states which point to or are directed at things like food, mating opportunities, predators, etc. Hence there is, given the Aristotelian conception of material substance, no such thing as a creature materially and behaviorally identical to a dog yet lacking any “directedness” of any sort.

I’d like to make a couple of observations here. First, what Professor Feser’s argument really shows is that having qualia (or subjective experiences), and having thoughts, desires and perceptions which are about objects, is part of the nature of being a dog. But as I understand it, a zombie dog wouldn’t really be a dog; it would just look and act like one. (Ditto for a zombie human.) So the real question we need to address is: could there be a piece of matter which was atom-for-atom identical with the body of a living dog (or human being) as well as behaviorally identical, but which lacked the substantial form of a dog (or human)? Such a being might be either (a) a mere assemblage of parts, without any real unity, or (b) a unity of a different sort (e.g. a “schmog” instead of a dog). Given that the piece of matter in question is alive – for instance, it has a beating heart, like a dog does – then we would have to say that it was indeed a single entity, and not a mere assemblage. So that rules out (a). What about (b)? Given that we distinguish between the different kinds of living things on the basis of their anatomy and their genomes, the notion that a living thing could be physically identical to a dog, and yet something other than a dog, really makes no sense. So it seems that the answer to our question has to be “No,” after all.

Second, assuming that the answer to the above question is “No,” we still need to ask whether such a possibility might be realized in another universe, with different laws from our own. In a parallel universe, could there be something looking and acting just like a dog, but lacking a dog’s qualia and its intentional mental states? It seems to me that Professor Feser has not provided a definitive answer to this question. While he has established the nomological impossibility of zombie dogs (and by the same token, zombie humans), he has not shown that this impossibility also holds in all metaphysically possible worlds.

Why cognitive zombies would be fatal to Intelligent Design

Could a robot have carved Mt. Rushmore? Image courtesy of Dean Franklin and Wikipedia.

But enough of qualia and intentional states. What I’d really like to say is that no Intelligent Design theorist would want to admit the real possibility of cognitive zombies: beings which look and talk and act just like us, but which are utterly incapable of reasoning. Indeed, to concede the possibility of such beings would be fatal to the Intelligent Design program, because it would mean that something that was not a mind would be capable of doing everything that someone with a mind was capable of. And if that were the case, then it would be impossible for us to reliably identify any object found in Nature – say, the faces carved on Mt. Rushmore, or a signal from space containing the first 100 prime numbers – as being the work of a mind, which would mean that the search for patterns in Nature which reliably indicate intelligence would be a futile one. (Actually, I’m amazed that our sharp-minded critics over at the Skeptical Zone haven’t picked up on this point already.)

Now, someone might answer that a robot could be programmed to carve Mt. Rushmore or generate the first 100 primes. To which I would reply: “That’s true, but human beings still have to write the robot’s programs. And even if there were a robot that was capable of writing simple programs for a carefully defined range of tasks (e.g. a program to print a calendar for 2014), someone would still have to write the higher-level program that enabled that robot to write these simple programs. But if there were a robot that was capable of writing the entire range of programs that we (as intelligent beings) are capable of, then we would have to confront the question: how do we know that the Intelligent Designer of Nature is not a robot?”
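To make the first half of that exchange concrete, here is a minimal sketch of the kind of program someone might hand to such a robot. It is only an illustration: the function name `first_primes` is hypothetical, and simple trial division is just one of many ways to do the job. The point it illustrates is the one made above, namely that the program is short, but a person still had to write it.

```python
def first_primes(count):
    """Return a list of the first `count` prime numbers (trial division)."""
    primes = []
    candidate = 2
    while len(primes) < count:
        # A candidate is prime if no earlier prime up to its square root divides it.
        if all(candidate % p != 0 for p in primes if p * p <= candidate):
            primes.append(candidate)
        candidate += 1
    return primes


if __name__ == "__main__":
    # The "signal from space" example: the first 100 primes.
    print(first_primes(100))
```

Calling `first_primes(100)` yields a list that begins 2, 3, 5, 7, 11 and ends with 541.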

Professor J. R. Lucas, who is a mathematician, has constructed some ingenious arguments (see here) showing that there could never exist a machine that was fully equivalent to a mind, in its behavioral capacities. Professor Lucas argues that while a machine can (in theory) simulate any particular piece of mind-like behavior, no machine could possibly exist that can simulate every piece of behavior that an intelligent creature with a mind is capable of. In short: there never will be a machine that can do everything that an intelligent being can do.

I was very heartened to see that Denyse O’Leary, in her post on zombies (But can nature create human consciousness at all?, January 4, 2014), chose to focus specifically on human consciousness:

There is no reason — apart from a faith belief in naturalism — to believe that nature, which is unconscious, can produce human consciousness at all. Whether such consciousness has survival value or not. The best nature can do is produce animal consciousness (awareness of and response to one’s surroundings and experience). The rest of it — the abstractions, the ethical wrestles, the aesthetic quarrels, the tussle with mortality — does not seem to be part of nature’s remit.

“[T]he abstractions, the ethical wrestles, the aesthetic quarrels, the tussle with mortality”: we should never expect to see a machine exhibiting these features of human behavior. Wise words indeed.

Does consciousness have survival value?

One biological purpose proposed for consciousness is to enable humans (and perhaps other animals) to engage in planning. Image courtesy of Wikipedia.

I’d now like to say something about the survival value of consciousness. There is a substantial body of research indicating that in human beings, at least, consciousness does have a practical purpose. I’d like to quote from E. J. Masicampo and Roy Baumeister’s recent article, Conscious thought does not guide moment-to-moment actions—it serves social and cultural functions (Frontiers in Psychology, 26 July 2013, doi: 10.3389/fpsyg.2013.00478):

(Abstract)

Humans enjoy a private, mental life that is richer and more vivid than that of any other animal. Yet as central as the conscious experience is to human life, numerous disciplines have long struggled to explain it. The present paper reviews the latest theories and evidence from psychology that addresses what conscious thought is and how it affects human behavior. We suggest that conscious thought adapts human behavior to life in complex society and culture. First, we review research challenging the common notion that conscious thought directly guides and controls action. Second, we present an alternative view — that conscious thought processes actions and events that are typically removed from the here and now, and that it indirectly shapes action to favor culturally adaptive responses. Third, we summarize recent empirical work on conscious thought, which generally supports this alternative view. We see conscious thought as the place where the unconscious mind assembles ideas so as to reach new conclusions about how best to behave, or what outcomes to pursue or avoid. Rather than directly controlling action, conscious thought provides the input from these kinds of mental simulations to the executive. Conscious thought offers insights about the past and future, socially shared information, and cultural rules. Without it, the complex forms of social and cultural coordination that define human life would not be possible.

In their article, Do Conscious Thoughts Cause Behavior? (Annual Review of Psychology, 2011, 62:331–61), authors Roy F. Baumeister, E. J. Masicampo, and Kathleen D. Vohs go into more detail about the role played by consciousness in human behavior:

We review studies with random assignment to experimental manipulations of conscious thought and behavioral dependent measures. Topics include mental practice and simulation, anticipation, planning, reflection and rehearsal, reasoning, counterproductive effects, perspective taking, self-affirmation, framing, communication, and overriding automatic responses. The evidence for conscious causation of behavior is profound, extensive, adaptive, multifaceted, and empirically strong. However, conscious causation is often indirect and delayed, and it depends on interplay with unconscious processes. Consciousness seems especially useful for enabling behavior to be shaped by nonpresent factors and by social and cultural information, as well as for dealing with multiple competing options or impulses. It is plausible that almost every human behavior comes from a mixture of conscious and unconscious processing.

It seems prudent to conclude, then, that the biological usefulness of consciousness in human behavior is a scientifically established fact. Quite clearly, consciousness does aid human survival.

Consciousness as a monitor: the biological function of consciousness in non-human animals?

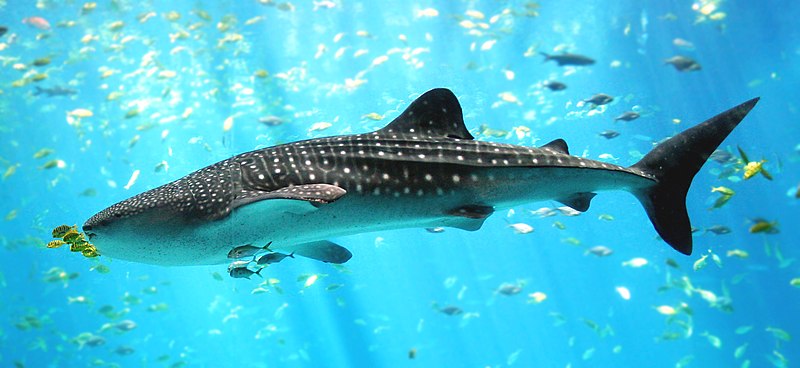

Fish such as the whale shark have no need for consciousness. Image courtesy of Zac Wolf, Stefan and Wikipedia.

What about consciousness in other animals? Professor James D. Rose, in his article, The Neurobehavioral Nature of Fishes (Reviews in Fisheries Science, 10(1): 1–38, 2002), invokes a computer analogy to explain why fish don’t need it, and then speculates as to what purpose it might serve in higher animals, such as human beings:

…[F]or organisms such as fishes, which have no neocortex at all, it seems entirely logical that none of their brain activity could be consciously experienced. Although computer analogies with brain function are often misleading, a simple example may help to communicate this argument. Consciousness functions as a monitor that gives us awareness of some but not all operations that are occurring in our brain. In our consciousness, most of the activity in the spinal cord, brainstem, and some parts of the cortex is not displayed on the monitor of our consciousness, so we are unaware of it despite its effective functioning. In animals without the consciousness monitor provided by the neocortex, brain and spinal cord activity function effectively, as they do in our subcortical systems, without any means for reaching awareness or any need for it, just as programs do in a computer with the monitor off…

In contrast to the predictable, species-typical behavior of fishes, the immense diversity of human solutions to problems of existence through novel, mentally contrived strategies, is unparalleled by any other form of life. The full range of this behavioral diversity and flexibility depends on the capacity for consciousness afforded by the human neocortex (Kolb and Whishaw, 1995; Donald, 1991).

Another computer analogy is instructive here. Those of us who used desktop computers throughout their development during recent decades have seen greatly increased capabilities of these devices emerge with absolute dependence on changes in hardware and software. It is inconceivable that one could run Windows™, read and write to compact disks, and search the Internet, all at high speed and in high-resolution color, with a 1982 desktop computer that had minimal memory, no hard disk drive, and a monochrome monitor. The massive additional neurological hardware and software of the human cerebral cortex is necessary for the conscious dimension of our existence, including pain experience. However, as in fishes, our brainstem-spinal systems are adequate for generation of overt reactions. To propose that fishes have conscious awareness of pain with vastly simpler cerebral hemispheres amounts to saying that the operations performed by the modern computer could also be done by the 1982 model without additional hardware and software.

Thus, fishes have nervous systems that mediate effective escape and avoidance responses to noxious stimuli, but, these responses must occur without a concurrent, human-like awareness of pain, suffering or distress, which depend on separately evolved neocortex. Even among mammals there is an enormous range of cerebral cortex complexity. It seems likely that the character of pain, when it exists, would differ between mammalian species, a point that has been made previously by pain investigators (Melzack and Dennis, 1980; Bermond, 1997).

Thus according to Professor Rose, the biological purpose of consciousness in mammals is to enable them to monitor their own behavior, especially when they are placed in novel situations which demand a sophisticated and flexible response. At the same time, Rose rightly cautions against over-reliance on computer metaphors for consciousness.

In his essay, The Closing of the Scientific Mind, David Gelernter points to several significant disanalogies between minds and computers: for instance, you can’t transfer a mind from one brain to another but you can transfer software from one computer to another; minds are opaque (you can’t know what I’m thinking unless I tell you), while software is transparent; and minds cannot be programmed to run in any way we choose, whereas computers can. Nevertheless, this does not mean that computers cannot be profitably studied in order to shed light on how consciousness might arise, and what functions it might serve.

In his 2008 essay, Functions of consciousness, Dr. Anil Seth, Professor of cognitive and computational neuroscience at the University of Sussex, draws together the latest scientific thinking on the biological role of consciousness in animals possessing it. He proposes that consciousness integrates information, and that it does so by ruling out an enormous number of alternative possibilities and thereby generating an incredibly large amount of information:

A recurring idea in theories of consciousness is that consciousness serves to integrate otherwise independent neural and cognitive processes. This ‘integration consensus’, which can be traced back at least to (Sherrington 1906), has been expressed most forcefully in the cognitive context by Baars’ ‘global workspace’ theory (Baars 1988; Baars 2002) and in the neurophysiological context by Edelman (Edelman 1989; Edelman 2003; Edelman & Tononi 2000) and Tononi (Tononi 2004; Tononi & Edelman 1998). Most participants within the integration consensus see consciousness as a “supremely functional adaptation” (Baars 1988), particularly with respect to enabling flexible, context-dependent behavior.…

According to the integration consensus, consciousness functions to bring together diverse signals in the service of enhanced behavioral flexibility and discriminatory capacity. Theoretical proposals within this consensus are among the most highly developed and are increasingly open to experimental testing. However, integration theories must explain why consciousness is necessary since many integrative functions seem plausibly executable by unconscious devices.

Seth’s highly readable essay, Consciousness: Eight questions science must answer (The Guardian, 1 March 2012) is also well worth perusing, especially for non-specialist readers.

The philosopher Peter Carruthers has proposed another hypothesis regarding the biological function of consciousness: he suggests that it allows an animal to distinguish between appearance and reality. Such an ability would enable it to realize that its perceptions were false (e.g. that the oasis it sees in the distance is a mirage) and cease engaging in behavior that was fruitless, turning instead to something likely to be more beneficial to it. Consciousness could also make it easier for an animal to manipulate other individuals for either cooperative or selfish ends, by imagining how things appear to them (which presupposes that the animal in question has a theory of mind). Because Carruthers holds to a higher-order account of consciousness, he personally believes that very few, if any, non-human animals are conscious.

The hypotheses proposed on the biological role of animal consciousness are tentative, and much research remains to be done. Nevertheless, they serve to rebut the charge that consciousness has no biological function: at the present time, there are several plausible functions that it might turn out to have.

Marian Dawkins on the biological function of pain

But, some may ask, what about the assertion made recently by Professor Marian Stamp Dawkins, Professor of Animal Behavior and Mary Snow Fellow in Biological Sciences, Somerville College, Oxford University, in her latest book, Why Animals Matter (Oxford University Press, 2012), that “everything that animals do when they make choices or show preferences or even ‘work’ to get what they want could be done without conscious experience at all”? What Marian Dawkins is saying here is that as far as we know, the entire gamut of animal behavior could be replicated by organisms that were not conscious – so we can’t be sure whether other animals really are conscious or not. But the uncertainty here is epistemic, not metaphysical. Professor Marian Dawkins is not suggesting that consciousness has no biological purpose; rather, what she is saying is that we haven’t yet identified the biological purpose of consciousness in non-human animals – which is entirely true, at the present time.

Richard Dawkins confuses pain with nociception

Professor Richard Dawkins, on the other hand, does not appear to be as familiar with the literature on pain as he should be. In a 2011 article titled “But can they suffer?”, he poses the question of “what, in the Darwinian sense, pain is for,” and answers that “It is a warning not to repeat actions that tend to cause bodily harm.” On the contrary: this is the biological purpose served by nociception (a tendency to react to noxious stimuli), not pain. Virtually all animals (with the exception of sponges and, interestingly, sharks) exhibit nociception, but the number of animal species thought to be capable of feeling pain is far smaller: Professor Rose, for instance, considers only mammals to possess this capacity (and I would add birds, in light of the fact that we now know that they possess a homologue of the mammalian neocortex). Pain, as Rose insists again and again in his 2002 article, The Neurobehavioral Nature of Fishes, requires the existence of brain structures possessing a high degree of interconnectivity and synchrony:

The reasons why neocortex is critical for consciousness have not been resolved fully, but the matter is under active investigation. It is becoming clear that the existence of consciousness requires widely distributed brain activity that is simultaneously diverse, temporally coordinated, and of high informational complexity (Edelman and Tononi, 1999; Iacoboni, 2000; Koch and Crick, 1999; 2000; Libet, 1999). Human neocortex satisfies these functional criteria because of its unique structural features: (1) exceptionally high interconnectivity within the neocortex and between the cortex and thalamus and (2) enough mass and local functional diversification to permit regionally specialized, differentiated activity patterns (Edelman and Tononi, 1999). These structural and functional features are not present in subcortical regions of the brain, which is probably the main reason that activity confined to subcortical brain systems can’t support consciousness. Diverse, converging lines of evidence have shown that consciousness is a product of an activated state in a broad, distributed expanse of neocortex. Most critical are regions of “association” or homotypical cortex (Laureys et al., 1999, 2000a-c; Mountcastle, 1998), which are not specialized for sensory or motor function and which comprise the vast majority of human neocortex. In fact, activity confined to regions of sensory (heterotypical) cortex is inadequate for consciousness (Koch and Crick, 2000; Lamme and Roelfsema, 2000; Laureys et al., 2000a,b; Libet, 1997; Rees et al., 2000). (PDF, p. 6)

In his article, Professor Richard Dawkins briefly touches on nociception, only to reject it. Dawkins considers the possibility that an animal might have a “little red flag,” that could warn it to shun noxious stimuli, without its having to consciously feel pain. However, Dawkins dismisses the possibility, on the grounds that the disincentive wouldn’t be strong enough if the animal didn’t actually feel the harm inflicted by the stimulus. Such an animal, suggests Dawkins, would “need to be whipped agonizingly into line” by the feeling of pain, in order to guarantee that it would avoid damaging stimuli in the future.


A model of the capsaicin receptor, a protein which, in humans, is encoded by the TRPV1 gene. The receptor alerts animals to the presence of a noxious, scalding hot stimulus – a striking example of nociception in animals.

Dawkins goes on to suggest that “unintelligent species might need a massive wallop of pain, to drive home a lesson that we [i.e. clever human beings – VJT] can learn with less powerful inducement.”

I have to say that this proposal of Dawkins’ is comically mistaken. To whip an animal into line (to use Dawkins’ phrase), what is needed is not the conscious feeling of pain, but exquisitely sensitive nociceptors, which detect noxious stimuli at some distance and cause the animal to run away immediately.

Zombies: would Nature have done it that way?

Barry Arrington, in his article, Nature Wouldn’t Have Done It That Way (January 3, 2014), argues that “[i]f a conscious person and a zombie behave exactly alike, consciousness does not confer a survival advantage on the conscious person,” which means that “consciousness cannot be accounted for as the product of natural selection.” He concludes that “Nature would not have done it that way.”

As we have seen, however, the notion of a zombie – be it a qualia zombie, an intentional zombie or a cognitive zombie – is philosophically incoherent. What’s more, there are several plausible hypotheses regarding the biological function of consciousness in sentient animals, and there’s also an abundant body of research pointing to its usefulness in human beings.

In a vigorously worded rebuttal, Dr. Elizabeth Liddle points out that by definition, a zombie that was capable of behaving just like a conscious person would have to not only react to stimuli in its environment but also exhibit a vast range of capabilities, including “anticipation, choice of strategy, weighing up immediate versus distant goals and deciding on a course of action that would best bring about the chosen goal, being able to anticipate another person’s wishes, and regard fulfilling them as a goal worth pursuing (i.e. worth spending energy on), being able to anticipate another person’s needs, and regard alleviating that person’s needs [as worth satisfying].” She argues that any entity capable of doing all that would surely be conscious. “What is consciousness, if not these very capacities?” she asks.


Three bottles of eau de vie (“water of life”), a colorless fruit brandy. Cropped version of original photo taken by Flickr user Charles Haynes. Source: Charles Haynes’s Flickr page: http://www.flickr.com/photos/haynes/291182294/ Image courtesy of Wikipedia.

I have to side with Dr. Liddle here. Subjective experiences do not simply cause us to behave in certain ways; they also cause us to talk in certain ways. Among the linguistic behavior that characterizes consciousness is the ability to describe what one is experiencing, using similes and metaphors. For example, we might say that the color scarlet is to the senses what the trumpet is to the orchestra, or we might say that orange is a bold, happy color. The French refer to a colorless fruit brandy as the water of life (eau de vie).

Or consider Robert Burns’ 1794 poem, A Red, Red Rose, which begins as follows:

O my Luve’s like a red, red rose,

That’s newly sprung in June:

O my Luve’s like the melodie,

That’s sweetly play’d in tune.

Could an unconscious zombie come up with a poem like that? I think not. Like Dr. Liddle, I find the notion that a mindless entity could replicate the full range of behavior characteristic of conscious beings, utterly incredible.

Dr. Liddle continues:

And if consciousness is these very capacities, then why should they not evolve? They are certainly likely to promote successful survival and reproduction.

Here, I am afraid, I would have to differ with her. There are many capabilities which might aid survival, but as any Darwinian will tell you, if there is no viable pathway whereby an organism lacking those abilities can evolve into a creature possessing them, then they will never emerge. I’ll say more below about why I think the evolution of conscious life, even at an animal level, would be extremely unlikely. For the time being, I shall content myself with pointing out that the vast majority of animal species get by perfectly well without consciousness, and that even if we live in a world where there is a step-by-step sequence of changes leading to the emergence of consciousness, that is still a contingent fact about the world, which cries out for an explanation.

Barry Arrington, in a counter-rebuttal to Dr. Liddle, argues that a zombie’s physical outward actions could be programmed to be the same as those of a conscious person such as himself, and gives the example of a computer which is programmed to print out the sentence, “Oh, what a beautiful sunset,” when it detects the sun setting in the western sky, at the precise same moment that he, witnessing the beauty of the sunset, utters the same sentence. But as Dr. Liddle points out in a follow-up post, “the computer is not behaving like Barry.” All we can say here is that “[t]here is a tiny overlap in behaviour – both are outputting an English sentence that conveys the semantic information that the sunset is beautiful.” In every other respect, Barry’s reaction to the sunset is completely different from the computer’s.

So what would a computer need to have, in order to display the same reaction to the sunset as Barry? In her follow-up post, Dr. Liddle observes that “[i]n classic Philosophical Zombie thought experiments, the two Freds are physically identical, right down to the last ion channel in the last neuron.” It therefore follows that a zombie displaying the same reactions to the color red as a conscious person would need the same causal mechanisms operating in its body: “all the mechanisms that generate that explicit and implicit knowledge in response to a red stimulus would have to be present in Zombie Fred for Zombie Fred to react to red as Fred does.”


Red is a color we associate with blood. Blood samples, right: freshly drawn; left: treated with EDTA (anticoagulant). Image courtesy of J. Heuser and Wikipedia.

Dr. Liddle argues that what it means to experience a quale such as the color red is intimately bound up with our affective responses to the color:

…I suggest that the “quale” of red consists not only of all the explicit knowledge I listed first, it also includes implicit knowledge of what I feel like when we see red things, gained from partly from my life experience, but partly, I suspect, bequeathed to me by evolution in the genes that constructed my infant brain.

And in the case of red, specifically, I suggest it is a slight elevation of the sympathetic nervous system, resulting from both learned and hard-wired links between things that are red, and which have in common both danger and excitement – fire and blood being the most primary, edible fruit probably as a co-evolutionary outcome, and fire engines, warning lights, stop lights, etc as learned associations.


The philosopher and clergyman William Paley considered the pleasant taste of food to be a powerful argument for the existence of a Deity. Image courtesy of the National Cancer Institute and Wikipedia.

Which brings us back to the question: would Nature have done it that way? I would argue that Barry Arrington is right in arguing that Nature wouldn’t have done so, but I would disagree with his implied assumption that the conscious feelings accompanying our behavior are something added onto the causal mechanisms which produce that behavior, which might suggest that they were put there by a benevolent God Who wanted us to experience the pleasure of having them. William Paley put forward a similar argument in his Natural Theology, when marveling at the pleasure we experience from the mere act of eating:

A single instance will make all this clear. Assuming the necessity of food for the support of animal life; it is requisite, that the animal be provided with organs, fitted for the procuring, receiving, and digesting of its food. It may be also necessary, that the animal be impelled by its sensations to exert its organs. But the pain of hunger would do all this. Why add pleasure to the act of eating; sweetness and relish to food?

(Natural Theology: or, Evidences of the Existence and Attributes of the Deity. 12th edition, 1809. London. Chapter 26, pp. 483-484)

Were it the case that conscious feelings are added onto our physical and behavioral dispositions, then it would not only be mysterious why Nature put them there; it would also be a puzzle why God added those feelings, for as St. Thomas Aquinas was fond of saying, “Nothing is void in God’s works” (Summa Theologica I, q. 98, article 2), and “God and nature make nothing in vain” (Summa Theologica III, q. 9, art 4).

Nevertheless, I am awestruck, like Barry and like William Paley, at the unexpected beauty of the natural world. Indeed, the mere fact of being able to see anything at all strikes me as nothing less than a continual miracle – “the vision splendid,” as I like to call it. And my wonder is in no way diminished by the fact that my eyes are not as sharp as they used to be. I can still see stars and sunsets, blue skies and verdant hills, and I still wonder why I am lucky enough to be able to see them.

Why Nature, left to itself, wouldn’t have given rise to consciousness

Wonder is all well and good, but we need to be able to properly identify the grounds for this wonder, before we can make an argument from it. I would suggest that there are two grounds.

First, as I mentioned above, the fact that consciousness has survival value doesn’t mean that it could have evolved – in other words, survival value, in and of itself, doesn’t guarantee that consciousness is temporally possible. On the contrary, all the data suggest that even the emergence of life, let alone conscious life, was a fantastically improbable event, from a purely natural perspective. Dr. Stephen Meyer, in his recent book, Darwin’s Doubt: The Explosive Origin of Animal Life and the Case for Intelligent Design, has argued that the emergence of 30 animal phyla within a relatively brief geological timespan (5 to 20 million years, depending on how you define the Cambrian explosion) is a fact that naturalistic theories of unguided evolution are unable to account for, in view of the vast amounts of information required to produce these animals’ developmental regulatory networks (indeed, Meyer argues that even billions of years wouldn’t be enough time).

When we consider the further fact that conscious animals are confined to two classes (mammals and birds) of one animal phylum (Chordates, which includes all vertebrates), comprising a mere 15,000, or 0.2%, of the estimated 7,700,000 species of animals inhabiting this planet (that’s right, 99.8% of all species of animals get along just fine without consciousness), we can truly appreciate that the emergence of conscious beings on Earth was anything but a foregone conclusion. We cannot thank “Mother Nature” for this fortunate state of affairs; instead, we should thank the Intelligent Author of Nature.

The second source of wonder is the fact that we live in a world in which consciousness – and in particular, conscious pleasure – is possible. Granting (for argument’s sake) that our conscious feelings of pleasure are simply an automatic consequence of the processes going on in our brains when we are presented with certain kinds of stimuli (such as food), it is still an amazing fact that there are any configurations of matter in our universe that are capable of giving rise to pleasurable feelings at all. It is here, I would suggest, that Paley’s feeling of wonder is rightly placed. The existence of a cosmos in which pleasure is nomologically possible – that is, where it happens in a reliable, law-like fashion when the physical conditions are right – is surely a contingent fact, and we have every right to marvel at that fact, and ask: “Why is it so?”

Finally, the fact that we can reflect on our experience of pleasure, and understand what it is and where it springs from, is an even more astonishing fact, and one which we would not expect in a universe whose creatures were adapted simply for survival. Our ability to enjoy the colors of autumn is one thing; our ability to write an Ode to Autumn is quite another – and one we should expect only in a universe designed by a benevolent Creator.

Some brief thoughts on the argument from suffering

The Argument from Suffering, which is commonly put forward against the existence of God, has a great deal of emotional force to it; but the existence of beauty in every nook and cranny of the cosmos is the one fact that overcomes it, on the emotional level. Hideous though it is, evil is local; beauty, on the other hand, is found in every facet of Nature. It is the subjective (but shareable) experience of beauty in the cosmos, more than anything else, which persuades me that the world we live in is a fundamentally good one.

Conscious reflection is incompatible with materialism

I would add that conscious reflection is an activity that cannot, even in principle, be explained in materialistic terms. In this respect, it is much more of a puzzle for materialism than qualia. The philosopher Thomas Nagel, in his paper, What is it like to be a bat? (The Philosophical Review LXXXIII, 4 (October 1974), pp. 435-50), cautions that while qualia invalidate reductionism, they in no way disprove physicalism:

We appear to be faced with a general difficulty about psychophysical reduction….

What moral should be drawn from these reflections, and what should be done next? It would be a mistake to conclude that physicalism must be false.… It would be truer to say that physicalism is a position we cannot understand because we do not at present have any conception of how it might be true.

But conscious reflection is of an altogether different character. When we reflect, our thoughts have meaning all of their own. And semantic meaning is not a property that can be attributed to material processes as such: in and of themselves, they have no meaning whatsoever. What we have here, then, is an unbridgeable gulf between the character of the mental and the physical. As Professor Edward Feser put it in a recent blog post (September 2008):

Now the puzzle intentionality poses for materialism can be summarized this way: Brain processes, like ink marks, sound waves, the motion of water molecules, electrical current, and any other physical phenomenon you can think of, seem clearly devoid of any inherent meaning. By themselves they are simply meaningless patterns of electrochemical activity. Yet our thoughts do have inherent meaning – that’s how they are able to impart it to otherwise meaningless ink marks, sound waves, etc. In that case, though, it seems that our thoughts cannot possibly be identified with any physical processes in the brain. In short: Thoughts and the like possess inherent meaning or intentionality; brain processes, like ink marks, sound waves, and the like, are utterly devoid of any inherent meaning or intentionality; so thoughts and the like cannot possibly be identified with brain processes.

Drawing upon the work of the philosopher James Ross, Feser goes on to argue that our fine-grained concepts are determinate in a way in which no physical representation of them could ever be. In a highly accessible post, Feser sums up Ross’s argument in a nutshell: “The point is that an abstract concept could not, even in theory, be material, given that concepts are determinate and material things are indeterminate.” For readers who are interested, Ross’s original paper is titled, Immaterial Aspects of Thought (The Journal of Philosophy, Vol. 89, No. 3 (Mar. 1992), pp. 136-150). In this article, Professor Ross argues that thought is immaterial because it has a definite, determinate form. As he puts it: by its nature, thinking is always of a definite form – e.g. right now, I am performing the formal operation of squaring a number. But no physical process or sequence of processes, or even a function among physical processes, can be definite enough to realize (or “pick out”) just one, uniquely, among incompossible forms. For example, when I perform the mental operation of squaring, there is nothing which makes my accompanying neural processes equivalent to the operation of squaring and not some other mental operation. Thus, no such process can be such thinking. On the last page, in a footnote, Ross makes a similar argument for the propositional content of thought: when I think that it is going to rain tomorrow, there is nothing in my brain which unambiguously corresponds to this thought, as opposed to some other thought. Ross also responds to the objection that computers can add and perform other abstract operations: “Machines do not process numbers (though we do); they process representations (signals). Since addition is a process applicable only to numbers, machines do not add. And so on for statements, musical themes, novels, plays, and arguments.” A more up-to-date, expanded version of Ross’s arguments can be found here.


The idea of an ‘internal viewer’ inside our heads is said to generate an infinite regress of internal viewers, according to one popular argument against the existence of a disembodied “self.” However, the infinite regress argument doesn’t work against the hypothesis of a unified self which is capable of both embodied and disembodied acts. Image courtesy of Jennifer Garcia and Wikipedia.

The concept of the self is no less resistant to explanation in material terms. Some philosophers picture the self as a kind of confabulation created by the brain: a fictitious but useful notion that it creates in order to make sense of mental phenomena such as simultaneous multi-sensory experiences, biological feedback and feed-forward, and episodic memories. “There is no man inside your head,” we are constantly told. “That’s the homunculus fallacy.”

The first thing that needs to be said here is that the notion of a confabulation is altogether unhelpful in explaining how we come to believe that each of us has a “self.” For the activity of “confabulating” is itself an attempt to find meaning in the “blooming, buzzing confusion” that constitutes our first impression of the world, as babies (William James, The Principles of Psychology, Cambridge, MA: Harvard University Press, 1981, p. 462. Originally published in 1890.) But as we have seen, meaning cannot be attributed to material processes, as such. Hence the notion that the brain invents the concept of the self in order to make meaning of its impressions of the world, doesn’t even get off the ground.

The second thing that needs to be remarked on here is that the so-called homunculus fallacy is a straw man. According to standard textbook accounts, the notion that someone inside our brain looks at the images formed on our retinas as if they were images on a movie screen invites the further question of how this “someone” manages to “see” its internal movie. Is there another homunculus inside the first homunculus’s “brain” looking at this movie – and if there is, wouldn’t that generate an infinite regress of homunculi? But it is not at all clear why we need to ask the question of how the homunculus sees in the first place. Such a question would only arise if we pictured the self in Cartesian terms as wholly separate from the body. What I am arguing here instead is that the self is an integrated and unified entity, which is capable of both bodily and non-bodily activities (reasoning, understanding and making choices being among the latter). Because the self is embodied, it is no mystery at all that events in the outside world make an impression on the self, when they impinge on its nervous system and when the information gets relayed to its brain.

On the possibility of duplicates

Having disposed of the zombie problem, it remains to address the question of whether duplicates are possible, that is, beings that look just like us but lack one aspect or another of consciousness: subjective feelings, intentional states (beliefs/desires/perceptions), or concepts. Unlike philosophical zombies, duplicates may behave in ways strikingly different from normal human beings: only their bodies are the same.

Are duplicates really (i.e. metaphysically) possible? I would argue that qualia duplicates and intentional duplicates are not really possible, and that while cognitive duplicates are really (i.e. metaphysically) possible, they would not be a viable life-form; hence they are theologically impossible.

Let’s discuss qualia and intentional duplicates first, as the mental powers which they lack are not unique to human beings, but are possessed by some non-human animals as well. In his article, The Closing of the Scientific Mind, Professor David Gelernter writes: “Nothing stops us from imagining a universe exactly like ours in every respect except that consciousness does not exist.” I would argue that for the lower kinds of consciousness, this is false: indeed, I maintain that there could not even be a universe with creatures looking exactly like us, but lacking qualia, or for that matter, lacking the kinds of beliefs, desires and perceptions that a dog or a chimpanzee (say) might have. In other words, not only zombies, but also qualia duplicates and intentional duplicates are not really possible.


Cross-section and full view of a hothouse (greenhouse-grown) tomato. Image courtesy of fir0002 (flagstaffotos.com.au) and Wikipedia.

My reason for holding this view is the existence of law-like connections between physical events and lower-order mental events. For instance, if I’m looking at a ripe tomato under normal viewing conditions, and if my eyes are functioning properly, I will see the color red. I will subjectively experience that color as a quale. And if my brain is functioning properly, then I will perceive that the object before me is a tomato, and (on the basis of my previous pleasant experiences of eating ripe tomatoes), I will automatically come to believe that the tomato in front of me is good to eat, and desire to eat it. These events happen automatically, and while psychologists have not yet formulated laws (like those in physics and chemistry) describing how these events happen, their very regularity (assuming that we live in a rational universe) constitutes strong evidence that there are indeed law-like (i.e. nomological) links between the different kinds of physical and mental events that occur when I see a tomato.

Thus it is nomologically impossible, or against the laws of our universe, for a creature having a functioning body and brain identical to mine, to see a tomato and not experience any qualia or beliefs, desires and perceptions. But might it not be really possible for such a creature to exist, in some alternative universe, with different laws from our own? Here again, the answer must be no.

The reasoning here gets more subtle. We have to ask ourselves: does it make sense to speak of a creature having a functioning body and brain identical to ours, but subject to different laws of Nature? The answer, I would suggest, is surely no: if the laws of Nature in that creature’s alternative universe were different, then the movements of its constituent particles would also be different – in which case, the creature’s body would be different. Hence it makes sense to suppose that a duplicate might exist only in a universe with the same laws as ours. This means that if qualia duplicates and intentional duplicates are nomologically impossible (as I have argued above), then they are really impossible as well.

I stated above that the qualia, beliefs, desires and perceptions occurring in qualia duplicates and intentional duplicates are mental events which are triggered automatically. But the same cannot be said for our acts of reasoning about tomatoes. You cannot make me formulate a syllogism in my head by stimulating my brain with electricity, and you cannot make me choose to eat that tomato in a way that requires the use of reason (e.g. slicing it up into equal portions, for each member of my family) by stimulating my brain with electricity either. (N.B. I’m aware that by stimulating someone’s brain in a certain way, you can make them feel an overpowering desire to eat a tomato, but when you ask them why they ate it, they confabulate: they make up a reason, because they know they haven’t got a reason for what they did. I wouldn’t call that an act of will: would you?) The intellect’s act of reasoning and the will’s act of rational choice are not events that appear to occur in an automatic, law-like fashion: as far as we can tell, they are free. The same goes for the process whereby I try to discover what it is, precisely, that makes a tomato a tomato, and thereby arrive at a rational concept of what it is.

Cognitive duplicates: really possible, but temporally and theologically impossible

Which brings us to cognitive duplicates. Associate Professor Kenneth Kemp, in a widely discussed article on Adam and Eve, entitled, Science, Theology and Monogenesis (American Catholic Philosophical Quarterly, 2011, Vol. 85, No. 2, pp. 217-236), maintained that cognitive duplicates were not only possible, but actual beings who existed at some time in the past, around the dawn of humanity. Because these beings were mentally like us in all respects except that they lacked reason and free will, I’m going to call them human beasts.

Professor Kemp contended in his article that there are no fewer than three distinct concepts of humanity: biological (belonging to the human species, genetically speaking: i.e. being able to readily inter-breed with people), philosophical (having an ability to reason) and theological (having the opportunity to be in a state of eternal friendship with God). The third is a subset of the second, which is a subset of the first; hence every rational human being is a genetically human animal, but not vice versa. Professor Kemp maintains that Adam and Eve had “biologically human ancestors” (p. 232), who belonged to a “biologically (i.e., genetically) human species” (p. 231), so it seems that he must hold that these pre-Adamite hominids had human bodies; yet he also says that these hominids lacked rational souls – which would seem to mean that for Kemp, the rational soul is not essentially the form of the human body, as the ecumenical Council of Vienne decreed it was, in 1311. I further discuss the philosophical deficiencies in Kemp’s view in my earlier post, Adam, Eve and the Concept of Humanity: A Response to Professor Kemp.

For my part, I recognize only one concept of humanity, and I would argue that of theological necessity, anything which is biologically human must also possess a rational nature (and hence, a spiritual soul). Thus I deny the theological possibility of human beasts. I also deny the temporal possibility of such creatures ever coming into existence. But because there is no law-like connection by which having a body entails being able to reason, I would acknowledge that human beasts are nomologically (and hence really) possible.

My argument proceeds as follows. The really odd thing about human beings is that they are animals with two non-bodily capacities (the capacity to reason coupled with the concomitant capacity to make free choices), in addition to their bodily capacities. How, the reader may be wondering, can there be a kind of animal which, by its very nature as an organism, should possess non-bodily capacities, in addition to its bodily capacities? The answer is that if the body’s physical powers alone are insufficient to make an organism suitable for its biological role or niche, then it must rely on additional, non-bodily powers in order to fit into that role. These powers, I would argue, can only come from a spiritual soul infused by God. It needs to be kept in mind that this soul is not a ghostly thing, separate from the body, but an entity which animates the body and experiences the world through the body, as well as physically interacting with the people and objects in its environment. However, this soul also possesses non-bodily capacities (reason and free will) which enable its bodily movements to be governed (on many, if not all occasions) by reason.

There are strong indications that the human brain has undergone an unparalleled degree of evolution in its recent history, which suggests that its evolution may have been intelligently directed. According to a report by Alok Jha in The Guardian (29 December 2004), titled, Human brain result of ‘extraordinarily fast’ evolution:

The sophistication of the human brain is not simply the result of steady evolution, according to new research. Instead, humans are truly privileged animals with brains that have developed in a type of extraordinarily fast evolution that is unique to the species.

“Simply put, evolution has been working very hard to produce us humans,” said Bruce Lahn, an assistant professor of human genetics at the University of Chicago and an investigator at the Howard Hughes Medical Institute…

Prof Lahn’s team examined the DNA of 214 genes involved in brain development in humans, macaques, rats and mice.

By comparing mutations that had no effect on the function of the genes with those mutations that did, they came up with a measure of the pressure of natural selection on those genes.

The scientists found that the human brain’s genes had gone through an intense amount of evolution in a short amount of time – a process that far outstripped the evolution of the genes of other animals.

“We’ve proven that there is a big distinction,” Prof Lahn said. “Human evolution is, in fact, a privileged process because it involves a large number of mutations in a large number of genes.

“To accomplish so much in so little evolutionary time – a few tens of millions of years – requires a selective process that is perhaps categorically different from the typical processes of acquiring new biological traits.”

Professor Lahn explains the extremely fast evolution of the human brain in terms of social co-operation. “As humans become more social, differences in intelligence will translate into much greater differences in fitness, because you can manipulate your social structure to your advantage,” he said. The abstract of his paper is here.

That explanation may well be true, up to a point. But when the degree of co-operation required presupposes that the community of individuals are able to communicate using semantic language, then it is clear that no materialistic account of brain evolution is going to do the trick: material processes, as I argued above, are incapable by their very nature of bearing a semantic meaning, in their own right.


Heidelberg man. Image courtesy of Wikipedia.

In my post, Who was Adam and when did he live? Twelve theses and a caveat (October 4, 2013), I argued that Heidelberg man (Homo heidelbergensis), a creature whose brain size (1100-1400 cubic centimeters) was more or less equivalent to ours and who appeared at least 700,000 years ago and perhaps as early as 1,300,000 years ago, must have practiced life-long monogamy. Monogamy would have been necessary for rearing children whose prolonged infancy and large, energy-demanding brains would have made it impossible for their mothers to feed them without a committed husband to provide for the family. Life-long monogamy in human beings requires a high level of self-control, which would be impossible without rationality. In his article, Paleolithic public goods games: why human culture and cooperation did not evolve in one step (Biology & Philosophy (2010) 25:53–73), Benoit Dubreuil argues that around 700,000 years ago, big-game hunting and life-long monogamy became features of human life. Dubreuil refers to these two activities as “cooperative feeding” and “cooperative breeding,” and describes them as “Paleolithic public good games” (PPGGs).

Heidelberg man is also known to have hunted big game – an activity which is highly rewarding in terms of food, if successful, but is also very dangerous for the hunters, who sometimes get gored by the animals they are trying to kill. While undertaking such a risky activity, the temptation to put one’s own life before the good of the group and to run away from a threatening-looking animal would have been high. Willingness to put one’s own life at risk for the good of the group presupposes the ability to keep selfish impulses in check, and to conceptualize the “greater good,” both of which would be impossible without rationality. Dubreuil (2010) argues that changes in the brain’s prefrontal cortex at the time when Heidelberg man first appeared were what made this impulse control possible.


A late Acheulean hand-axe from Boxgrove, England, made by Heidelberg man. Image courtesy of Wikipedia.

The Late Acheulean tools made by Heidelberg man point to the fact that he was capable of using language. A 2011 essay by Dietrich Stout and Thierry Chaminade, titled, Stone tools, language and the brain in human evolution (Philosophical Transactions of the Royal Society B, 12 January 2012, vol. 367, no. 1585, pp. 75-87) lends support to the view that the Late Acheulean tools made by Heidelberg man required a high level of cognitive sophistication to produce, in addition to long hours of training for novices. This training would have included the use of intentional communication, which the authors characterize as “purposeful communication through demonstrations intended to impart generalizable (i.e. semantic) knowledge about technological means and goals, without necessarily involving pantomime.” It is still an open question, however, whether the prehistoric creators of Late Acheulean tools would have needed to express the semantic content of their thoughts in sentences, when training novices.

Now, Professor Kemp and I would both agree that having a big brain doesn’t automatically give you the ability to use language, engage in long-range planning, or feel empathy for other individuals (which, as I pointed out in my post, other animals do not genuinely feel). As we have seen, brains are by their very nature incapable of semantic reasoning. Thus Heidelberg man was a creature who naturally required the use of incorporeal reason, in order to survive as a species, and hence, in order to be the kind of creature that it was.

I conclude that since God does nothing in vain, He would not have made a creature requiring the use of reason in order to survive, without endowing such a creature with reason. God must therefore have endowed each individual in the species Homo heidelbergensis (Heidelberg man) with a spiritual and immortal soul, in order for it to flourish, because its body was the kind of body that naturally required a spiritual soul.

It follows from the above considerations that while the existence of cognitive duplicates, considered in themselves, is really (i.e. metaphysically) possible, their existence is neither temporally nor theologically possible.

Conclusion

I hope that this article of mine will stir up a lively debate among readers, and I welcome input from all sides. I imagine few people will agree with my conclusions, but if you disagree, please let us hear why. Let the debate begin!