From the days of Plato in The Laws, Bk X on, design thinkers have usually been inclined to think in terms of necessity, chance and choice when they analyse causal factors. In recent days, though, the reality of chance and its proper definition have been challenged, and not just here at UD.

A glance at the target shown will reveal the typical kind of scatter that is in effect uncontrollable, even after careful and skilled efforts to achieve accuracy and precision. Here, the shooter-gun-range combination is clearly hitting to the left and slightly high [NW quadrant], with a significant amount of scatter. We see both a want of accuracy and a want of precision in the result, and could, if we wished, analyse the result statistically to generate a model based on random variables. Such analysis is routine in scientific contexts. That is, chance appears to be real: it shows up as a causal factor leading to observable effects that are distinguishable both from bias and from proximity to an intended target. Moreover, it can plainly be studied using standard scientific methods.
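The statistical analysis mentioned above can be sketched in a few lines of Python. This is a minimal illustration, not a model of any real shooter or rifle: the bias and scatter values (`BIAS_X`, `BIAS_Y`, `SCATTER`) and the Gaussian error model are assumptions chosen for the example. The mean offset of the shots estimates the bias (want of accuracy), while their standard deviation about that mean estimates the scatter (want of precision).

```python
import random
import statistics

# Assumed, illustrative parameters (arbitrary units, e.g. cm):
# a systematic bias to the left and slightly high (NW quadrant) plus random scatter.
BIAS_X, BIAS_Y = -3.0, 1.0
SCATTER = 2.0

def fire_shots(n, seed=1):
    """Simulate n shots aimed at the bullseye (0, 0) with bias plus Gaussian scatter."""
    rng = random.Random(seed)
    return [(rng.gauss(BIAS_X, SCATTER), rng.gauss(BIAS_Y, SCATTER)) for _ in range(n)]

def analyse(shots):
    """Separate accuracy (mean offset = bias) from precision (spread about the mean)."""
    xs = [x for x, _ in shots]
    ys = [y for _, y in shots]
    bias = (statistics.fmean(xs), statistics.fmean(ys))
    spread = (statistics.stdev(xs), statistics.stdev(ys))
    return bias, spread

if __name__ == "__main__":
    bias, spread = analyse(fire_shots(1000))
    print(f"estimated bias (x, y):    ({bias[0]:.2f}, {bias[1]:.2f})")
    print(f"estimated scatter (x, y): ({spread[0]:.2f}, {spread[1]:.2f})")
```

With a fixed seed the run is repeatable, and the recovered bias and scatter land close to the assumed values, which is the sense in which systematic bias and chance scatter can be told apart statistically.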

But, some would argue, once the gun, shooter, target and range are set, and the trigger is pulled, the result is a foreordained conclusion.

See, no need for chance.

Especially, chance conceived as “Events and outcomes entirely unforeseen, undirected and unintended by any mind.”

However, this is not the only view of chance that is reasonable, especially in a scientific context.

Chance causal factors, as opposed to “events” (which are outcomes), can be seen as relevant in three main senses:

1: For macroscopic systems, we often have uncontrolled or even uncontrollable fine factors in a situation that vary across a possible range and cumulatively trigger or influence the course of events, so that we see outcomes that are highly variable under similar initial circumstances [i.e. “highly contingent”] and that tend to fit statistical models built on mathematical random variables. (The above target is a good example, for the shooter presumably tried to hit the black ring with each shot, but could not fully compensate for factors leading to both bias and scatter. This is common in experimental work.)

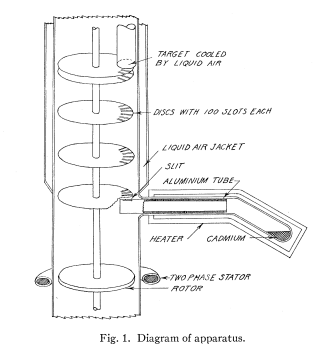

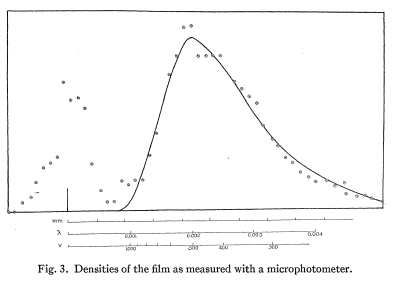

2: In microscopic phenomena like the behaviour of a collection of gas molecules, there is a pattern of similar, empirically random statistical distributions of positions, speeds, energies and the like, and those distributions give rise to temperature, pressure, heat capacity, diffusion effects, Brownian motion, viscosity effects, etc. (One can even measure the distribution of molecular speeds with suitable apparatus and see that it is pretty much as the random models predict. This is of course no deductive proof of the reality of randomness and chance in the behaviour of molecules, but it is substantial and decisive empirical warrant for understanding the behaviour on those terms, and that warrant is taken seriously.)

3: In quantum cases like radioactive decay, there seems to be an inherent random distribution that gives rise to observed decay patterns of populations of radioactive atoms and many other phenomena.
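Senses 2 and 3 can be illustrated with a short Python sketch. It assumes the ideal-gas Maxwell-Boltzmann model (each velocity component an independent Gaussian with variance kT/m) and a simple decay model in which each atom has a fixed, assumed probability `p_decay` of decaying per time step; the choice of N2-like molecules at 300 K and p = 0.05 is illustrative only. The point is that individually unpredictable events add up to population-level regularities: a stable mean molecular speed matching sqrt(8kT/(πm)), and an exponential decay curve.

```python
import math
import random

K_B = 1.380649e-23          # Boltzmann constant, J/K
AMU = 1.66053906660e-27     # atomic mass unit, kg

# Sense 2: molecular speeds in an ideal gas.
def sample_speeds(n, temperature_k, mass_kg, rng):
    """Draw n speeds, assuming each velocity component is an independent
    Gaussian with variance kT/m (the Maxwell-Boltzmann model)."""
    sigma = math.sqrt(K_B * temperature_k / mass_kg)
    return [math.sqrt(sum(rng.gauss(0.0, sigma) ** 2 for _ in range(3)))
            for _ in range(n)]

def maxwell_mean_speed(temperature_k, mass_kg):
    """Mean speed predicted by the Maxwell distribution: sqrt(8kT / (pi m))."""
    return math.sqrt(8 * K_B * temperature_k / (math.pi * mass_kg))

# Sense 3: radioactive decay of a population of atoms.
def simulate_decay(n_atoms, p_decay, steps, rng):
    """Each surviving atom decays independently with probability p_decay per step.
    Returns the survivor count after each step, starting with step 0."""
    survivors, history = n_atoms, [n_atoms]
    for _ in range(steps):
        survivors -= sum(1 for _ in range(survivors) if rng.random() < p_decay)
        history.append(survivors)
    return history

def expected_survivors(n_atoms, p_decay, t):
    """Population-level prediction N(t) = N0 * (1 - p)^t, i.e. exponential decay."""
    return n_atoms * (1 - p_decay) ** t

if __name__ == "__main__":
    rng = random.Random(2024)
    T, m = 300.0, 28 * AMU   # assumed example: N2-like molecules at 300 K
    speeds = sample_speeds(100_000, T, m, rng)
    print(f"mean speed: simulated {sum(speeds) / len(speeds):.1f} m/s, "
          f"Maxwell {maxwell_mean_speed(T, m):.1f} m/s")

    history = simulate_decay(10_000, 0.05, 40, rng)   # assumed p = 0.05 per step
    for t in (0, 10, 20, 30, 40):
        print(f"t={t:2d}: simulated {history[t]:5d}, "
              f"exponential model {expected_survivors(10_000, 0.05, t):7.1f}")
```

No individual molecule or atom is predicted here; only the aggregate statistics are, which is exactly the level at which the random models are tested against measurement.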

Does accepting the reality of chance phenomena as a causal factor on these terms commit us to the concept that the factors are unforeseen by and unforeseeable to any mind?

Not at all.

The mind usually in view here in the first instance is the human mind. But the fact that we may not foresee the exact circumstances, or that we (being both finite and fallible) cannot trace their exact influences and so predict outcomes in fine detail, does not imply that no one could. Indeed, if we consider the mind of God (putting on metaphysical caps for a moment), we see that he is usually deemed to be present everywhere and every-when, so “fore-sight” is not applicable to him, before whom all things are present. But just because God may know the course of events does not mean that he is the triggering cause or the determining cause. If he has given freedom of choice to humans, as the foundation of responsible decision and of the capacity to love [the root, in turn, of moral virtue], why should he not also give atoms freedom of action within laws? Would that trammel his sovereignty in any material way?

I doubt it.

D J Bartholomew has aptly summed up my perspective in these words from his preface to God of Chance:

. . . chance has to be seen as within the Providence of God. It is not something which requires the abolition of theism nor is it an illusion. We increasingly use chance as a tool in scientific work and it would surely be surprising that God had not got there before us. [p. vii, 2005 online edition; Emphasis original.]

All that we need for the sort of scientific, mathematical and empirical work above is to recognise that there are often fine-level circumstances and factors that may vary in ways uncontrolled or uncontrollable by, or even unknown to, us; that these yield considerable contingency in outcomes; and that those outcomes follow statistical, probabilistic distributions. This we may offer as a working scientist’s first, rough description of chance as a causal factor. The paradigm example would be a dropped, tumbling, fair six-sided cubical die that, thanks to the initial circumstances that happen to influence the non-linear, fine-difference-amplifying action of its eight corners and twelve edges, settles to read from the set {1, 2, 3, 4, 5, 6} effectively at random, in this case with a flat distribution. The sum of two such dice ranges from 2 to 12 and peaks at 7.
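The two-dice claim can be checked exactly by enumerating all 36 equally likely outcomes. A minimal Python sketch, assuming ideally fair dice:

```python
from collections import Counter
from fractions import Fraction
from itertools import product

FACES = range(1, 7)  # a fair six-sided die: each face has probability 1/6

def sum_distribution(n_dice=2):
    """Exact distribution of the sum of n fair dice, by enumerating every outcome."""
    counts = Counter(sum(roll) for roll in product(FACES, repeat=n_dice))
    total = 6 ** n_dice
    return {s: Fraction(c, total) for s, c in sorted(counts.items())}

if __name__ == "__main__":
    for s, p in sum_distribution(2).items():
        print(f"{s:2d}: {p}")
```

Running it lists each sum with its exact probability: 7 comes out at 1/6 (6 of the 36 outcomes), with the probabilities falling off symmetrically toward 2 and 12, while a single die (`n_dice=1`) gives the flat 1/6 distribution.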

So, we have a way to understand chance in empirical contexts that gives it real causal effect and without running into unnecessary metaphysical thickets. That is (scooping out and refining a bit on the description above):

Chance causal factors: clusters of fine-level initial circumstances and factors in observable situations that may vary in ways uncontrolled or uncontrollable by — or even unknown to — us [i.e. the scientific investigators], that yield considerable contingency in observable outcomes under closely similar initial conditions, and that follow statistical, probabilistic distributions.

This working definition is close to two senses in the Collins English Dictionary:

chance [tʃɑːns] n. 1. a. the unknown and unpredictable element that causes an event to result in a certain way rather than another, spoken of as a real force . . . . 5. (Mathematics & Measurements / Statistics) the extent to which an event is likely to occur; probability [HarperCollins Publishers, 2003]

Applying this to the origin-of-life challenge, the usual approach is to imagine, with Darwin, as he wrote in his 1871 letter to Hooker:

It is often said that all the conditions for the first production of a living organism are present, which could ever have been present. But if (and Oh! what a big if!) we could conceive in some warm little pond, with all sorts of ammonia and phosphoric salts, light, heat, electricity, etc., present, that a protein compound was chemically formed ready to undergo still more complex changes, at the present day such matter would be instantly devoured or absorbed, which would not have been the case before living creatures were formed . . .

Such a prebiotic soup, whether in his pond, at ocean thermal vents, on a moon of Jupiter or in a comet, would certainly be subject to standard chemical reaction kinetics and thermodynamics, which are sharply influenced by the (recall, empirically observed) random agitation of molecules that, among other things, gives rise to what we measure as temperature. So we are indeed looking at the need to assemble key organic macromolecules by chance-driven thermodynamic processes, and hence at the need to search vast configuration spaces of contingent possibilities, if we are to explain the origin of life based on carbon-chemistry macromolecules organised in complex, highly specific ways that use contingency but defy the astonishing odds a chance-based process would face.

Berlinski’s 2006 critique is apt:

At the conclusion of a long essay, it is customary to summarize what has been learned. In the present case, I suspect it would be more prudent to recall how much has been assumed:

First, that the pre-biotic atmosphere was chemically reductive; second, that nature found a way to synthesize cytosine; third, that nature also found a way to synthesize ribose; fourth, that nature found the means to assemble nucleotides into polynucleotides; fifth, that nature discovered a self-replicating molecule; and sixth, that having done all that, nature promoted a self-replicating molecule into a full system of coded chemistry.

These assumptions are not only vexing but progressively so, ending in a serious impediment to thought. That, indeed, may be why a number of biologists have lately reported a weakening of their commitment to the RNA world altogether, and a desire to look elsewhere for an explanation of the emergence of life on earth. “It’s part of a quiet paradigm revolution going on in biology,” the biophysicist Harold Morowitz put it in an interview in New Scientist, “in which the radical randomness of Darwinism is being replaced by a much more scientific law-regulated emergence of life.”

Morowitz is not a man inclined to wait for the details to accumulate before reorganizing the vista of modern biology. In a series of articles, he has argued for a global vision based on the biochemistry of living systems rather than on their molecular biology or on Darwinian adaptations. His vision treats the living system as more fundamental than its particular species, claiming to represent the “universal and deterministic features of any system of chemical interactions based on a water-covered but rocky planet such as ours.”

This view of things – metabolism first, as it is often called – is not only intriguing in itself but is enhanced by a firm commitment to chemistry and to “the model for what science should be.” It has been argued with great vigor by Morowitz and others. It represents an alternative to the RNA world. It is a work in progress, and it may well be right. Nonetheless, it suffers from one outstanding defect. There is as yet no evidence that it is true . . .

This is, of course, the basis for the tendency of advocates of evolutionary materialism to dismiss the origin-of-life (OOL) question as irrelevant, or to trot out imaginary scenarios in which autocatalysis occurs and some short molecules replicate themselves under highly controlled circumstances set up by highly intelligent chemists. On the contrary, the significance of the OOL question is this: we here see that the Darwinian tree of life has no credible root.

A rootless tree, of course, cannot stand in the storms.

Going on, when we must account for the origin of novel body plans, we meet the claim that accumulated accidents, filtered through differences in reproductive success, can account for the diversification of life from trees and bees to birds, turtles, bats, whales, apes and us. Yet, after 150 years of effort, with a quarter of a million fossil species and countless millions of specimens from all over the world, there is a paucity of fossil evidence for such body-plan origination by chance variation, natural selection and the like, as Gould admitted in his The Structure of Evolutionary Theory (2002):

. . . long term stasis following geologically abrupt origin of most fossil morphospecies, has always been recognized by professional paleontologists. [p. 752.]

. . . . The great majority of species do not show any appreciable evolutionary change at all. These species appear in the section [first occurrence] without obvious ancestors in the underlying beds, are stable once established and disappear higher up without leaving any descendants. [p. 753.]

. . . . proclamations for the supposed ‘truth’ of gradualism – asserted against every working paleontologist’s knowledge of its rarity – emerged largely from such a restriction of attention to exceedingly rare cases under the false belief that they alone provided a record of evolution at all! The falsification of most ‘textbook classics’ upon restudy only accentuates the fallacy of the ‘case study’ method and its root in prior expectation rather than objective reading of the fossil record. [p. 773.]

More importantly, as Meyer pointed out in his 2004 PBSW article that passed proper peer review by “renowned” scientists:

In order to explain the origin of the Cambrian animals, one must account not only for new proteins and cell types, but also for the origin of new body plans . . . Mutations in genes that are expressed late in the development of an organism will not affect the body plan. Mutations expressed early in development, however, could conceivably produce significant morphological change (Arthur 1997:21) . . . [but] processes of development are tightly integrated spatially and temporally such that changes early in development will require a host of other coordinated changes in separate but functionally interrelated developmental processes downstream. For this reason, mutations will be much more likely to be deadly if they disrupt a functionally deeply-embedded structure such as a spinal column than if they affect more isolated anatomical features such as fingers (Kauffman 1995:200) . . . McDonald notes that genes that are observed to vary within natural populations do not lead to major adaptive changes, while genes that could cause major changes–the very stuff of macroevolution–apparently do not vary. In other words, mutations of the kind that macroevolution doesn’t need (namely, viable genetic mutations in DNA expressed late in development) do occur, but those that it does need (namely, beneficial body plan mutations expressed early in development) apparently don’t occur. [“The origin of biological information and the higher taxonomic categories,” in Proceedings of the Biological Society of Washington 117(2):213-239. 2004.]

Just for clarity, Wiki gives a summary definition of “mutation”:

In molecular biology and genetics, mutations are changes in a genomic sequence: the DNA sequence of a cell’s genome or the DNA or RNA sequence of a virus. They can be defined as sudden and spontaneous changes in the cell. Mutations are caused by radiation, viruses, transposons and mutagenic chemicals, as well as errors that occur during meiosis or DNA replication.[1][2][3] They can also be induced by the organism itself, by cellular processes such as hypermutation.

Mutation can result in several different types of change in sequences (DNA); these can either have no effect, alter the product of a gene, or prevent the gene from functioning properly or completely. Studies in the fly Drosophila melanogaster suggest that if a mutation changes a protein produced by a gene, this will probably be harmful, with about 70 percent of these mutations having damaging effects, and the remainder being either neutral or weakly beneficial.[4] Due to the damaging effects that mutations can have on genes, organisms have mechanisms such as DNA repair to remove mutations.[1]

So, a significant proportion of mutations are assignable to chance circumstances in the sense already discussed, e.g. radiation ionising H2O molecules that then react with the DNA, or chemicals tearing up and rearranging it. Or, just plain “errors,” as noted.

So also, we can clearly see that chance circumstances leading to statistically distributed outcomes, are assigned a causative role in biological evolution. Indeed, we may reduce things to an expression:

i: chance mutations are the usual source cited for many of the chance variations that are held to feed into

ii: differential reproductive success (natural selection) across competing populations [note: this SUBTRACTS “less fit” subpopulations, i.e. it removes information from the gene pool; so it is a misnomer to summarise the Darwinian model of evolution by this subtractive element, since “survival of the fittest” does not explain their arrival], thus

iii: yielding descent with modification across time; i.e. evolution; where

iv: we may summarise this primary Darwinian evolutionary mechanism in the “equation”:

CV + DRS/NS → DWM = Evo

And of course, many evolutionary materialist thinkers have a very high estimation of the transformative capacity of such chance driven processes, e.g. as UD’s News snipped from Monod for us:

We call these events [mutations] accidental; we say they are random occurrences. And since they constitute the only possible source of modification in the genetic text, itself the sole repository of the organism’s hereditary structures, it necessarily follows that chance alone is at the source of every innovation, of all creation in the biosphere. Pure chance, absolutely free but blind, at the very root of the stupendous edifice of evolution: this central concept of modern biology is no longer one among other possible or even conceivable hypotheses. It is today the sole conceivable hypothesis, the only one that squares with observed and tested fact. And nothing warrants the supposition – or the hope – that on this score our position is likely ever to be revised. [Chance and Necessity. An Essay on the Natural Philosophy of Modern Biology (New York: Knopf, 1971)]

But, does chance have that sort of capability? I think not.

To see why, let us look at a thought experiment based on a 73-member string of 128-sided dice similar to the 100-sided Zocchihedron:

______________

>>Thought Expt:

To illustrate necessity vs chance vs choice, in a sense relevant to the concept of CSI, and more particularly FSCI.

1: Imagine a 128-sided die (similar to the 100-sided Zocchihedra that have been made, but somehow made fully fair) with the character set for the 7-bit ASCII codes on it.

2: Set up a tray holding a string of 73 such dice, equivalent to some 500 bits of information storage (73 × 7 = 511 bits), or upwards of 10^150 possible states.

3: Convert the 10^57 atoms of our solar system into such trays, and toss them for c. 5 bn years, a typical estimate for the age of the solar system, scanning each time for a coherent 73-character message in English, such as the first 73 characters of this post or a similar message.

4: The number of runs will be well under 10^102, so the trays could not sample more than about 1 in 10^48 of the 10^150 or so configurations of the system.

5: So, if something is significantly rare in the space W of possibilities, i.e. it is a cluster of outcomes E comprising a narrow and unrepresentative zone T, the set of samples is maximally unlikely to hit on any E in T.

6: And yet the first 73 characters of this post were composed by intelligent choice in a few minutes, indeed I think less than one.

7: We thus see how chance contingency is deeply challenged on the scope of the resources of our solar system, to create an instance of such FSCI, while choice directed by purposeful intelligence routinely does such. So, we see why FSCI is seen as a reliable sign of choice not chance.

8: Likewise, if we were to take the dice and drop them, reliably they would fall. Indeed we can characterise the relevant differential equations that under given initial circumstances will reliably predict the observed natural regularity of falling.

9: Thus we see mechanical necessity as characterised by natural regularities of low contingency.

10: This leads to the explanatory filter used in design theory, whereby a natural regularity (low contingency) in an aspect of a phenomenon leads to the explanation: law, i.e. mechanical necessity.

11: High contingency indicates that the relevant aspect is driven by chance and/or choice, with distinguishing signs such as FSCI, per the exercise just above, reliably highlighting choice as the best explanation.>>

______________
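The arithmetic of steps 2 to 4, and the decision logic of steps 9 to 11, can be sketched in Python. The figures (128 sides, 73 dice, at most 10^102 runs) are those of the thought experiment; the `explanatory_filter` function is a hypothetical toy encoding of the filter as described above, and its use of a 500-bit threshold as the cut-off is an assumption drawn from step 2, not a published specification.

```python
import math

# Figures from the thought experiment (steps 1 to 4).
SIDES = 128            # one 7-bit ASCII character per face
DICE_PER_TRAY = 73
RUNS_LOG10 = 102       # upper bound on runs: "well under 10^102"

def tray_bits(sides=SIDES, dice=DICE_PER_TRAY):
    """Storage capacity of one tray in bits: dice * log2(sides)."""
    return dice * math.log2(sides)

def log10_states(bits):
    """log10 of the number of configurations, i.e. log10(2^bits)."""
    return bits * math.log10(2)

def log10_fraction_sampled(bits, runs_log10=RUNS_LOG10):
    """log10 of the largest fraction of the space the runs could cover."""
    return runs_log10 - log10_states(bits)

def explanatory_filter(high_contingency, specified, bits, threshold_bits=500):
    """Hypothetical toy version of steps 9 to 11 (threshold is an assumption):
    low contingency -> necessity (law); high contingency plus a functionally
    specified result at or beyond the threshold -> choice; otherwise -> chance."""
    if not high_contingency:
        return "necessity"
    if specified and bits >= threshold_bits:
        return "choice"
    return "chance"

if __name__ == "__main__":
    bits = tray_bits()
    print(f"tray capacity: {bits:.0f} bits, about 10^{log10_states(bits):.1f} states")
    print(f"500 bits alone: about 10^{log10_states(500):.1f} states")
    print(f"largest fraction sampled (500-bit space): 10^{log10_fraction_sampled(500):.1f}")
    print(explanatory_filter(high_contingency=False, specified=False, bits=0))    # falling dice
    print(explanatory_filter(high_contingency=True, specified=False, bits=bits))  # random tray
    print(explanatory_filter(high_contingency=True, specified=True, bits=bits))   # 73-char text
```

The computed fraction, roughly 1 in 10^48.5 for a 500-bit space, agrees with step 4's figure of 1 in 10^48; using the tray's full 511-bit capacity would make it smaller still.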

In short, it is reasonable and empirically well warranted to examine causal patterns tracing to necessity and/or chance and/or choice, and to investigate them scientifically on tested, empirically reliable signs. END

===========

F/N: Paper on the original empirical verification of the Maxwell distribution, Eldridge, 1927:

Fig. a: Apparatus for velocity filtering and measuring molecular beam intensity

Fig. b: Initial results

PS: The online book, God of Chance, by D J Bartholomew (2005 electronic update on the 1984 SCM Press edition), is well worth reading.