[UD ID Founds Series, cf. Bartlett on IC]

Going onward, the proposed spontaneous, chance-plus-necessity origin of carbon-chemistry, aqueous-medium, cell-based life would imply the spontaneous creation of the observed self-duplication mechanism based on a von Neumann Self-Replicator [vNSR], which is:

a] irreducibly complex,

b] functionally specific,

c] code-based, with use of data structures on a storage “tape” [i.e. a language-based phenomenon],

d] algorithmic and

e] dependent on complex, functionally specific, highly coordinated, organised implementing nanomolecular machinery.

Now, following von Neumann generally (and as previously noted in brief), such a machine requires . . .

Also, parts (ii), (iii) and (iv) are each necessary for, and together jointly sufficient to implement, a self-replicating machine with an integral von Neumann universal constructor. That is, we see here an irreducibly complex set of core components that must all be present in a properly organised fashion for a successful self-replicating machine to exist. [Take just one core part out, and self-replicating functionality ceases: the self-replicating machine is irreducibly complex (IC).]

This irreducible complexity is compounded by the requirement (i) for codes: organised symbols and rules that specify both the steps to take and the formats for storing the information that expresses the required algorithms and data structures. The only known source of languages and of algorithms is intelligence.

Immediately, that sort of complex specificity and integrated, irreducibly complex organisation mean that we are looking at islands of organised function for both the machinery and the information in the wider sea of possible (but overwhelmingly mostly non-functional) configurations of components.

In short, outside such functionally specific — thus, isolated — information-rich hot (or, “target”) zones, want of correct components and/or of proper organisation and/or co-ordination will block function from emerging or being sustained across time from generation to generation. That is, until you reach the shores of an island of function, there simply is no hill to climb. So, once the set of possible configurations is large enough and the islands of function are credibly sufficiently specific/isolated, it is unreasonable to expect such function to arise from chance, or from chance circumstances driving blind natural forces under the known laws of nature in a regime of trial and error/success.
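The "no hill to climb" point can be made concrete with a toy landscape. The sketch below is an illustration under stated assumptions, not a model of any real biochemical system: configurations are hypothetical 40-bit strings, fitness is zero everywhere except inside a small Hamming-distance "island" around one target, and the search is plain steepest-ascent hill-climbing over single-bit changes. It shows only what the paragraph asserts for a landscape of that shape; the shape itself is the assumption.

```python
def hamming(a, b):
    """Number of positions at which two equal-length tuples differ."""
    return sum(x != y for x, y in zip(a, b))


def island_fitness(x, target, radius):
    """Hypothetical landscape: zero outside a Hamming ball of `radius`
    around `target`, graded (closer is better) only inside it."""
    d = hamming(x, target)
    return len(target) - d if d <= radius else 0


def neighbours(x):
    """All configurations reachable by flipping exactly one bit."""
    for i in range(len(x)):
        yield x[:i] + (1 - x[i],) + x[i + 1:]


def steepest_ascent(start, fitness, max_steps=1000):
    """Move to the best single-bit neighbour while it strictly improves fitness."""
    current, steps = start, 0
    while steps < max_steps:
        best = max(neighbours(current), key=fitness)
        if fitness(best) <= fitness(current):
            break  # flat plain or local peak: no uphill direction exists
        current, steps = best, steps + 1
    return current, steps


N, RADIUS = 40, 3
TARGET = (1,) * N


def fitness_here(x):
    return island_fitness(x, TARGET, RADIUS)


outside = (0,) * N                               # 40 bits from the target
on_shore = (0,) * RADIUS + (1,) * (N - RADIUS)   # 3 bits from the target

print(steepest_ascent(outside, fitness_here)[1])   # → 0 (no gradient to follow)
print(steepest_ascent(on_shore, fitness_here)[1])  # → 3 (climbs to the peak)
```

From the "shore" the climber reaches the peak in three steps; from the open plain every neighbour scores zero, so it never moves.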

And, since such a vNSR is a requisite for reproduction of the sort of cell-based life we actually observe, absent a chance plus necessity mechanism backed up by observations, evolutionary materialistic accounts of the origin and diversification of life lack both a root and a trunk.

Other than in the imaginative reconstructions of those who teach such speculations as “science.”

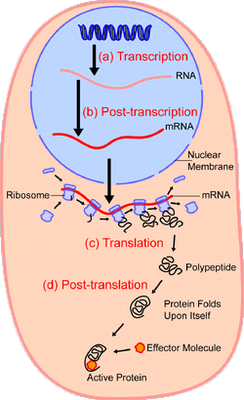

[U/D, Dec 2, 2011] As an illustration, let us examine the protein synthesis process:

Video zoom-in, courtesy Vuk Nikolic:

[vimeo 31830891]
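As a rough sketch of the code-based, tape-reading character of this step, the Python below reads an mRNA string three bases at a time, from the first AUG start codon to the first stop codon, looking each codon up in a table. The table is deliberately partial (only the standard-genetic-code entries the example needs), `translate` is a hypothetical helper rather than any bioinformatics library's API, and the real process (ribosome, tRNAs, release factors) is far more involved than a dictionary lookup.

```python
# Partial table from the standard genetic code (RNA alphabet); illustrative only.
CODON_TABLE = {
    "AUG": "Met", "UUU": "Phe", "UUC": "Phe", "GGC": "Gly",
    "GCU": "Ala", "AAA": "Lys", "GAA": "Glu", "UGG": "Trp", "CUG": "Leu",
    "UAA": "STOP", "UAG": "STOP", "UGA": "STOP",
}


def translate(mrna: str) -> list[str]:
    """Read codons from the first AUG until a stop codon (or the end of the string)."""
    start = mrna.find("AUG")
    if start == -1:
        return []  # no start codon: nothing is read
    peptide = []
    for i in range(start, len(mrna) - 2, 3):
        codon = mrna[i:i + 3]
        residue = CODON_TABLE.get(codon)
        if residue is None:
            raise KeyError(f"codon {codon} is not in this partial table")
        if residue == "STOP":
            break
        peptide.append(residue)
    return peptide


print(translate("GGAUGUUUGGCAAAUGGUAAGC"))  # → ['Met', 'Phe', 'Gly', 'Lys', 'Trp']
```

The same string read from a different starting offset would yield a different codon sequence, which is why the start codon, reading frame and stop signals are part of the format, not just the content.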

This problem extends to the issue of missing main branches in the Darwinian tree of life along the usual geochronological timeline, as we can see from Gould’s key observations on speciation (the gateway to body-plan-level evolution) and from his similar remarks on the trade secret of paleontology:

. . . long term stasis following geologically abrupt origin of most fossil morphospecies, has always been recognized by professional paleontologists. [[The Structure of Evolutionary Theory (2002), p. 752.]

. . . . The great majority of species do not show any appreciable evolutionary change at all. These species appear in the section [[first occurrence] without obvious ancestors in the underlying beds, are stable once established and disappear higher up without leaving any descendants. [[p. 753.]

. . . . proclamations for the supposed ‘truth’ of gradualism – asserted against every working paleontologist’s knowledge of its rarity – emerged largely from such a restriction of attention to exceedingly rare cases under the false belief that they alone provided a record of evolution at all! The falsification of most ‘textbook classics’ upon restudy only accentuates the fallacy of the ‘case study’ method and its root in prior expectation rather than objective reading of the fossil record. [[p. 773.]

And,

“The absence of fossil evidence for intermediary stages between major transitions in organic design, indeed our inability, even in our imagination, to construct functional intermediates in many cases, has been a persistent and nagging problem for gradualistic accounts of evolution.” [[Stephen Jay Gould (Professor of Geology and Paleontology, Harvard University), ‘Is a new and general theory of evolution emerging?’ Paleobiology, vol. 6(1), January 1980, p. 127.]

“All paleontologists know that the fossil record contains precious little in the way of intermediate forms; transitions between the major groups are characteristically abrupt.” [[Stephen Jay Gould ‘The return of hopeful monsters’. Natural History, vol. LXXXVI(6), June-July 1977, p. 24.]

“The extreme rarity of transitional forms in the fossil record persists as the trade secret of paleontology. The evolutionary trees that adorn our textbooks have data only at the tips and nodes of their branches; the rest is inference, however reasonable, not the evidence of fossils. Yet Darwin was so wedded to gradualism that he wagered his entire theory on a denial of this literal record:

The geological record is extremely imperfect and this fact will to a large extent explain why we do not find intermediate varieties, connecting together all the extinct and existing forms of life by the finest graduated steps [[ . . . . ] He who rejects these views on the nature of the geological record will rightly reject my whole theory.[[Cf. Origin, Ch 10, “Summary of the preceding and present Chapters,” also see similar remarks in Chs 6 and 9.]

Darwin’s argument still persists as the favored escape of most paleontologists from the embarrassment of a record that seems to show so little of evolution. In exposing its cultural and methodological roots, I wish in no way to impugn the potential validity of gradualism (for all general views have similar roots). I wish only to point out that it was never “seen” in the rocks.

Paleontologists have paid an exorbitant price for Darwin’s argument. We fancy ourselves as the only true students of life’s history, yet to preserve our favored account of evolution by natural selection we view our data as so bad that we never see the very process we profess to study.” [[Stephen Jay Gould ‘Evolution’s erratic pace’. Natural History, vol. LXXXVI(5), May 1977, p. 14.] [[HT: Answers.com]

In short, ever since Darwin’s day, the overwhelmingly obvious general pattern observed in the fossil record has always been sudden appearance, morphological stasis, and disappearance (or continuation into the modern world). The Cambrian revolution is a classic case in point, as Meyer highlighted in his recent PBSW paper (which passed proper peer review by “renowned” scientists):

The Cambrian explosion represents a remarkable jump in the specified complexity or “complex specified information” (CSI) of the biological world. For over three billion years, the biological realm included little more than bacteria and algae (Brocks et al. 1999). Then, beginning about 570-565 million years ago (mya), the first complex multicellular organisms appeared in the rock strata, including sponges, cnidarians, and the peculiar Ediacaran biota (Grotzinger et al. 1995). Forty million years later, the Cambrian explosion occurred (Bowring et al. 1993) . . . One way to estimate the amount of new CSI that appeared with the Cambrian animals is to count the number of new cell types that emerged with them (Valentine 1995:91-93) . . . the more complex animals that appeared in the Cambrian (e.g., arthropods) would have required fifty or more cell types . . . New cell types require many new and specialized proteins. New proteins, in turn, require new genetic information. Thus an increase in the number of cell types implies (at a minimum) a considerable increase in the amount of specified genetic information. Molecular biologists have recently estimated that a minimally complex single-celled organism would require between 318 and 562 kilobase pairs of DNA to produce the proteins necessary to maintain life (Koonin 2000). More complex single cells might require upward of a million base pairs. Yet to build the proteins necessary to sustain a complex arthropod such as a trilobite would require orders of magnitude more coding instructions. The genome size of a modern arthropod, the fruitfly Drosophila melanogaster, is approximately 180 million base pairs (Gerhart & Kirschner 1997:121, Adams et al. 2000). Transitions from a single cell to colonies of cells to complex animals represent significant (and, in principle, measurable) increases in CSI . . . .

In order to explain the origin of the Cambrian animals, one must account not only for new proteins and cell types, but also for the origin of new body plans . . . Mutations in genes that are expressed late in the development of an organism will not affect the body plan. Mutations expressed early in development, however, could conceivably produce significant morphological change (Arthur 1997:21) . . . [but] processes of development are tightly integrated spatially and temporally such that changes early in development will require a host of other coordinated changes in separate but functionally interrelated developmental processes downstream. For this reason, mutations will be much more likely to be deadly if they disrupt a functionally deeply-embedded structure such as a spinal column than if they affect more isolated anatomical features such as fingers (Kauffman 1995:200) . . . McDonald notes that genes that are observed to vary within natural populations do not lead to major adaptive changes, while genes that could cause major changes–the very stuff of macroevolution–apparently do not vary. In other words, mutations of the kind that macroevolution doesn’t need (namely, viable genetic mutations in DNA expressed late in development) do occur, but those that it does need (namely, beneficial body plan mutations expressed early in development) apparently don’t occur.
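To put the quoted genome figures side by side, the short calculation below converts base pairs into raw storage capacity at 2 bits per base pair (four possible bases, so log2 4 = 2) and compares the fruit fly figure with the high minimal-cell estimate. This is a minimal sketch that takes the quoted numbers at face value; raw capacity is only an upper bound on Shannon information and is not a measure of CSI as Meyer uses the term. The helper name `raw_capacity_mb` is hypothetical.

```python
BITS_PER_BASE_PAIR = 2  # four possible bases, so at most log2(4) = 2 bits each


def raw_capacity_mb(base_pairs):
    """Upper bound on raw storage, in megabytes (10**6 bytes)."""
    return base_pairs * BITS_PER_BASE_PAIR / 8 / 1_000_000


# Figures as quoted in the passage above.
GENOMES = {
    "minimal cell, low estimate (Koonin 2000)": 318_000,
    "minimal cell, high estimate (Koonin 2000)": 562_000,
    "Drosophila melanogaster (approx.)": 180_000_000,
}

for name, bp in GENOMES.items():
    print(f"{name}: {raw_capacity_mb(bp):.3f} MB")

ratio = (GENOMES["Drosophila melanogaster (approx.)"]
         / GENOMES["minimal cell, high estimate (Koonin 2000)"])
print(f"fruit fly / high minimal estimate: about {ratio:.0f}x")
```

A ratio of roughly 320 is about two and a half orders of magnitude, which is the scale of the gap the quoted passage describes.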

In short, so far as observations are concerned, the main branches of the Darwinian Tree of Life are also missing without leave. What we actually see are twig-level variations within complex, specifically functional forms. It is these twig-like variations that are then question-beggingly extrapolated backwards into the imagined grand tree of life.

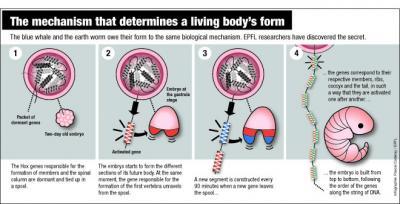

In addition [Oct 15, HT UD News], some breaking news at Sci Daily highlights the algorithmic specificity and patent programming of the unfolding of the body plan, through the incremental, step-by-step implementation of the Hox gene set:

Clipping:

Why don’t our arms grow from the middle of our bodies? The question isn’t as trivial as it appears. Vertebrae, limbs, ribs, tailbone … in only two days, all these elements take their place in the embryo, in the right spot and with the precision of a Swiss watch. Intrigued by the extraordinary reliability of this mechanism, biologists have long wondered how it works. Now, researchers at EPFL (Ecole Polytechnique Fédérale de Lausanne) and the University of Geneva (Unige) have solved the mystery . . . .

During the development of an embryo, everything happens at a specific moment. In about 48 hours, it will grow from the top to the bottom, one slice at a time — scientists call this the embryo’s segmentation. “We’re made up of thirty-odd horizontal slices,” explains Denis Duboule, a professor at EPFL and Unige. “These slices correspond more or less to the number of vertebrae we have.”

Every hour and a half, a new segment is built. The genes corresponding to the cervical vertebrae, the thoracic vertebrae, the lumbar vertebrae and the tailbone become activated at exactly the right moment one after another. “If the timing is not followed to the letter, you’ll end up with ribs coming off your lumbar vertebrae,” jokes Duboule. How do the genes know how to launch themselves into action in such a perfectly synchronized manner? “We assumed that the DNA played the role of a kind of clock. But we didn’t understand how.” . . . .

Very specific genes, known as “Hox,” are involved in this process. Responsible for the formation of limbs and the spinal column, they have a remarkable characteristic. “Hox genes are situated one exactly after the other on the DNA strand, in four groups. First the neck, then the thorax, then the lumbar, and so on,” explains Duboule. “This unique arrangement inevitably had to play a role.”

The process is astonishingly simple. In the embryo’s first moments, the Hox genes are dormant, packaged like a spool of wound yarn on the DNA. When the time is right, the strand begins to unwind. When the embryo begins to form the upper levels, the genes encoding the formation of cervical vertebrae come off the spool and become activated. Then it is the thoracic vertebrae’s turn, and so on down to the tailbone. The DNA strand acts a bit like an old-fashioned computer punchcard, delivering specific instructions as it progressively goes through the machine.

“A new gene comes out of the spool every ninety minutes, which corresponds to the time needed for a new layer of the embryo to be built,” explains Duboule. “It takes two days for the strand to completely unwind; this is the same time that’s needed for all the layers of the embryo to be completed.”

This system is the first “mechanical” clock ever discovered in genetics. And it explains why the system is so remarkably precise . . . .

The process discovered at EPFL is shared by numerous living beings, from humans to some kinds of worms, from blue whales to insects. The structure of all these animals — the distribution of their vertebrae, limbs and other appendices along their bodies — is programmed like a sheet of player-piano music by the sequence of Hox genes along the DNA strand.

This is functionally specific, complex organisation and associated information in action, using a classic programming structure, the sequence of steps, with a timer involved. We have stumbled across Paley’s self-replicating watch, not in a field but in the genome, expressed at a crucial time in embryological development. There is also a strong hint that we are dealing with an island of function per body plan, as minor disturbances (as Meyer pointed out) are patently likely to be disruptive and possibly fatal.
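Taking the article's two figures at face value (one new segment every ninety minutes, about 48 hours in all), the timetable is simple arithmetic: 48 × 60 / 90 = 32 slots, consistent with the "thirty-odd" slices Duboule mentions. The sketch below lays out such a timer-driven sequence of steps. The region sizes are illustrative placeholders chosen only to fill the 32 slots, not measured vertebral counts, and the code models the timetable alone, not chromatin unwinding or gene regulation.

```python
from itertools import accumulate

INTERVAL_H = 1.5  # one new segment every ninety minutes (from the article)
TOTAL_H = 48      # about two days for the full sequence (from the article)

# Illustrative region sizes only, chosen to fill the 32 slots; not measured data.
REGIONS = [("cervical", 7), ("thoracic", 12), ("lumbar", 5), ("sacral/tail", 8)]


def slot_count(total_h=TOTAL_H, interval_h=INTERVAL_H):
    """How many segments fit into the stated developmental window."""
    return int(total_h // interval_h)


def schedule(regions, interval_h=INTERVAL_H):
    """Return (region, start_hour, end_hour), activating regions strictly in order."""
    ends = list(accumulate(count * interval_h for _, count in regions))
    starts = [0.0] + ends[:-1]
    return [(name, start, end) for (name, _), start, end in zip(regions, starts, ends)]


print(slot_count())  # → 32
for region, start, end in schedule(REGIONS):
    print(f"{region:12s} hours {start:5.1f} to {end:5.1f}")
```

Because each region starts only when the previous one ends, shifting any one interval shifts every later region, which is the sense in which the timing "must be followed to the letter."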

So, we must ask and try to answer a couple of the begged questions:

Q 1: If these three main points — missing tap-root, missing trunk, missing main branches — are so, then how was the Darwinian/Neo-Darwinian framework established as the standard, confidently presented theoretical explanation of the origin and body plan level diversity of life?

A 1: That establishment was, in the main, philosophical rather than observational. This is aptly summarised by ID thinker Philip Johnson in his reply to Lewontin’s a priori materialism, in First Things:

Q 2: But, is design capable of accounting for the origin of life and of body plan level diversity? Or, is the inference to design simply a negative, discredited God of the gaps argument that boils down to giving up on scientific explanation?

A 2: As was pointed out in response to Petrushka, design is, right off, a commonly observed and experienced causal mechanism: purposefully directed arrangement of parts towards a desired end. It often leaves behind detectable signs, which have long since been tested in cases where we can directly know the causal story as a cross-check; these tests consistently tell us that the signs are empirically reliable. Plainly, this is not a God of the gaps argument: we explain not on what we do not know, but on what we do know.

So, per the well-known inductive, provisional pattern of warrant that underlies science, absent credible observational evidence that such signs are not reliable signs of design as cause, we are entitled to infer from signs such as FSCO/I (functionally specific, complex organisation and/or associated information) to their signified causal pattern or process. Further, what hinders that inference on matters tied to the origin of life and the origin of body plans is not the weight or balance of the actual evidence, but the power of an institutionally dominant worldview: evolutionary materialism.

Which is exactly what Harvard evolutionary biologist Richard Lewontin admits (and which Johnson rebukes as cited just above):

It is not that the methods and institutions of science somehow compel us to accept a material explanation of the phenomenal world, but, on the contrary, that we are forced by our a priori adherence to material causes [[another major begging of the question . . . ] to create an apparatus of investigation and a set of concepts that produce material explanations, no matter how counter-intuitive, no matter how mystifying to the uninitiated. Moreover, that materialism is absolute [[i.e. here we see the fallacious, indoctrinated, ideological, closed mind . . . ], for we cannot allow a Divine Foot in the door. The eminent Kant scholar Lewis Beck used to say that anyone who could believe in God could believe in anything. To appeal to an omnipotent deity is to allow that at any moment the regularities of nature may be ruptured, that miracles may happen. [[Perhaps the second saddest thing is that some actually believe that these last three sentences that express hostility to God and then back it up with a loaded strawman caricature of theism and theists JUSTIFY what has gone on before. As a first correction, accurate history — as opposed to the commonly promoted rationalist myth of the longstanding war of religion against science — documents (cf. here, here and here) that the Judaeo-Christian worldview nurtured and gave crucial impetus to the rise of modern science through its view that God as creator made and sustains an orderly world. Similarly, for miracles — e.g. the resurrection of Jesus — to stand out as signs pointing beyond the ordinary course of the world, there must first be such an ordinary course, one plainly amenable to scientific study. The saddest thing is that many are now so blinded and hostile that, having been corrected, they will STILL think that this justifies the above. But, nothing can excuse the imposition of a priori materialist censorship on science, which distorts its ability to seek the empirically warranted truth about our world.]

[[From: “Billions and Billions of Demons,” NYRB, January 9, 1997. Bold emphasis added. ]

“Absolute” and “a priori adherence to material causes,” maintained “no matter how counter-intuitive, no matter how mystifying to the uninitiated,” are not exactly the stuff of open-minded, empirically driven science.

This is ideological agenda, not science.

And, as was noted, the caricature of theistic thought on science offered by Lewontin as a justification for his attitude is grossly ill-informed, both philosophically and historically. The nature of miracles is such that, to stand out as signs from beyond the ordinary course of events, they require just that: a generally predictable order to the cosmos. And the explicit teaching of the Judaeo-Christian tradition is that God is the God of Order, not chaos, who upholds creation with the intention that it be inhabited by the likes of us.

Blend in the equally biblical concept that God wishes for us to discern his hand in nature and to be stewards of our world, and we see that people within that tradition will generally be friendly to the project of exploring and identifying the intelligible principles that drive that order. Which is precisely the documented historical root of modern science: “thinking God’s [creative and sustaining] thoughts after him.”

The myth of an ages long war and irreconcilable hostility between Religion and Science is just that, a C19 rationalist myth long since past its sell-by date. But such (however important) is a bit adrift of the main focus for this post.

We may now return to and summarise the key take-home message:

1 –> Design is a real and observed phenomenon, one that is both meaningful and relevant to the study of the origins of life [unless one wishes to beg the question and decide with Lewontin et al., ahead of looking at the evidence, that the possibility of design is not to be entertained, regardless of evidence]. We may usefully define such design as the purposeful and intelligent choice and/or arrangement of parts to achieve a goal.

2 –> Artifacts of such design, once we pass a reasonable threshold of functionally specific complexity, are not credibly explained by incremental and cumulative development driven by chance and necessity without intelligence. We are therefore entitled to accept, as a scientific conclusion, the verdict of what we do see: FSCO/I is routinely and only produced by design. FSCO/I, then, is a reliable sign of design.

3 –> Attempts to explain away FSCO/I without reference to its empirically known source, design, typically tell only part of the story. For example, the genetic algorithms presented as simulations of what more or less plausibly was the case implicitly assume and impose an intelligently designed, smooth fitness function, then proceed to do hill-climbing optimisation within an island of function. That is, such algorithms are implicitly targeted and constrained, intelligently designed searches.
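A minimal sketch of the kind of search this point describes, assuming the simplest possible setup rather than any specific published genetic algorithm: a single parent bit string, one random bit flip per generation, and a child kept if it scores no worse (a scheme often called randomized local search). The fitness function `match_score` and the 20-bit target are hypothetical. What the sketch makes visible is that the scoring rule takes the target as an input, so the landscape is smooth all the way to it.

```python
import random


def match_score(x, target):
    """Smooth fitness: one point per position that already agrees with the target.
    Note that the target itself is an input to the scoring rule."""
    return sum(a == b for a, b in zip(x, target))


def mutate_and_select(target, seed=0, max_iters=5000):
    """Flip one random bit per generation; keep the child if it is no worse."""
    rng = random.Random(seed)
    n = len(target)
    parent = [rng.randint(0, 1) for _ in range(n)]
    for iteration in range(max_iters):
        if parent == target:
            return parent, iteration
        child = parent.copy()
        i = rng.randrange(n)
        child[i] = 1 - child[i]  # single-bit mutation
        if match_score(child, target) >= match_score(parent, target):
            parent = child  # selection against the built-in target
    return parent, max_iters


TARGET = [1, 0] * 10  # hypothetical 20-bit target
best, iterations = mutate_and_select(TARGET)
print(best == TARGET, iterations)
```

On this sketch, every accepted step is a step toward `TARGET`, because `match_score` rewards nothing else; remove the target from the scoring rule and the loop has no gradient to follow.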

4 –> They explain origins, in short by yet another switcheroo: intelligent design and artificial selection are substituted for the actual chance variation and natural selection that were to be tested. Then, ironically, the result is presented as though it undermines what it demonstrates: the power of intelligent design.

5 –> Going to the actual record of the past, ever since Darwin’s day 150 years ago, the fossil record has simply not provided strong support for a gradually branching evolutionary tree of life model driven by Darwinian natural selection. This is so with over 250,000 fossil species now studied, millions of fossils in museums, and billions more seen in the known beds. Instead we see islands of function, and a pattern of sudden appearance, stasis and gaps, as Gould noted. An astonishing related case in point from the now popular molecular evidence is the result of the kangaroo/human genome comparison, where the two lineages are said to have diverged some 150 million years ago on the evolutionary tree of life:

Like the o’possum, there are about 20,000 genes in the kangaroo’s genome . . . . That makes it about the same size as the human genome, but the genes are arranged in a smaller number of larger chromosomes.

“Essentially it’s the same houses on a street being rearranged somewhat,” Graves says.

“In fact there are great chunks of the [human] genome sitting right there in the kangaroo genome.”

6 –> In this overall context, Orgel and Wicken give us a classic contrast, one that highlights how the FSCO/I in life is distinct from the sort of patterns that are produced by blind chance and/or mechanical necessity:

Orgel:

Wicken:

7 –> Let us focus:

organized systems must be assembled element by element according to an [[originally . . . ] external ‘wiring diagram’ with a high information content . . . Organization, then, is functional complexity and carries information. It is non-random by design or by selection, rather than by the a priori necessity of crystallographic ‘order.’

8 –> It is that requirement for specification of elements, relationships and integration through a “wiring diagram” that is the root of the information-rich functionally specific organisation that is the heart of the design inference, once a sufficiently complex threshold is reached.

9 –> Wicken, of course, hoped that “selection” would include what the early Darwinists termed “natural selection,” or “survival of the fittest.”

10 –> But in fact, that is the fatal weakness. For such selection is a weeding out, necessarily a subtraction of information. It is the chance variation component of the variation-selection mechanism that is the hoped-for source of increments in information. And it is only when we have an integrated, functional, self-replicating, embryologically feasible body plan that incremental success leads to hill-climbing.

11 –> This leads to an implicit requirement that the domain of life form a continent of function that can be traversed by a Darwinian tree of life, from tap-root on to shoot, branches and twigs. That is precisely what is credibly not there in the fossil record, and precisely what the nature of integrated, functionally specific complex organisation dependent on codes, algorithms, molecular execution machines and a von Neumann Self Replication facility would not lead us to expect. (Recall the deep isolation of protein fold domains, per Axe’s findings that such domains are of order 1 in 10^70 or thereabouts of amino acid sequences. Similarly, in general, it is utterly implausible that something like a Hello World program could be incrementally converted into, say, an operating system where at each incremental step the resulting program is functional.)

12 –> In short, the reasonable conclusion is that the body plans of the observed world of life are based on islands of complex, specific function in a much larger configuration space of non-functional forms, starting from the first cell based life. In such a context, the observed variability and adaptability of life forms within islands of function — there are no actual observations of the origin of novel body plans — is best understood as an intentional design feature: flexibility and adaptability, giving robustness.

So, on the evidence, we are epistemologically entitled to confidently infer that the FSCO/I that appears in the world of life is the product of the only observed, empirically known source of such an information-rich pattern: design.

Wallace, co-founder of the theory of evolution by natural selection, thus had a serious point when he characterised “the world of life” as “a manifestation of Creative Power, Directive Mind and Ultimate Purpose.” END