[Continued from here]

In steps:

1: What it is — Wiki has a helpful summary:

>> A gene knockout (abbreviation: KO) is a genetic technique in which an organism is engineered to carry genes that have been made inoperative (have been “knocked out” of the organism). Also known as knockout organisms or simply knockouts, they are used in learning about a gene that has been sequenced, but which has an unknown or incompletely known function. Researchers draw inferences from the difference between the knockout organism and normal individuals. >>

a –> That is, the idea is that genes code for proteins that function (do jobs) in the cell, so if a gene is knocked out, its function is lost along with the protein.

b –> So the animal with the knocked-out gene [mice are typical] will show a gap in function relative to a normal mouse.

c –> Notice: the heart of the technique is the concept of a functional part. The protein does a job, so if the protein is lost, that job is blocked, and we may infer the function from the difference between the KO animal and the normal one; e.g. turn off a gene for hairiness, or one that controls how fat an animal tends to be, etc.

d –> And, on logic: if we have a cluster of parts, each of which is necessary for the function, and which together are jointly sufficient for it, we have an irreducibly complex entity.

e –> So, what Scott Minnich did is reasonable . . .
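The logic in point d (each part necessary, all parts jointly sufficient) maps directly onto a knockout screen: remove one part at a time and see whether the function survives. The sketch below is only a toy illustration of that logical test, assuming a hypothetical system whose parts are labelled A, B, C and whose function is modelled by a made-up predicate `works`; the helper names `knockout_screen` and `is_irreducibly_complex` are likewise hypothetical, not a standard method or library.

```python
# Toy model of a knockout screen. The parts and the `works` predicate are
# hypothetical placeholders, not a model of any real organism.

REQUIRED = frozenset({"A", "B", "C"})  # hypothetical parts the function needs


def works(parts: frozenset) -> bool:
    """Hypothetical function test: True only if every required part is present."""
    return REQUIRED <= parts


def knockout_screen(parts: frozenset, test) -> set:
    """Knock out each part in turn; return the parts whose loss abolishes function."""
    return {p for p in parts if not test(parts - {p})}


def is_irreducibly_complex(parts: frozenset, test) -> bool:
    """Point d as stated: the parts are jointly sufficient (the full set works)
    and each one is necessary (every single knockout abolishes the function)."""
    return test(parts) and knockout_screen(parts, test) == set(parts)


if __name__ == "__main__":
    core = frozenset({"A", "B", "C"})
    with_spare = core | {"D"}  # D is not needed, so knocking it out changes nothing
    print(is_irreducibly_complex(core, works))         # True
    print(is_irreducibly_complex(with_spare, works))   # False
    print(sorted(knockout_screen(with_spare, works)))  # ['A', 'B', 'C']
```

A redundant part (D) fails the necessity test, and an incomplete set fails the sufficiency test, which is why both halves of point d have to be checked.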

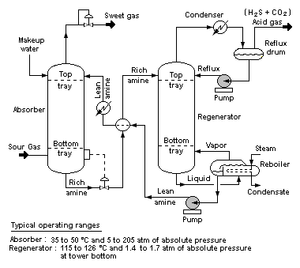

2: How tweredun — here, the Cytokines & Cells Encyclopedia article is helpful on two typical techniques (and has a handy diagram):

>> (a) Use of insertion type vectors involves a single cross-over between genomic target sequences and homologous sequences at either end of the targeting vector. The neomycin resistance gene contained within the vector serves as a positive selectable marker.

(b) Gene targeting using replacement type vectors requires two cross-over events. The positive selection marker (neo) is retained while the negative selectable marker (HSV thymidine kinase) is lost. The advantage of this system is the fact that cells harboring randomly and unspecifically integrated gene constructs still carry the thymidine kinase gene. These cells can be eliminated selectively by using thymidine kinase as a selective marker . . . >>
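The positive/negative selection logic in (b) amounts to a simple two-step filter over the treated cell clones. A minimal sketch, assuming a hypothetical `Clone` record that tracks only which markers a clone carries; the drugs named in the comments (G418 against cells lacking neo, ganciclovir against cells carrying HSV-tk) are the ones commonly paired with these markers, but the quoted article does not name them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clone:
    """A hypothetical cell clone, described only by which markers it carries."""
    label: str
    has_neo: bool  # positive selectable marker (neomycin resistance)
    has_tk: bool   # negative selectable marker (HSV thymidine kinase)


def passes_positive_selection(clone: Clone) -> bool:
    # Cells lacking neo are killed by a neomycin-type drug (commonly G418).
    return clone.has_neo


def passes_negative_selection(clone: Clone) -> bool:
    # Cells still carrying HSV-tk are killed by a drug such as ganciclovir.
    return not clone.has_tk


def select(clones: list) -> list:
    """Apply both selections; return the labels of the surviving clones."""
    return [c.label for c in clones
            if passes_positive_selection(c) and passes_negative_selection(c)]


if __name__ == "__main__":
    clones = [
        Clone("targeted (two cross-overs)", has_neo=True, has_tk=False),
        Clone("random integration", has_neo=True, has_tk=True),
        Clone("no integration", has_neo=False, has_tk=False),
    ]
    print(select(clones))  # ['targeted (two cross-overs)']
```

Only the clone that underwent both cross-overs keeps neo while losing tk, so it is the only one left after both rounds of selection; randomly integrated constructs are caught by the tk step.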

f –> The idea for (a) is that the target point in a gene is split: the homologous sequence ends up duplicated, one copy on either side, and a marker is inserted in the middle, breaking gene function and marking where the break was made so that it can be confirmed observationally:

g –> original DNA sequence:

– 1 2 3 4 5 6 7 8 9 10 –

h –> becomes, with “n e o” as the marker:

– 1 2 3 4 5 6 7 – n e o – 2 3 4 5 6 7 8 9 10 –

i –> For (b), go look at the diagram in the linked article.
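The before/after sequences in g and h can be reproduced with a toy model in which integers stand for stretches of DNA. This is a sketch under simplifying assumptions (identical homology arms, clean cross-overs, an insertion vector carrying only the arm plus the marker), and the helper names are hypothetical. For comparison it also models the replacement-type event of (b), where two cross-overs swap the segment between the arms for the marker, and anything outside the arms (such as the tk gene) is left behind.

```python
def find_segment(genome: list, arm: list) -> int:
    """Return the start index of `arm` inside `genome` (the homologous site)."""
    n = len(arm)
    for i in range(len(genome) - n + 1):
        if genome[i:i + n] == arm:
            return i
    raise ValueError("homology arm not found in target")


def insertion_vector(genome: list, arm: list, marker: list) -> list:
    """(a) One cross-over integrates a circular vector (arm + marker):
    the arm ends up duplicated, with the marker between the two copies."""
    start = find_segment(genome, arm)
    end = start + len(arm)
    return genome[:start] + arm + marker + arm + genome[end:]


def replacement_vector(genome: list, left_arm: list, right_arm: list,
                       marker: list) -> list:
    """(b) Two cross-overs, one in each arm: everything between the arms is
    replaced by the marker; vector parts outside the arms (e.g. tk) are lost."""
    cut_start = find_segment(genome, left_arm) + len(left_arm)
    cut_end = find_segment(genome, right_arm)
    if cut_end < cut_start:
        raise ValueError("right arm must lie downstream of the left arm")
    return genome[:cut_start] + marker + genome[cut_end:]


def show(seq: list) -> str:
    return " ".join(str(x) for x in seq)


if __name__ == "__main__":
    genome = list(range(1, 11))
    print(show(genome))  # 1 2 3 4 5 6 7 8 9 10
    print(show(insertion_vector(genome, [2, 3, 4, 5, 6, 7], ["neo"])))
    # 1 2 3 4 5 6 7 neo 2 3 4 5 6 7 8 9 10
    print(show(replacement_vector(genome, [2, 3], [7, 8], ["neo"])))
    # 1 2 3 neo 7 8 9 10
```

The insertion case reproduces the diagram in h exactly; the replacement case deletes segments 4 to 6 outright, which is why it leaves no duplicated copy behind.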

3: In praxis — Wiki (same article) is helpful again:

>> Knockout is accomplished through a combination of techniques, beginning in the test tube with a plasmid, a bacterial artificial chromosome or other DNA construct, and proceeding to cell culture. Individual cells are genetically transformed with the DNA construct. Often the goal is to create a transgenic animal that has the altered gene. If so, embryonic stem cells are genetically transformed and inserted into early embryos. Resulting animals with the genetic change in their germline cells can then often pass the gene knockout to future generations. >>

j –> In other words, a newly constructed clone carrying the knockout is grown from an early embryo into a full animal.

k –> BTW, something like 15% [~ 1 in 7] of cloned KO mice die in embryonic stages, so the technique reveals how risky mutations are for embryonic development. This is itself a challenge to claims that chance mutations played a big role in the origin of body plans.

l –> Next, a lab strain can be created by breeding KO animals, as it is much cheaper and more reliable to reproduce them the old-fashioned way. (There is actually a market in specific strains for particular types of research, e.g. Methuselah is a long-lived mouse strain.)
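The breeding step follows ordinary Mendelian inheritance for a single gene. A minimal sketch, assuming a simple autosomal knockout allele (written `-`, with `+` for the normal allele) and ignoring embryonic lethality, linkage and sex: two heterozygous carriers give about one homozygous knockout pup in four, and once homozygous KO animals are available, crossing them breeds true. The function name `cross` is hypothetical.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def cross(parent1: str, parent2: str) -> dict:
    """Punnett square for one gene: each parent passes on one of its two
    alleles with equal probability. Returns genotype -> expected fraction."""
    offspring = Counter(
        "".join(sorted(a + b)) for a, b in product(parent1, parent2)
    )
    total = sum(offspring.values())
    return {genotype: Fraction(n, total) for genotype, n in offspring.items()}


if __name__ == "__main__":
    # Heterozygous carriers (+/-): about 1 in 4 pups is a homozygous knockout.
    print(cross("+-", "+-"))
    # Homozygous knockouts bred together: every pup is a knockout (true-breeding).
    print(cross("--", "--"))
```

If a knockout is embryonic-lethal (as in point k), the observed share of `--` pups from a carrier cross falls below the expected quarter, which is one way such lethality shows up in practice.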

So KO studies, an established research technique, are based on the reality of IC in biological organisms.

We already know that IC is an informational barrier to evolvability once we are beyond the FSCO/I-type threshold. Also, as Behe pointed out in later studies on patterns of observed evolution in the malaria parasite, there seems to be an empirical barrier at the double-mutation point, i.e. there is a credible edge of evolution. Of course, we may comfortably accept many exceptions to this barrier (once they are empirically demonstrated) and still not have reached the relevant level of challenge: the origin of complex organs, facilities or body plan features that have irreducible complexity. And, credibly, the role of the von Neumann-type self-replicator in the ability of cells to replicate themselves while also performing metabolism puts an IC barrier right at the origin of life, the root of Darwin’s tree of life itself.

In addition:

“There is now considerable evidence that genes alone do not control development. For example when an egg’s genes (DNA) are removed and replaced with genes (DNA) from another type of animal, development follows the pattern of the original egg until the embryo dies from lack of the right proteins. (The rare exceptions to this rule involve animals that could normally mate to produce hybrids.) The Jurassic Park approach of putting dinosaur DNA into ostrich eggs to produce a Tyrannosaurus rex makes exciting fiction but ignores scientific fact.” [The Design of Life – William Dembski, Jonathan Wells Pg. 50. Emphasis added. HT: BA 77]

But, there is a fifth barrier involved, which can perhaps be best seen in the recently studied case of the origin of birds. To appreciate the point, let us provide a picture of a flight-type feather to show how it is structured and how it works in context in the wing:

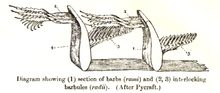

Fig. C: The feather, showing the complex interlocking required for function (Courtesy, Wiki)

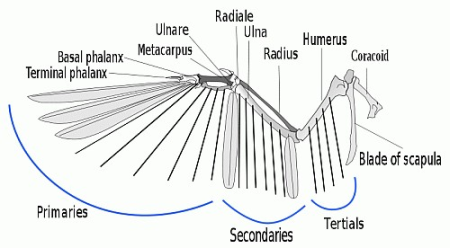

Fig. D: Flight feathers and the wing, which also requires support musculature and sophisticated control and co-ordination (Courtesy, Wiki CCA, L. Shyama)

As ENV currently reports:

In a peer-reviewed paper titled “Evidence of Design in Bird Feathers and Avian Respiration,” [US $ 50 paywall] in International Journal of Design & Nature and Ecodynamics, Leeds University professor Andy McIntosh argues that two systems vital to bird flight–feathers and the avian respiratory system–exhibit “irreducible complexity [defined as Behe does]” . . . .

Regarding the structure of feathers, he argues that they require many features present in order to properly function and allow flight:

[I]t is not sufficient to simply have barbules to appear from the barbs but that opposing barbules must have opposite characteristics – that is, hooks on one side of the barb and ridges on the other so that adjacent barbs become attached by hooked barbules from one barb attaching themselves to ridged barbules from the next barb (Fig. 4). It may well be that as Yu et al. [18] suggested, a critical protein is indeed present in such living systems (birds) which have feathers in order to form feather branching, but that does not solve the arrangement issue concerning left-handed and right-handed barbules. It is that vital network of barbules which is necessarily a function of the encoded information (software) in the genes. Functional information is vital to such systems.

He further notes that many evolutionary authors “look for evidence that true feathers developed first in small non-flying dinosaurs before the advent of flight, possibly as a means of increasing insulation for the warm-blooded species that were emerging.” However, he finds that when it comes to fossil evidence for the evolution of feathers, “[n]one of the fossil evidence shows any evidence of such transitions.”

Regarding the avian respiratory system, McIntosh contends that a functional transition from a purported reptilian respiratory system to the avian design would lead to non-functional intermediate stages. He quotes John Ruben stating, “The earliest stages in the derivation of the avian abdominal air sac system from a diaphragm-ventilating ancestor would have necessitated selection for a diaphragmatic hernia in taxa transitional between theropods and birds. Such a debilitating condition would have immediately compromised the entire pulmonary ventilatory apparatus and seems unlikely to have been of any selective advantage.” With such unique constraints in mind, McIntosh argues that “even if one does take the fossil evidence as the record of development, the evidence is in fact much more consistent with an ab initio design position – that the breathing mechanism of birds is in fact the product of intelligent design.”

Indeed, the first of these examples is not a new one. The co-founder of evolutionary theory (and an early advocate of “Intelligent Evolution”), Alfred Russel Wallace, argued in The World of Life: A Manifestation of Creative Power, Directive Mind and Ultimate Purpose (Chapman and Hall, 1914 [orig. 1911]; online copy here, 36 MB):

. . . the bird’s wing seems to me to be, of all the mere mechanical organs of any living thing, that which most clearly implies the working out of a pre-conceived design in a new and apparently most complex and difficult manner, yet so as to produce a marvellously successful result. The idea worked out was to reduce the jointed bony framework of the wings to a compact minimum of size and maximum of strength in proportion to the muscular power employed; to enlarge the breastbone so as to give room for greatly increased power of pectoral muscles; and to construct that part of the wing used in flight in such a manner as to combine great strength with extreme lightness and the most perfect flexibility. In order to produce this more perfect instrument for flight the plan of a continuous membrane, as in the flying reptiles (whose origin was probably contemporaneous with that of the earliest birds) and flying mammals, to be developed at a much later period, was rejected, and its place was taken by a series of broad overlapping oars or vanes, formed by a central rib of extreme strength, elasticity, and lightness, with a web on each side made up of myriads of parts or outgrowths so wonderfully attached and interlocked as to form a self-supporting, highly elastic structure of almost inconceivable delicacy, very easily pierced or ruptured by the impact of solid substances, yet able to sustain almost any amount of air-pressure without injury. [287 – 88] . . . .

A great deal has been written on the mechanics of a bird’s flight, as dependent on the form and curvature of the feathers and of the entire wing, the powerful muscular arrangements, and especially the perfection of the adjustment by which during the rapid down-stroke the combined feathers constitute a perfectly air-tight, exceedingly strong, yet highly elastic instrument for flight; while the moment the upward motion begins the feathers all turn upon their axes so that the air passes between them with hardly any resistance, and when they again begin the down-stroke close up automatically as air-tight as before. Thus the effective down-strokes follow each other so rapidly that, together with the support given by the hinder portion of the wings and tail, the onward motion is kept up, and the strongest flying birds exhibit hardly any undulation in the course they are pursuing. But very little is said about the minute structure of the feathers themselves, which are what renders perfect flight in almost every change of conditions a possibility and an actually achieved result.

But there is a further difference between this instrument of flight and all others in nature. It is not, except during actual growth, a part of the living organism, but a mechanical instrument which the organism has built up, and which then ceases to form an integral portion of it is, in fact, dead matter. [290 – 1]

In short, in the complex, specific, functional mechanisms and organisation of organs and limbs such as the wings of a bird, Wallace sees marks of purposeful organisation and underlying intelligent direction, much as Paley had in his day. However, there is a significant difference: to Wallace, the design comes about through evolutionary means, and is rooted in an underlying purpose of designed diversity in nature, working through organising principles.

Intelligent design, using evolutionary means, in short.

_____________________

So, we may freely conclude: however we may debate mechanisms, the point remains that irreducible complexity is a clear obstacle to evolution by chance-based, Darwinian-type processes of variation and selection by differential reproductive success, especially when the origin of complex organs and body plans is on the table. In short:

That challenge was unmet in 1859.

It was still unmet in 1911.

It remained unmet in 1996.

And, it is still unmet today.

For, irreducible complexity is a strong, empirically well-supported sign pointing to intelligent design. END