[Continued from here]

An excellent place to begin is back with Dr Dembski’s observation in No Free Lunch, as was already excerpted; but let us remind ourselves:

. . .[From commonplace experience and observation, we may see that:] (1) A designer conceives a purpose. (2) To accomplish that purpose, the designer forms a plan. (3) To execute the plan, the designer specifies building materials and assembly instructions. (4) Finally, the designer or some surrogate applies the assembly instructions to the building materials. (No Free Lunch, p. xi. HT: ENV.) [Emphases and explanatory parenthesis added.]

Let us notice: conceiving a purpose, forming a plan, specifying materials and instructions.

We see here that an agent must act rationally and volitionally, on knowledge and creative imagination. In that context, the complex, specific, functional organisation and associated information is first in the mind, then through various ways and means, it is actualised in the physical world. Choosing an example of composing a text and posting it to UD, we may observe from a previous ID foundations series post:

a: When I type the text of this post by moving fingers and pressing successive keys on my PC’s keyboard,

b: I [a self, and arguably: a self-moved designing, intentional, initiating agent and initial cause] successively

c: choose alphanumeric characters (according to the symbols and rules of a linguistic code) towards the goal [a purpose, telos or “final” cause] of writing this post, giving effect to that choice by

d: using a keyboard etc, as organised mechanisms, ways and means to give a desired and particular functional form to the text string, through

e: a process that uses certain materials, energy sources, resources, facilities and forces of nature and technology to achieve my goal.

. . . The result is complex, functional towards a goal, specific, information-rich, and beyond the credible reach of chance [the other source of high contingency] on the gamut of our observed cosmos across its credible lifespan. In such cases, when we observe the result, on common sense, or on statistical hypothesis-testing, or other means, we habitually and reliably assign outcomes to design.

Now, let us focus on the issue of choice in the context of reason and responsibility:

21 –> If I am only a product of evolutionary materialistic dynamics over the past 13.7 BY and on earth the past 4.6 BY, in a reality that (as Lewontin and ever so many others urge) is wholly material, and so

22 –> I am not sufficiently free to make a truly free choice as a self-moved agent — however I may subjectively imagine myself to be choosing [i.e. immediately, the evolutionary materialistic view implies we are all profoundly and inescapably delusional], then

23 –> Whatever I post is simply the end-product of a chain of cause-effect bonds trailing back to undirected forces of chance and necessity acting across time on matter and energy, forces that are utterly irrelevant to the rationality of ground and consequent, or the duty of sound thinking and decision.

24 –> That this is in fact a fair-comment view of the evolutionary materialistic position can be seen, for one instance, from Cornell professor of the history of biology William Provine’s remarks in his U Tenn Darwin Day keynote address of 1998:

The first 4 implications are so obvious to modern naturalistic evolutionists that I will spend little time defending them. Human free will, however, is another matter. Even evolutionists have trouble swallowing that implication. I will argue that humans are locally determined systems that make choices. They have, however, no free will . . . [Evolution: Free Will and Punishment and Meaning in Life, Second Annual Darwin Day Celebration Keynote Address, University of Tennessee, Knoxville, February 12, 1998 (abstract).]

25 –> This, if true, would immediately and irrecoverably undermine morality. But more than that, if all phenomena in the cosmos are shaped and controlled in the end by blind chance and necessity acting through blind watchmaker, evolutionary dynamics — however mediated — then the credibility of reasoning irretrievably breaks down.

28 –> ID thinker Philip Johnson’s retort in Reason in the Balance was apt. Namely: Dr Crick should therefore be willing to preface his books: “I, Francis Crick, my opinions and my science, and even the thoughts expressed in this book, consist of nothing more than the behavior of a vast assembly of nerve cells and their associated molecules.” (In short, as Prof Johnson then went on to say: “[t]he plausibility of materialistic determinism requires that an implicit exception be made for the theorist.”)

29 –> Actually, this fatal flaw had long since been highlighted by J. B. S. Haldane:

“It seems to me immensely unlikely that mind is a mere by-product of matter. For if my mental processes are determined wholly by the motions of atoms in my brain I have no reason to suppose that my beliefs are true. They may be sound chemically, but that does not make them sound logically. And hence I have no reason for supposing my brain to be composed of atoms.” [“When I am dead,” in Possible Worlds: And Other Essays [1927], Chatto and Windus: London, 1932, reprint, p.209. (Highlight and emphases added.)]

30 –> In short, if the conscious mind is a mere epiphenomenon of brain-meat in action, as neurons fire away in neural networks wired by chance and necessity, then it has no credible capability to rise above cause-effect chains tracing to blind chance and necessity, to achieve responsible reasoning on grounds, evidence, warrant and consequences. We are at reductio ad absurdum.

31 –> In his The Laws, Bk X, 360 BC, Plato showed a more promising beginning-point. Here, he speaks in the voice of the Athenian Stranger:

Ath. . . . when one thing changes another, and that another, of such will there be any primary changing element? How can a thing which is moved by another ever be the beginning of change? Impossible. But when the self-moved changes other, and that again other, and thus thousands upon tens of thousands of bodies are set in motion, must not the beginning of all this motion be the change of the self-moving principle? . . . . self-motion being the origin of all motions, and the first which arises among things at rest as well as among things in motion, is the eldest and mightiest principle of change, and that which is changed by another and yet moves other is second.

[ . . . . ]

Ath. If we were to see this power existing in any earthy, watery, or fiery substance, simple or compound - how should we describe it?

Cle. You mean to ask whether we should call such a self-moving power life?

Ath. I do.

Ath. And when we see soul in anything, must we not do the same - must we not admit that this is life?

[ . . . . ]

Cle. You mean to say that the essence which is defined as the self-moved is the same with that which has the name soul?

Ath. Yes; and if this is true, do we still maintain that there is anything wanting in the proof that the soul is the first origin and moving power of all that is, or has become, or will be, and their contraries, when she has been clearly shown to be the source of change and motion in all things?

32 –> So, if we are willing to accept the testimony of our experience, that we are self-moved, reasoning, responsible, body-supervising, conscious, enconscienced minds, then we can see how we may use bodily means (brains, sensors, effectors) to interface with and act into the physical world to achieve our purposes, leaving FSCO/I as a trace of that minded, intelligent, designing action.

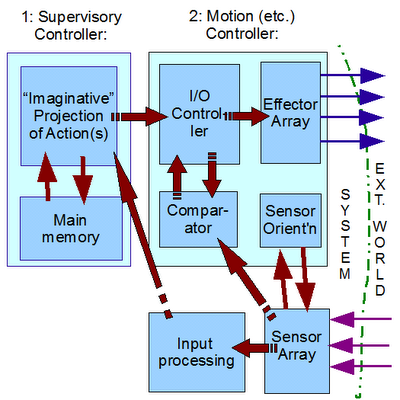

33 –> This brings to bear the relevance of the Derek Smith, two-tier controller, cybernetic model:

Fig. E: The Derek Smith, two-tier controller cybernetic model. (Adapted, Derek Smith.)

34 –> Here, the higher order, supervisory controller intervenes informationally on the lower order loop, and the lower order controller acts as an input-output and processing unit for the higher order unit. (This architecture is obviously also relevant to the development of smart robots.)

35 –> In short, we are not locked up to the notion that mind is at best an epiphenomenon of brain-meat.

36 –> Going further, by taking our experience of ourselves as reasoning, choosing, acting designers who often leave FSCO/I behind in objects, systems, processes and phenomena that reflect counter-flow leading to local organisation, we have a coherent basis for understanding the significance of the inference to design.
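For readers who think in terms of control systems, the two-tier architecture described in point 34 can be illustrated with a toy simulation. This is only a minimal sketch under stated assumptions, not Derek Smith's model itself: the class names `LowerLoop` and `Supervisor`, the proportional gain, the tolerance and the one-dimensional "plant" are all hypothetical choices made for illustration. The supervisor intervenes purely informationally, by writing a target into the lower loop, while the lower loop does the sensing and actuating and reports its state back.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LowerLoop:
    """Lower-order controller: proportional feedback around a toy 1-D plant."""
    gain: float = 0.5      # assumed proportional gain
    state: float = 0.0     # plant output that the loop senses and drives
    setpoint: float = 0.0  # target, written only by the supervisor

    def step(self) -> float:
        error = self.setpoint - self.state
        self.state += self.gain * error  # actuate: close a fraction of the error
        return self.state


@dataclass
class Supervisor:
    """Higher-order controller: holds a plan and acts only by setting targets."""
    plan: List[float]
    tolerance: float = 0.01  # assumed "close enough" threshold
    index: int = 0

    def supervise(self, loop: LowerLoop) -> bool:
        """Update the loop's target; return False once the whole plan is done."""
        # Read the loop's reported state and skip goals already achieved.
        while (self.index < len(self.plan)
               and abs(loop.state - self.plan[self.index]) <= self.tolerance):
            self.index += 1
        if self.index >= len(self.plan):
            return False
        # Informational intervention: no force is applied, only a new target.
        loop.setpoint = self.plan[self.index]
        return True


def run(plan, max_steps=1000):
    """Drive the lower loop through every goal in the plan, in order."""
    loop = LowerLoop()
    boss = Supervisor(plan=list(plan))
    trace = []
    for _ in range(max_steps):
        if not boss.supervise(loop):
            break
        trace.append(loop.step())
    return loop, boss, trace


if __name__ == "__main__":
    loop, boss, trace = run([1.0, -2.0, 0.5])
    print(f"goals completed: {boss.index}, steps taken: {len(trace)}")
```

The design point the sketch tries to bring out is the separation of roles: the lower loop has no notion of a plan, and the supervisor never touches the plant directly.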

_____________

Design points to the designing mind, in sum.

And while that has potential worldview level import, it is no business of science to trouble itself unduly over the possible onward philosophical debates once we can establish the principle of inference to design on reliable empirical signs such as FSCO/I.

At least, if we understand science at its best as:

the unfettered (but ethically and intellectually responsible) progressive pursuit of the empirically evident truth about our world, based on observation, measurement, analysis, theoretical modelling on inference to best explanation, and free, uncensored but mutually respectful discussion among the informed.