In two recent UD threads, frequent commenter AI Guy, an Artificial Intelligence researcher, has thrown down the gauntlet:

Winds of Change, 76:

By “counterflow” I assume you mean contra-causal effects, and so by “agency” it appears you mean libertarian free will. That’s fine and dandy, but it is not an assertion that can be empirically tested, at least at the present time.

If you meant something else by these terms please tell me, along with some suggestion as to how we might decide if such a thing exists or not. [Emphases added]

ID Does Not Posit Supernatural Causes, 35:

Finally there is an ID proponent willing to admit that ID cannot assume libertarian free will and still claim status as an empirically-based endeavor. [Emphasis added] This is real progress!

Now for the rest of the problem: ID still claims that “intelligent agents” leave tell-tale signs (viz FSCI), even if these signs are produced by fundamentally (ontologically) the same sorts of causes at work in all phenomena . . . . since ID no longer defines “intelligent agency” as that which is fundamentally distinct from chance + necessity, how does it define it? It can’t simply use the functional definition of that which produces FSCI, because that would obviously render ID’s hypothesis (that the FSCI in living things was created by an intelligent agent) completely tautological. [Emphases original. NB: ID blogger Barry Arrington had simply said: “I am going to make a bold assumption for the sake of argument. Let us assume for the sake of argument that intelligent agents do NOT have free will . . . ” (Emphases added.)]

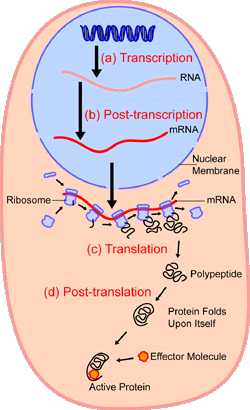

This challenge brings into sharp focus the foundational issue of counterflow: constructive work by designing, self-moved, initiating, purposing agents, as a key concept and explanatory term in the theory of intelligent design. For instance, we may see this in leading ID researcher William Dembski’s No Free Lunch:

. . .[From commonplace experience and observation, we may see that:] (1) A designer conceives a purpose. (2) To accomplish that purpose, the designer forms a plan. (3) To execute the plan, the designer specifies building materials and assembly instructions. (4) Finally, the designer or some surrogate applies the assembly instructions to the building materials. (No Free Lunch, p. xi. HT: ENV.) [Emphases and explanatory parenthesis added.]

This is, of course, directly based on, and aptly summarises, our routine experience and observation of designers in action.

For, designers routinely purpose, plan and carry out constructive work directly or through surrogates (which may be other agents, or automated, programmed machines). Such work often produces functionally specific, complex organisation and associated information [FSCO/I; a new descriptive abbreviation that brings the organised components and the link to FSCI (as was highlighted by Wicken in 1979) into central focus].

ID thinkers argue, in turn, that FSCO/I is an empirically reliable sign pointing to intentionally and intelligently directed configuration (i.e. design) as its signified cause.

And, many such thinkers further argue that:

if, P: one is not sufficiently free in thought and action to sometimes actually and truly decide by reason and responsibility (as opposed to: simply playing out the subtle programming of blind chance and necessity mediated through nature, nurture and manipulative indoctrination)

then, Q: the whole project of rational investigation of our world based on observed evidence and reason — i.e. science (including AI) — collapses in self-referential absurdity.

But, we now need to show that . . .

More subtly, through the question of “counterflow” (i.e. constructive work), the issue AI Guy raised first surfaces questions on the thermodynamics of energy conversion devices, the link of entropy to information, the way that open systems increase local organisation, and the underlying origin of energy conversion devices that exhibit FSCO/I, especially those in biological organisms.

This issue has been on the table since the very first ID technical book, The Mystery of Life’s Origin [TMLO], by Thaxton, Bradley and Olsen [TBO], in 1984. For, these authors noted as they closed their Ch 7, on the basic thermodynamics of living systems, that:

While the maintenance of living systems is easily rationalized in terms of thermodynamics, the origin of such living systems is quite another matter. Though the earth is open to energy flow from the sun, the means of converting this energy into the necessary work to build up living systems from simple precursors remains at present unspecified (see equation 7-17). The “evolution” from biomonomers of to fully functioning cells is the issue. Can one make the incredible jump in energy and organization from raw material and raw energy, apart from some means of directing the energy flow through the system? In Chapters 8 and 9 we will consider this question, limiting our discussion to two small but crucial steps in the proposed evolutionary scheme namely, the formation of protein and DNA from their precursors.

It is widely agreed that both protein and DNA are essential for living systems and indispensable components of every living cell today.[11] Yet they are only produced by living cells. Both types of molecules are much more energy and information rich than the biomonomers from which they form. Can one reasonably predict their occurrence given the necessary biomonomers and an energy source? Has this been verified experimentally? These questions will be considered . . . [Emphasis added. Cf summary in the peer-reviewed journal of the American Scientific Affiliation, “Thermodynamics and the Origin of Life,” in Perspectives on Science and Christian Faith 40 (June 1988): 72-83, pardon the poor quality of the scan. NB: as the journal’s online issues will show, this is not necessarily a “friendly audience” for design thinkers.]

Let us take this up in steps:

1 –> “Counterflow” generally speaks of going opposite to “time’s arrow” [a classic metaphor for the degradation impact of the 2nd law of thermodynamics], by performing constructive work.

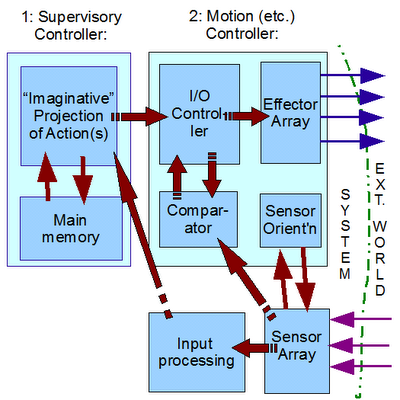

2 –> That is, by in effect harnessing an energy-conversion device, a local increase in order (indeed, in organisation) can be created according to a pattern, blueprint, plan, or at least an intention. As we may illustrate:

Fig. A: Energy flows and work. The joint action of the first and second laws of thermodynamics shows how a heat engine/energy converter can only partly convert imported energy (which must be in an appropriate form, fitted to the device) into work. Specifically, as part (b) shows, an increment of heat flow d’Q_i from heat source A goes partly into an increase of the internal energy of device B, dE_B, partly into shaft work dW, and partly into an exhausted heat increment d’Q_o that ends up in heat sink D; by the first law, d’Q_i = dE_B + dW + d’Q_o.

(NB: Under the second law, wherever heat d’Q crosses an interface at absolute temperature T, the entropy change satisfies dS ≥ d’Q/T, with equality, dS = d’Q_rev/T, only for a reversible transfer. In part (a), heat flows from A to B, which is at a lower temperature. A’s loss of heat reduces its entropy by d’Q/T_A; but since T_B < T_A, B’s gain in entropy, d’Q/T_B, is greater. So, when the two are netted off, the entropy of the universe as a whole rises.)

__________
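The bookkeeping in Fig. A can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function names and the numbers (100 J taken in, 10 J stored, 30 J of shaft work; 1 J passing from 400 K to 300 K) are assumptions, not values read off the figure. It applies the first-law split of part (b), heat in = rise in internal energy + shaft work + exhausted heat, and the entropy netting of part (a), treating A and B as reservoirs whose temperatures barely change.

```python
# Illustrative bookkeeping for Fig. A; all numbers are assumed, not read off the figure.


def first_law_exhaust(heat_in: float, stored: float, shaft_work: float) -> float:
    """Heat exhausted to the sink (J), from heat_in = stored + shaft_work + exhaust."""
    return heat_in - stored - shaft_work


def entropy_change_of_transfer(heat: float, t_hot: float, t_cold: float) -> float:
    """Net entropy change (J/K) when `heat` leaves a body at t_hot and enters one at t_cold.

    Temperatures are absolute (kelvin). Each body is treated as a reservoir whose
    temperature does not change appreciably during the small transfer.
    """
    ds_hot = -heat / t_hot    # A loses heat, so its entropy falls
    ds_cold = heat / t_cold   # B gains the same heat at a lower temperature
    return ds_hot + ds_cold


# Part (b): assume 100 J taken in, 10 J stored in B, 30 J delivered as shaft work.
print(first_law_exhaust(100.0, 10.0, 30.0))  # 60.0 (J exhausted to the sink)

# Part (a): assume 1 J flows from A at 400 K to B at 300 K.
print(entropy_change_of_transfer(1.0, 400.0, 300.0))  # small but positive
```

For any receiving temperature below the source temperature the net change comes out positive; it falls to zero only in the limiting case of equal temperatures.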

3 –> As fig. A shows, open systems can indeed readily (but, alas, only temporarily) increase local organisation by importing energy from a “source” and doing the right kind of work. But they generally do so only in a context of guiding information based on an intent or program, or on their own functional organisation, and at the expense of exhausting compensating disorder to some “sink” or other. (NB: here, something like a timing belt and set of cams is a program.)

4 –> Heat (in short, energy moving between bodies because of a temperature difference, by radiation, convection or conduction) cannot be wholly converted to work. (Here, the radiant energy flowing out from our sun’s surface, at some 6,000 degrees Celsius, to the earth, at an average of some 15 degrees Celsius, is a form of heat.)
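Point 4 can be made quantitative with the standard Carnot bound. It is not part of the original argument, but it is textbook thermodynamics: no heat engine working between absolute temperatures T_hot and T_cold can convert more than 1 - T_cold/T_hot of the heat it takes in into work. The sketch assumes the sun’s surface and the earth can be treated as ideal reservoirs at the temperatures quoted, which is a simplification; for radiation the practically attainable figure is lower still, so this is an upper limit only. The function name is hypothetical.

```python
def carnot_limit(t_hot_c: float, t_cold_c: float) -> float:
    """Upper bound on the fraction of heat a heat engine can turn into work.

    Inputs are in degrees Celsius; they are converted to kelvin because the
    Carnot bound 1 - T_cold / T_hot only holds for absolute temperatures.
    """
    t_hot = t_hot_c + 273.15
    t_cold = t_cold_c + 273.15
    if not 0.0 < t_cold < t_hot:
        raise ValueError("need 0 K < T_cold < T_hot")
    return 1.0 - t_cold / t_hot


# Sun's surface (~6,000 degrees C) as source, earth (~15 degrees C) as sink.
print(round(carnot_limit(6000.0, 15.0), 3))  # 0.954
```

Even in this idealised case some heat must be exhausted; for a cooler source such as boiling water at 100 °C rejecting heat at 15 °C, `carnot_limit(100.0, 15.0)` gives only about 0.228.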

5 –> Physically, by definition: work is done when applied forces impart motion along their lines of action to their points of application, e.g. when we lift a heavy box to put it on a shelf, we do work. For force F, and distance along line of motion dx, the work is:

dW = F · dx, where, strictly, “·” denotes the dot (scalar) product of the force and displacement vectors
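The dot product in the definition is what restricts work to motion along the force’s line of action. A minimal sketch, assuming a constant force, a straight-line displacement and g = 9.81 m/s²; the box mass, distances and the function name `work` are made-up illustrative values:

```python
def work(force: tuple[float, ...], displacement: tuple[float, ...]) -> float:
    """Work dW = F . dx (J) for a constant force over a straight-line displacement."""
    if len(force) != len(displacement):
        raise ValueError("force and displacement must have the same dimension")
    # Dot product: only the force component along the motion contributes.
    return sum(f * d for f, d in zip(force, displacement))


M, G = 10.0, 9.81                    # assumed box mass (kg) and gravity (m/s^2)
support = (0.0, 0.0, M * G)          # upward force just balancing the box's weight (N)

lift = work(support, (0.0, 0.0, 1.5))   # raise the box 1.5 m onto a shelf
carry = work(support, (3.0, 0.0, 0.0))  # carry it 3 m along a level floor
print(lift)   # about 147.15 J
print(carry)  # 0.0: the force is perpendicular to the motion
```

The second case is the familiar reminder that carrying a load at a constant height does no work in this strict physical sense, however tiring it may be.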

6 –> But, that definition does not say anything about whether or not the work is constructive — a tornado ripping off a roof and flying its parts for a mile to land elsewhere has done physical work, but not constructive work.

(Side-bar: constructive work is closely connected to the sort of work we get paid for; if your work is constructive, desirable and affordable, you get paid for it. [Hence the connexion between energy use at a given general level of technology and the level of economic activity and national income.])

7 –> Similarly, it says nothing about the origin of the energy conversion device.

8 –> When that device itself manifests functionally specific, complex organisation and associated information, i.e. FSCO/I (e.g. a gas engine-generator set, or a solar PV panel, battery and wind turbine set, as opposed to, e.g., the natural law-dominated order exhibited by tornadoes or hurricanes as vortexes), we have good reason to infer that the conversion device was designed.

(Side-bar: Now, there is arguably a link between increased information and a reduction in the degrees of microscopic freedom for distributing energy and mass. Here, entropy is best understood as a logarithmic measure of the number of ways energy and mass can be distributed under a given set of macro-level constraints like pressure, temperature, magnetic field, etc.:

S = k ln W, where k is Boltzmann’s constant and W is the number of “ways.”

Jaynes therefore observed, aptly [but somewhat controversially]: “The entropy of a thermodynamic system is a measure of the degree of ignorance of a person whose sole knowledge about its microstate consists of the values of the macroscopic quantities . . . which define its [macro-level observable] thermodynamic state. This is a perfectly ‘objective’ quantity . . . There is no reason why it cannot be measured in the laboratory.”[Cited, Harry Robertson, Statistical Thermophysics, Prentice Hall, 1993, p. 36.]

This connects fairly directly to the information-as-negentropy concept of Brillouin and Szilard, but that is not our focus here, which is instead on the credible source/cause of energy conversion devices exhibiting FSCO/I. As this thought experiment shows [cf. TMLO chs 8 & 9], the correct assembly of such a device from microscopic components scattered at random in a vat or a pond would indeed drastically reduce entropy and increase functionality [which would define an observable functional state]. But the basic message is that, since the scattered microstates so overwhelmingly outnumber the clumped ones, and these in turn the functional ones, it is maximally unlikely that such an assembly would ever happen spontaneously. Nor would heating up the pond, striking it with lightning or the like be likely to help matters.

In the same way, we normally observe an ink spot dropped into a vat diffusing throughout the vat, not collecting back together again.

In short, to produce complex, specific organisation to achieve function, the most credible path is to assemble co-ordinated, well-matched parts according to a known good plan.)
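The counting behind the side-bar can be sketched with a toy lattice model, which is an assumption for illustration and not the TMLO calculation: a hypothetical vat of 10,000 cells holds 100 indistinguishable ink particles, at most one per cell. The number of ways W is then a binomial coefficient, S = k ln W gives the Boltzmann entropy, and ln W / ln 2 expresses the same count in bits, in the spirit of Jaynes’s “degree of ignorance” reading. Logs are used throughout so the same code still works for lattices where W itself would overflow a floating-point number. All names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant in J/K (exact in the 2019 SI)


def ln_ways(cells: int, particles: int) -> float:
    """ln W for `particles` indistinguishable particles in distinct `cells`,
    at most one per cell, so W = C(cells, particles). Toy lattice model."""
    return (math.lgamma(cells + 1)
            - math.lgamma(particles + 1)
            - math.lgamma(cells - particles + 1))


def entropy(ln_w: float) -> float:
    """Boltzmann entropy S = k ln W (J/K), taking ln W directly."""
    return K_B * ln_w


def entropy_in_bits(ln_w: float) -> float:
    """The same count as log2 W: bits needed to pin down the microstate."""
    return ln_w / math.log(2)


CELLS, INK = 10_000, 100
scattered = ln_ways(CELLS, INK)  # ink anywhere in the vat
clumped = ln_ways(INK, INK)      # ink packed into one given 100-cell spot: W = 1

print(entropy(scattered) - entropy(clumped))   # entropy given up by re-collecting (J/K)
print(entropy_in_bits(scattered - clumped))    # the same difference in bits
print(math.exp(clumped - scattered))           # chance a random arrangement is the clumped one
```

The last figure is the chance that a single random arrangement happens to be the one clumped configuration; enlarging the vat or the particle count makes it smaller still, which is the ink-spot observation in numbers.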

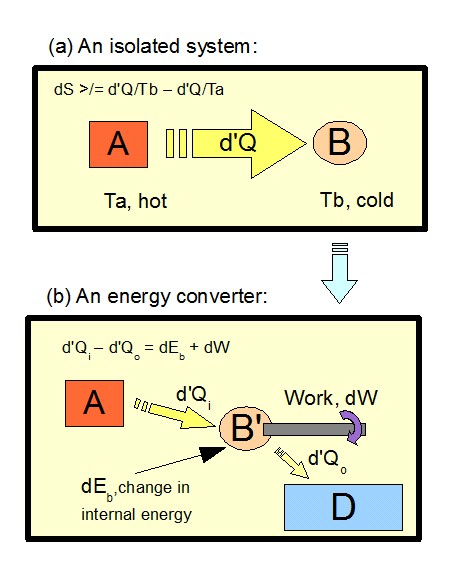

9 –> The reasonableness of the inference from observing a high-FSCO/I energy converter to its having been designed would be sharply multiplied when the device in question is part of a von Neumann self-replicating automaton [vNSR]:

Fig. B: A concept sketch of the von Neumann self-replicator [vNSR], in the form of a “clanking replicator”

____________________

10 –> Here, we see a machine that not only functions on its own behalf but has the ADDITIONAL (and very important) capacity of self-replication based on stored specifications, which requires:

(2) associated “metabolic” machines carrying out activities that as a part of their function, can provide required specific materials/parts and forms of energy for the replication facility, by using the generic resources in the surrounding environment.

11 –> Also, parts (ii), (iii) and (iv) are each necessary for, and together jointly sufficient to implement, a self-replicating machine with an integral von Neumann universal constructor.

12 –> That is, we see here an irreducibly complex set of core components that must all be present in a properly organised fashion for a successful self-replicating machine to exist. [Take just one core part out, and self-replicating functionality ceases: the self-replicating machine is irreducibly complex (IC).]
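The single-knockout test in item 12 can be written as a small procedure. In this sketch the part names, and the rule that replication needs all three core parts, are hypothetical stand-ins for parts (ii), (iii) and (iv). The procedure only reports what the supplied function test encodes, so it illustrates the bookkeeping of a knockout analysis rather than establishing irreducible complexity for any real system.

```python
from typing import Callable, FrozenSet, List

# Hypothetical stand-ins for core parts (ii), (iii) and (iv).
CORE = frozenset({"tape", "reader", "effectors"})


def replicates(parts: FrozenSet[str]) -> bool:
    """Toy function test: replication needs every core part present."""
    return CORE <= parts


def knockout_failures(parts: FrozenSet[str],
                      works: Callable[[FrozenSet[str]], bool]) -> List[str]:
    """Return, sorted, each part whose removal alone stops the function."""
    if not works(parts):
        raise ValueError("the full system must work before knockouts mean anything")
    return sorted(p for p in parts if not works(parts - {p}))


machine = CORE | {"paint"}  # "paint" is a hypothetical inessential extra part
print(knockout_failures(machine, replicates))  # ['effectors', 'reader', 'tape']
```

Removing the inessential extra part leaves replication intact, so it is not reported; removing any one core part is fatal.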

13 –> This irreducible complexity is compounded by the requirement (i) for codes, requiring organised symbols and rules to specify both steps to take and formats for storing information, and (v) for appropriate material resources and energy sources.

14 –> Immediately, we are looking at islands of organised function for both the machinery and the information in the wider sea of possible (but mostly non-functional) configurations.

15 –> In short, outside such functionally specific — thus, isolated — information-rich hot (or, “target”) zones, want of correct components and/or of proper organisation and/or co-ordination will block function from emerging or being sustained across time from generation to generation.

16 –> So, we may conclude: once the set of possible configurations of relevant parts is large enough and the islands of function are credibly sufficiently specific/isolated, it is unreasonable to expect such function to arise from chance, or from chance circumstances driving blind natural forces under the known laws of nature.
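The arithmetic behind item 16 can be sketched under an explicit assumption: configurations are drawn independently and uniformly at random, so a target zone occupying a fraction f of the space is hit at least once in N tries with probability 1 - (1 - f)^N. The 500-bit string length, target sizes, trial counts and the function name are hypothetical values chosen for illustration, not figures from the source.

```python
import math


def hit_probability(log10_space: float, log10_targets: float, trials: float) -> float:
    """Chance that `trials` independent uniform draws from 10**log10_space
    configurations hit at least one of 10**log10_targets functional ones.

    Computes p = 1 - (1 - f)**trials with f = targets / space, via log1p and
    expm1 so that astronomically small f does not simply round away.
    """
    f = 10.0 ** (log10_targets - log10_space)  # fraction of the space that functions
    if f >= 1.0:
        return 1.0
    return -math.expm1(trials * math.log1p(-f))


bits = 500
space = bits * math.log10(2)  # a 500-bit string: about 10**150.5 configurations
print(hit_probability(space, 0.0, 1e6))     # one functional configuration, a million tries
print(hit_probability(space, 100.0, 1e30))  # a generous 10**100 targets, 10**30 tries
```

Computing (1 - f)**N directly would fail here, since 1 - f rounds to exactly 1.0 in floating point when f is this small; routing f through log1p and the result through expm1 keeps the answer accurate.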

17 –> As a relevant historical footnote, the much despised and derided William Paley actually saw much of this in his Natural Theology, ch 2, where he extended his analogy of the watch to the case of additional capacity to self-replicate:

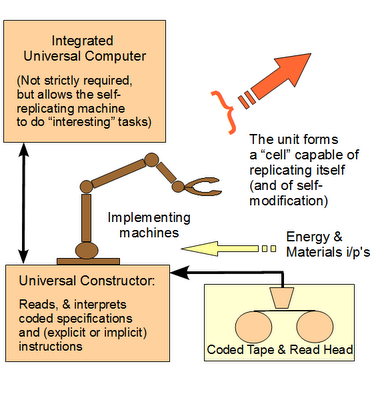

18 –> So far, the sub-argument has been on how FSCO/I, especially in a context of symbolic digital codes and algorithms, credibly points to design as its best explanation. But as Figs. C and D just below will show, our reasoning on the vNSR is directly relevant to the case of the living cell:

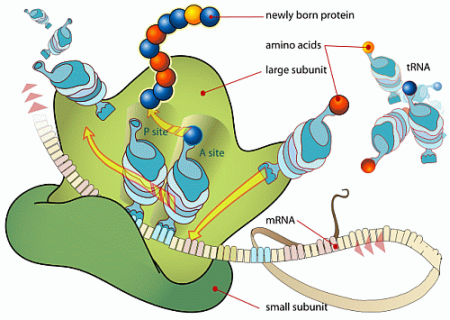

Fig. C: The protein synthesis process in the living cell, showing the source of messenger RNA, its transmission to the cytoplasm, and its use as a digital coded tape to produce proteins [Courtesy Wikimedia, under GNU. (Also, cf a medically oriented survey here.)]

Fig. D: A “close-up” of the ribosome in action during protein translation, showing the 3-letter codons fitting tRNA anticodons in the A and P sites, with the tRNAs serving as transporters of successive specified amino acids and as position-arm devices with tool-tips that “click” the successive amino acids [AAs] into position until a stop codon triggers release. [Courtesy Wikimedia under GNU.]

Clay animation video [added Dec 5, 2011]:

[youtube OEJ0GWAoSYY]

More detailed animation [added Dec 5, 2011]:

[vimeo 31830891]

Fig D.1: Videos.

________________
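The “digital coded tape” reading shown in Figs. C and D can be sketched in a few lines. This is a simplification under stated assumptions: it uses the standard genetic code, expects an uppercase RNA string over A, C, G and U, starts at the first AUG codon, and ignores the ribosome’s actual chemistry and the details of real translation initiation. The names `translate` and `CODON_TABLE` are hypothetical.

```python
BASES = "UCAG"
# Standard genetic code, codons ordered UUU, UUC, UUA, UUG, UCU, ..., GGG; '*' marks stop.
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    a + b + c: AMINO[16 * i + 4 * j + k]
    for i, a in enumerate(BASES)
    for j, b in enumerate(BASES)
    for k, c in enumerate(BASES)
}


def translate(mrna: str) -> str:
    """Read the mRNA 'tape' three letters at a time from the first AUG start codon,
    appending one-letter amino acid codes until a stop codon or the end of the tape."""
    start = mrna.find("AUG")
    if start < 0:
        return ""  # no start codon: nothing is made
    protein = []
    for pos in range(start, len(mrna) - 2, 3):
        aa = CODON_TABLE[mrna[pos:pos + 3]]
        if aa == "*":  # stop codon: release the chain
            break
        protein.append(aa)
    return "".join(protein)


print(translate("GGAUGGCCAAAUAGCC"))  # MAK
```

Here AUG (methionine) starts the reading frame, GCC and AAA add alanine and lysine, and the UAG stop codon ends the chain, mirroring the release step in Fig. D.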

19 –> Thus, we not only see the relevance of the vNSR to the living cell, but we also see how the metabolic and self-replicating facilities of the living cell deeply embed codes, step-by-step execution of instructions to achieve a functional product, and an astonishing incidence of FSCO/I. This justifies the inference, on best empirically based explanation, that the living cell is an artifact of design.

20 –> And, on our abundant experience and observation, the best explanation for a design is a designer. Such an inference from a reliable sign to its signified would still obtain simply on induction, whether or not the designer in fact possesses that elusive property called free will. (Which is why Mr Arrington, in the linked post, laid this vexed issue to one side for the sake of moving his particular argument forward.)

However, the third part of the task still remains: why do design thinkers often hold that a designer is best understood as a self-moved, initiating agent cause?

[Continued here].