First, a happy Thanksgiving.

Then, while digesting turkey etc., here is something to ponder.

One of the underlying issues in the debates over the design inference is the question of responsible, rational freedom as a key facet of intelligent action, as opposed to blind chance and/or mechanical necessity. It has surfaced again, e.g. in the WD400 thread.

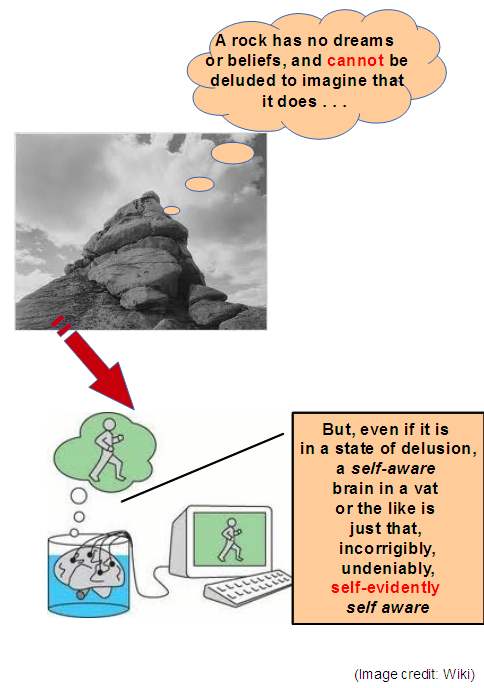

Some time back, I posed the issue in part as follows, emphasising the difference between self-aware, responsible freedom and the blindly mechanical causal chains used in computing:

Even if deluded about circumstances, a self-aware being is just that: self-evidently, incorrigibly self-aware. And a key facet of that self-awareness is responsible, rational freedom. Without it, we could not choose to follow and accept a rational case; we would just be mechanically grinding out our programming and/or hard wiring.

Like, say, a full adder circuit:

Wire it right, designate the correct voltages as 1 and 0, and it will add two bits plus a carry-in, producing a sum bit and a carry-out. Indeed, it will do so more consistently than we do.

Mis-wire it, and it won't; the same holds if the voltage-state assignments are wrong. But the circuit neither knows nor cares that it is performing arithmetic; it simply responds to inputs according to the mechanical behaviour of the given circuitry.
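To make the point concrete, here is a minimal sketch of a full adder modelled at the gate level, assuming ideal gates represented by Python's bitwise operators on 0/1 integers. The function names, and the particular fault in `miswired_full_adder` (the carry OR gate replaced by an AND), are hypothetical illustrations, not a model of any specific chip. Nothing in either function "knows" it is adding; the correct version agrees with arithmetic only because of how its gates are connected.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Gate-level full adder: returns (sum, carry_out) for bits a, b, carry_in."""
    partial = a ^ b                              # first XOR gate
    s = partial ^ carry_in                       # second XOR gate gives the sum bit
    carry_out = (a & b) | (partial & carry_in)   # two AND gates feeding an OR gate
    return s, carry_out


def miswired_full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Same gates, but the carry OR is wrongly wired as an AND (hypothetical fault)."""
    partial = a ^ b
    s = partial ^ carry_in
    carry_out = (a & b) & (partial & carry_in)   # wrong gate: carry is now always 0
    return s, carry_out


if __name__ == "__main__":
    print("a b cin | sum cout | miswired cout")
    for a in (0, 1):
        for b in (0, 1):
            for cin in (0, 1):
                s, cout = full_adder(a, b, cin)
                _, bad = miswired_full_adder(a, b, cin)
                # The correct wiring satisfies a + b + cin == 2*cout + sum for every input
                assert a + b + cin == 2 * cout + s
                print(f"{a} {b}  {cin}  |  {s}   {cout}   |  {bad}")
```

For inputs 1, 1, 0 the correct circuit gives a carry of 1, while the mis-wired one gives 0; both simply propagate signals through their gates.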

That is the context of my comment at 79 in the thread:

Z, 73:

mohammadnursyamsu: All current programs on computers work in a forced way, there is no freedom in it, the flexibility does not increase the freedom one bit.

[Z:] All you have done is introduce yet another term, “freedom”, which is not well-defined in this context.

Actually, not.

Absent responsible, rational freedom — exactly what a priori evolutionary materialist scientism cannot account for — you could not actually compose comment 73 above.

In short, freedom is always there once the mind is brought to bear, and without it we cannot be rationally creative.

And per observation, computation is a blind, mechanical cause-effect process imposed on suitably organised substrates by mind. In fact, a fair summary of decision-node-based processing is that coded algorithms, reduced to machine code, act on suitably coded inputs and stored data by means of a carefully designed and developed . . . troubleshooting in a multi-fault environment required . . . physical machine, to generate desired outputs. At least, once debugging is sufficiently complete. (Debugging is itself an extremely complex, highly intuitive, non-algorithmic procedure critically dependent on creative, responsible, rational freedom. [This crucial aspect tends to get overlooked in discussions of finished programs and processing.])

There really is a wizard behind the curtain.

Freedom, responsible rational freedom, is not to be dismissed as a vague, unnecessary and suspect addition to the discussion; it is the basis on which we can think at all, and ground and accept conclusions on their merits, instead of being a glorified full adder circuit.

Where, of course, inserting decision nodes amounts to this: set up some operation that yields an intermediate result, a test condition. In turn, that sets or clears a flag bit in a flag register. On one alternative, go to chain A of onward instructions; on the other, go to chain B. And this can be set up as a loop.
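The decision-node mechanism just described can be sketched as a toy interpreter. The instruction set, register names and opcodes below (`LOAD`, `ADD`, `DEC`, `BNE`, `HALT`) are hypothetical and only loosely inspired by real MPUs; the point is that the "decision" is nothing more than a flag bit being tested and the program counter being reloaded. The sample program sums 5 + 4 + 3 + 2 + 1 by looping until a decrement sets the zero flag.

```python
# Hypothetical toy instruction set, not any real MPU's.
PROGRAM = [
    ("LOAD", "acc", 0),    # 0: acc <- 0
    ("LOAD", "n", 5),      # 1: n <- 5
    ("ADD", "acc", "n"),   # 2: acc <- acc + n          (chain A: loop body)
    ("DEC", "n"),          # 3: n <- n - 1; sets zero flag if n == 0
    ("BNE", 2),            # 4: if zero flag is clear, branch back to 2
    ("HALT",),             # 5: chain B: fall through and stop
]


def run(program):
    """Execute the toy program mechanically and return the final registers."""
    regs = {"acc": 0, "n": 0}
    zero_flag = 0          # one-bit flag register
    pc = 0                 # program counter
    while True:
        op, *args = program[pc]
        pc += 1
        if op == "LOAD":
            regs[args[0]] = args[1]
        elif op == "ADD":
            regs[args[0]] += regs[args[1]]
        elif op == "DEC":
            regs[args[0]] -= 1
            # The test condition: set or clear the flag bit
            zero_flag = 1 if regs[args[0]] == 0 else 0
        elif op == "BNE":
            if zero_flag == 0:
                pc = args[0]   # take chain A (loop back)
            # otherwise fall through to chain B
        elif op == "HALT":
            return regs
        else:
            raise ValueError(f"unknown opcode {op}")
```

Calling `run(PROGRAM)` returns `{'acc': 15, 'n': 0}`; the interpreter never does anything but test a bit and pick the next address.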

First, the classic 6800 MPU as an example:

Let me add [Nov 28] a more elaborate diagram of a generalised microprocessor and its peripheral components, noting that an adder is a key component of an Arithmetic and Logic Unit, ALU . . . laying out the mechanisms and machinery that, properly organised, will execute algorithms:
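As a rough illustration of how an ALU's adder can be assembled from full adders, here is a sketch of a ripple-carry adder, one simple design among several (carry-lookahead adders, for instance, are faster). It assumes unsigned operands and an 8-bit width, chosen to match an 8-bit MPU such as the 6800; the function names are hypothetical, and real ALUs also handle status flags, subtraction and logic operations not shown here.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """One-bit full adder: returns (sum, carry_out)."""
    partial = a ^ b
    return partial ^ carry_in, (a & b) | (partial & carry_in)


def ripple_carry_add(x: int, y: int, width: int = 8) -> tuple[int, int]:
    """Add two unsigned width-bit numbers bit by bit; returns (sum, final carry_out)."""
    carry = 0
    result = 0
    for i in range(width):             # least significant bit first
        a = (x >> i) & 1               # pick out bit i of each operand
        b = (y >> i) & 1
        s, carry = full_adder(a, b, carry)   # carry "ripples" into the next stage
        result |= s << i
    return result, carry
```

For example, `ripple_carry_add(200, 100)` gives `(44, 1)`: 300 does not fit in 8 bits, so the result wraps and the final carry-out is set.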


Next, the structured programming patterns that can implement any computing task:
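The structured programming patterns in question are usually given as sequence, selection and iteration; roughly, the structured program theorem of Böhm and Jacopini shows that these suffice to express any computable function. Here is a minimal sketch using all three, with the Collatz step count chosen purely as an arbitrary example task (the function name is hypothetical). As with the flag-and-branch picture, each "decision" is a mechanical test of a condition.

```python
def count_steps_to_one(n: int) -> int:
    """Collatz step count, built only from sequence, selection and iteration."""
    if n < 1:
        raise ValueError("n must be a positive integer")
    steps = 0                  # SEQUENCE: statements executed one after another
    while n != 1:              # ITERATION: repeat while the test condition holds
        if n % 2 == 0:         # SELECTION: branch on a test condition
            n = n // 2
        else:
            n = 3 * n + 1
        steps += 1
    return steps
```

For instance, `count_steps_to_one(6)` returns `8` (6, 3, 10, 5, 16, 8, 4, 2, 1).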

It should be clear that no actual decisions are being made; only pre-programmed sequences of mechanical steps are taken, based on the designer’s prior intent. (Of course, one problem is that a computer will do exactly what it is programmed to do, whether or not that makes sense.)

As a related point, trying to derive rational, contemplative, self-aware mindedness from computation is like trying to get north by heading due west.

Samuel Johnson, reportedly responding to the enthusiasm for mechanistic thinking in his day, put it aptly: “All theory is against the freedom of the will; all experience for it.” (Nor does this materially change if we inject chance processes, as such noise is no closer to being responsible and rational.)

If we are wise, we will go with the experience. END