A key diversion used by objectors to a design inference grounded in empirically tested, reliable markers of design as a causal factor is to switch topics and debate the designer instead.

Often, this then bleeds over into assertions or suggestions on “god of the gaps” fallacies and even accusations of ID being “Creationism in a cheap tuxedo” artificially constructed to try to evade a US Supreme Court ruling of 1987 on banning the teaching of Creationism in schools.

Okay, first, the series so far:

FYI-FTR: Part 4, What about Paley’s self-replicating watch thought exercise?

FYI-FTR: Part 5, on evolutionary materialism, can a designer even exist?

Back on topic, let’s start from EL’s typo-fixed form of a key comment:

EL: >>I think [the ID case] is undermined by [ETA lack of any] any evidence for the putative designer – no hypothesis about what the designer was trying to do, how she was doing it, what her capacities were, etc.>>

This, we can closely follow up with some clips from TSZ:

EL: >> My argument is simply that if you want to infer a designer as the cause of an apparent design, then you need to make some hypotheses about how, how, where and with what, otherwise you can’t subject your inference to any kind of test.>>

EL: >>Can you give me some arguments for the existence for a god of some sort?>>

AF/aka AS: >>ID is a dying movement. The whole point of the “Designer” routine was to circumvent the US separation of Church and State laws, at which it spectacularly failed. The solution is to remain vigilant as to the moves the fundamentalist right-wing authoritarian Christian cabal might try next to usurp democracy. And in parallel let’s maintain open and honest dialogue wherever possible.>>

Gregory:>>ID cannot be a ‘strictly scientific’ theory if it in *any* way deals with a capitalised ‘Intelligent Designer’ that cannot be studied. And it cannot avoid having to face ‘intelligent designers’ if it is really a theory of lowercase ‘intelligent design’. Which is why IDists religiously avoid the massive, credible, contemporary present and future-looking field of ‘design thinking’ that has nothing in common with its OoL ‘historical science’. Most ‘design theorists’ literally (I’ve witnessed it) laugh at IDism as much as anyone here at TSZ!>>

hotshoe:>>[Re, Mung;] “Most people I can think of would associate the fine-tuning argument with design. I’m surprised that you do not.”

[HS:] Well, I’m not “most people” and I don’t know your “most people” but I disagree with your association. That’s not what “most people” at UD mean when they claim there is a big-D Designer (although simultaneously they do believe the Designer is their christian god,who they also believe is the Fine Tuner). They are speaking specifically of the Designer of Life — secularists correctly understand their political motivation to sneak religious concepts of a “designer” into US public education — but they are right, conceptually, that it is possible to separate the two . . . >>

As fair comment at the outset, it is hard to escape the conclusion that this insistent stratagem (one, long maintained in the teeth of repeated, cogent correction) looks a lot like a red herring diversion, dragged away to a strawman caricature doused in motive-mongering ad hominems and set alight to cloud, confuse, poison and polarise the atmosphere for discussion. Complete, with grand conspiracy theorism about right-wing fundy political takeovers.

That is going to make it quite difficult to see and think clearly, but such makes clarity even more necessary.

A simple first step to clarity, is to acknowledge that design is, in essence, intelligently directed configuration, whether in intent or in representation or plan or model, or finally in action.

Which, already generically answers as to method: intelligent, knowledgeable and skilled purpose in action configures components to form a unified, functional whole that works based on the composition, arrangement, coupling and interaction of parts in a nodes-arcs framework, starting with say the mesh of a rifle scope:

Similarly, consider a gear:

. . . and a gear train:

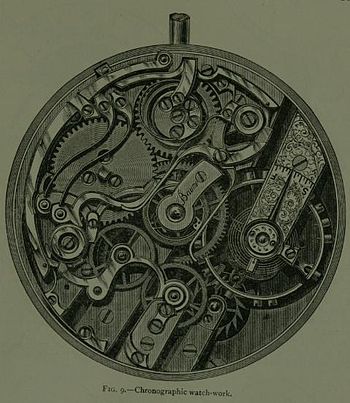

. . . such as appears in Paley’s example (recall, this includes the self-replicating watch thought exercise in Ch 2 of Nat Theol), the watch:

Particular design and development techniques may vary, as there is more than one way to skin a cat-fish. But, the general framework is obvious.

Wikipedia, testifying against known ideological inclinations, is helpful:

>>Design is the creation of a plan or convention for the construction of an object or a system (as in architectural blueprints, engineering drawings, business processes, circuit diagrams and sewing patterns).[1] Design has different connotations in different fields (see design disciplines below). In some cases the direct construction of an object (as in pottery, engineering, management, cowboy coding and graphic design) is also considered to be design.

More formally design has been defined as follows.

- (noun) a specification of an object, manifested by an agent, intended to accomplish goals, in a particular environment, using a set of primitive components, satisfying a set of requirements, subject to constraints;

- (verb, transitive) to create a design, in an environment (where the designer operates)[2]

Another definition for design is a roadmap or a strategic approach for someone to achieve a unique expectation. It defines the specifications, plans, parameters, costs, activities, processes and how and what to do within legal, political, social, environmental, safety and economic constraints in achieving that objective.[3]

Here, a “specification” can be manifested as either a plan or a finished product, and “primitives” are the elements from which the design object is composed . . . >>

Leading Intelligent Design theorist (and the capitalisation here only denotes that I am formally citing the name of the school of thought, Gregory et al), William Dembski summarises in one of his main works:

>>. . . (1) A designer conceives a purpose. (2) To accomplish that purpose, the designer forms a plan. (3) To execute the plan, the designer specifies building materials and assembly instructions. (4) Finally, the designer or some surrogate applies the assembly instructions to the building materials. (No Free Lunch, p. xi. HT: ENV.)>>

That is, it is a real, commonplace, well recognised process of causation that results in configured artifacts, ranging from:

(a) the s-t-r-i-n-g data structures in sentences such as this one to

(b) the case I continue to pose as iconic — in the teeth of “we don’t want to see this again” — an Abu 6500 C3 fishing reel (which reveals a typical nodes-arcs pattern of interacting components but is far simpler than a watch movement . . . and vastly more simple than a living cell):

. . . to something like:

(c) the process-flow network of an oil refinery:

As for the “intelligent” part, this is meant to emphasise the role of the designing agent in the process, as there is a tendency to use design in a sense that in context specifically excludes actual intelligently directed configuration. Where, patently, we exemplify designing intelligences, but cannot reasonably be held to exhaust the list of suitable candidate designers.

And where, too, we must insist that there is not only the contrast often used by objectors to design thought in science:

(a) natural vs supernatural

. . . but also, from the days of Plato in The Laws Bk X, c 360 BC (as has frequently been pointed out to objectors in and around UD):

(b) the natural [typ., tracing to blind chance and/or mechanical necessity] vs the ART-ificial [tracing, typ. to acts of design]

(To see the commonplace validity of this, simply look at the label of processed food packages in your kitchen, to see where natural and artificial ingredients, colourants etc are commonly distinguished. It is amazing to see how this point still has to be highlighted, after so many years.)

Now, too, several years ago, Dembski explained the basic point of the intelligent design project (and, Gregory et al, this is not capitalised, to denote that this is a generic project . . . ) as an exercise in inductively grounded, scientific research:

>>We know from experience that intelligent agents build intricate machines that need all their parts to function [–> i.e. he is specifically discussing “irreducibly complex” objects, structures or processes for which there is a core group of parts all of which must be present and properly arranged for the entity to function (cf. here, here and here)], things like mousetraps and motors. And we know how they do it — by looking to a future goal and then purposefully assembling a set of parts until they’re a working whole. Intelligent agents, in fact, are the one and only type of thing we have ever seen doing this sort of thing from scratch. In other words, our common experience provides positive evidence of only one kind of cause able to assemble such machines. It’s not electricity. It’s not magnetism. It’s not natural selection working on random variation. It’s not any purely mindless process. It’s intelligence . . . .

When we attribute intelligent design to complex biological machines that need all of their parts to work, we’re doing what historical scientists do generally. Think of it as a three-step process: (1) locate a type of cause active in the present that routinely produces the thing in question; (2) make a thorough search to determine if it is the only known cause of this type of thing; and (3) if it is, offer it as the best explanation for the thing in question.

[[William Dembski and Jonathan Witt, Intelligent Design Uncensored: An Easy-to-Understand Guide to the Controversy, pp. 20-21, 53 (InterVarsity Press, 2010). HT, CL of ENV & DI.]>>

Stephen Meyer, another leading design theorist (Gregory et al, ditto), similarly noted:

>>The central argument of my book [Signature in the Cell] is that intelligent design—the activity of a conscious and rational deliberative agent—best explains the origin of the information necessary to produce the first living cell. I argue this because of two things that we know from our uniform and repeated experience, which following Charles Darwin I take to be the basis of all scientific reasoning about the past. First, intelligent agents have demonstrated the capacity to produce large amounts of functionally specified information (especially in a digital form). Second, no undirected chemical process has demonstrated this power. Hence, intelligent design provides the best—most causally adequate—explanation for the origin of the information necessary to produce the first life from simpler non-living chemicals. In other words, intelligent design is the only explanation that cites a cause known to have the capacity to produce the key effect in question . . . . In order to [[scientifically refute this inductive conclusion] Falk would need to show that some undirected material cause has [[empirically] demonstrated the power to produce functional biological information apart from the guidance or activity a designing mind. Neither Falk, nor anyone working in origin-of-life biology, has succeeded in doing this . . . .

The central problem facing origin-of-life researchers is neither the synthesis of pre-biotic building blocks (which Sutherland’s work addresses) or even the synthesis of a self-replicating RNA molecule (the plausibility of which Joyce and Tracey’s work seeks to establish, albeit unsuccessfully . . . [[Meyer gives details in the linked page]). Instead, the fundamental problem is getting the chemical building blocks to arrange themselves into the large information-bearing molecules (whether DNA or RNA) . . . .

For nearly sixty years origin-of-life researchers have attempted to use pre-biotic simulation experiments to find a plausible pathway by which life might have arisen from simpler non-living chemicals, thereby providing support for chemical evolutionary theory. While these experiments have occasionally yielded interesting insights about the conditions under which certain reactions will or won’t produce the various small molecule constituents of larger bio-macromolecules, they have shed no light on how the information in these larger macromolecules (particularly in DNA and RNA) could have arisen. Nor should this be surprising in light of what we have long known about the chemical structure of DNA and RNA. As I show in Signature in the Cell, the chemical structures of DNA and RNA allow them to store information precisely because chemical affinities between their smaller molecular subunits do not determine the specific arrangements of the bases in the DNA and RNA molecules. Instead, the same type of chemical bond (an N-glycosidic bond) forms between the backbone and each one of the four bases, allowing any one of the bases to attach at any site along the backbone, in turn allowing an innumerable variety of different sequences. This chemical indeterminacy is precisely what permits DNA and RNA to function as information carriers. It also dooms attempts to account for the origin of the information—the precise sequencing of the bases—in these molecules as the result of deterministic chemical interactions . . . .

[[W]e now have a wealth of experience showing that what I call specified or functional information (especially if encoded in digital form) does not arise from purely physical or chemical antecedents [[–> i.e. by blind, undirected forces of chance and necessity]. Indeed, the ribozyme engineering and pre-biotic simulation experiments that Professor Falk commends to my attention actually lend additional inductive support to this generalization. On the other hand, we do know of a cause—a type of cause—that has demonstrated the power to produce functionally-specified information. That cause is intelligence or conscious rational deliberation. As the pioneering information theorist Henry Quastler once observed, “the creation of information is habitually associated with conscious activity.” And, of course, he was right. Whenever we find information—whether embedded in a radio signal, carved in a stone monument, written in a book or etched on a magnetic disc—and we trace it back to its source, invariably we come to mind, not merely a material process. Thus, the discovery of functionally specified, digitally encoded information along the spine of DNA, provides compelling positive evidence of the activity of a prior designing intelligence. This conclusion is not based upon what we don’t know. It is based upon what we do know from our uniform experience about the cause and effect structure of the world—specifically, what we know about what does, and does not, have the power to produce large amounts of specified information . . . .

[[In conclusion,] it needs to be noted that the [[now commonly asserted and imposed limiting rule on scientific knowledge, the] principle of methodological naturalism [[ that scientific explanations may only infer to “natural[[istic] causes”] is an arbitrary philosophical assumption, not a principle that can be established or justified by scientific observation itself. Others of us, having long ago seen the pattern in pre-biotic simulation experiments, to say nothing of the clear testimony of thousands of years of human experience, have decided to move on. We see in the information-rich structure of life a clear indicator of intelligent activity and have begun to investigate living systems accordingly. If, by Professor Falk’s definition, that makes us philosophers rather than scientists, then so be it. But I suspect that the shoe is now, instead, firmly on the other foot. [[Meyer, Stephen C: Response to Darrel Falk’s Review of Signature in the Cell, SITC web site, 2009. (Emphases and parentheses added.)]>>

The stunning contrast between the actual empirically grounded inference to best current scientific explanation being made and the toxically loaded, burning strawman caricatures could hardly be plainer.

The point is plain, that design often leaves highly characteristic signs or markers in designed objects, especially the sort of functionally specific, complex [often irreducibly so] organisation and associated information shown in the examples above, FSCO/I and IC for short. Where, in many cases we find string data structures bearing digital, coded and/or algorithmic, functionally specific information, dFSCI for short.

Where, we may also follow Dembski and discuss the wider generic pattern, specified complexity and/or complex specified information, CSI for short. Which, as he notes, is always cashed out functionally in the world of biological life.

In the biological world, we can start with protein synthesis, which is based on algorithmic codes:

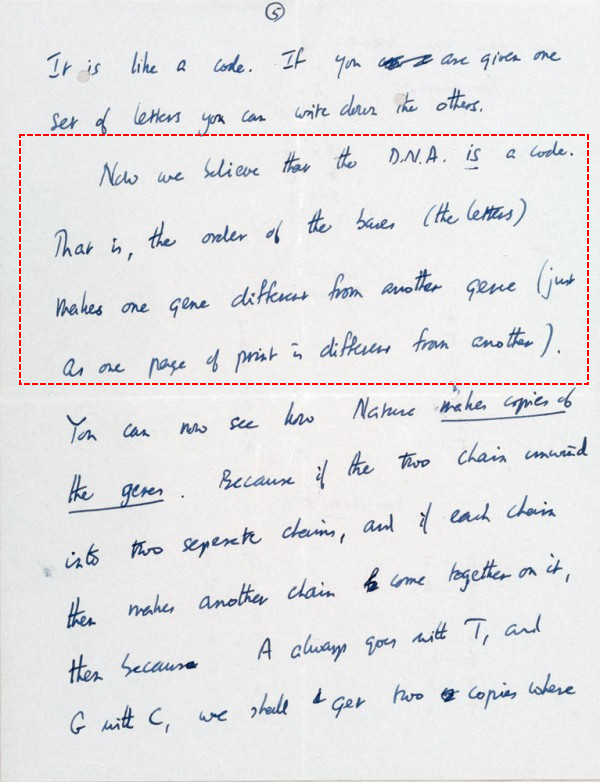

. . . noting, with Sir Francis Crick in his famous letter to his son on March 19, 1953:

We may then broaden our overview to the wider process-flow nodes and arcs metabolic network of the cell (noting that protein synthesis is the little bit in the top left corner just below):

Why do I keep pointing to nodes-arcs patterns and wiring/assembly diagrams?

Because, that is a key part of the bridge between information and functionally specific complex organisation, as that notorious IDiot — not! — J S Wicken noted in 1979 (as has of course been repeatedly highlighted but studiously ignored in insistence to play at abusive, toxically loaded burning strawman rhetorical games):

>>‘Organized’ systems are to be carefully distinguished from ‘ordered’ systems. Neither kind of system is ‘random,’ but whereas ordered systems are generated according to simple algorithms [[i.e. “simple” force laws acting on objects starting from arbitrary and common- place initial conditions] and therefore lack complexity, organized systems must be assembled element by element according to an [[originally . . . ] external ‘wiring diagram’ with a high information content . . . Organization, then, is functional complexity and carries information. It is non-random by design or by selection, rather than by the a priori necessity of crystallographic ‘order.’ [[“The Generation of Complexity in Evolution: A Thermodynamic and Information-Theoretical Discussion,” Journal of Theoretical Biology, 77 (April 1979): p. 353, of pp. 349-65. (Emphases and notes added. Nb: “originally” is added to highlight that for self-replicating systems, the blue print can be built-in.)]>>

Similarly, in 1973, that IDiot — not! — Leslie Orgel went on pivotal record:

>>. . . In brief, living organisms [–> note, functional context . . . ] are distinguished by their specified complexity. Crystals are usually taken as the prototypes of simple well-specified structures, because they consist of a very large number of identical molecules packed together in a uniform way. Lumps of granite or random mixtures of polymers are examples of structures that are complex but not specified. The crystals fail to qualify as living because they lack complexity; the mixtures of polymers fail to qualify because they lack specificity . . . .

These vague ideas can be made more precise by introducing the idea of information. Roughly speaking, the information content of a structure is the minimum number of instructions needed to specify the structure. [–> this is of course equivalent to the string of yes/no questions required to specify the relevant “wiring diagram” for the set of functional states, T, in the much larger space of possible clumped or scattered configurations, W, as Dembski would go on to define in NFL in 2002, also cf here, here and here (with here on self-moved agents as designing causes).] One can see intuitively that many instructions are needed to specify a complex structure. [–> so if the q’s to be answered are Y/N, the chain length is an information measure that indicates complexity in bits . . . ] On the other hand a simple repeating structure can be specified in rather few instructions. [–> do once and repeat over and over in a loop . . . ] Complex but random structures, by definition, need hardly be specified at all . . . . Paley was right to emphasize the need for special explanations of the existence of objects with high information content, for they cannot be formed in nonevolutionary, inorganic processes. [The Origins of Life (John Wiley, 1973), p. 189, p. 190, p. 196. Of course, that immediately highlights OOL, where the required self-replicating entity is part of what has to be explained (cf. Paley here), a notorious conundrum for advocates of evolutionary materialism; one, that has led to mutual ruin documented by Shapiro and Orgel between metabolism first and genes first/RNA world schools of thought, cf here and note here. Behe would go on to point out that irreducibly complex structures are not credibly formed by incremental evolutionary processes and Menuge et al would bring up serious issues for the suggested exaptation alternative, cf. his challenges C1 – 5 in the just linked.
Finally, Dembski highlights that CSI comes in deeply isolated islands T in much larger configuration spaces W, for biological systems functional islands. That puts up serious questions for origin of dozens of body plans reasonably requiring some 10 – 100+ mn bases of fresh genetic information to account for cell types, tissues, organs and multiple coherently integrated systems. Wicken’s remarks a few years later as already were cited now take on fuller force in light of the further points from Orgel at pp. 190 and 196 . . . ]>>
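Orgel's point that a simple repeating structure "can be specified in rather few instructions" can be illustrated with a rough sketch. The code below is only a proxy: it uses zlib's compressed size as a crude upper bound on description length, which is my assumption for illustration and not a measure used by Orgel, Wicken or Dembski. It shows the ordered-versus-complex contrast only; it says nothing about specificity or function, which would need a separate criterion.

```python
import random
import zlib


def compressed_bits(text: str) -> int:
    """Crude upper bound on description length: size of the zlib output, in bits."""
    return 8 * len(zlib.compress(text.encode("ascii"), 9))


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 2000                # length of each illustrative string (hypothetical choice)

# "Ordered": do once and repeat over and over in a loop.
ordered = "AB" * (n // 2)
# "Complex": no repeating pattern for the compressor to exploit.
scrambled = "".join(rng.choice("ACGT") for _ in range(n))

for label, s in (("ordered", ordered), ("scrambled", scrambled)):
    print(f"{label:10s} raw={8 * len(s)} bits  compressed={compressed_bits(s)} bits")
```

The ordered string shrinks to a small fraction of its raw size, while the scrambled one cannot drop much below about two bits per character, which is the chain-length-in-bits idea in the annotation above.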

That is, we see here that FSCO/I imposes a needle in haystack search challenge that then becomes utterly implausible beyond a modest threshold of 500 – 1,000 structured y/n descriptive q chain length bits of FSCO/I:
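To put rough numbers on the 500 – 1,000 bit threshold, here is a minimal sketch of the arithmetic, assuming blind sampling with no repeats. The trial budget `LOG10_TRIALS` is a hypothetical figure chosen only for illustration; it is not taken from the text, and the sketch does not itself say how functional configurations are distributed in the space.

```python
from math import log10


def config_space_size(bits: int) -> int:
    """Number of distinct configurations describable by a chain of `bits` yes/no answers."""
    return 2 ** bits


def log10_fraction_sampled(bits: int, log10_trials: float) -> float:
    """log10 of the fraction of the space that 10**log10_trials distinct trials could cover."""
    return log10_trials - bits * log10(2)


# HYPOTHETICAL trial budget (10**100 trials), for illustration only.
LOG10_TRIALS = 100.0

for bits in (500, 1000):
    digits = len(str(config_space_size(bits)))
    frac = log10_fraction_sampled(bits, LOG10_TRIALS)
    print(f"{bits} bits -> space size has {digits} decimal digits; "
          f"log10(fraction sampled) = {frac:.1f}")
```

Changing `LOG10_TRIALS` shows how sensitive the conclusion is to the assumed search resources, which is where the substantive argument lies.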

This is directly relevant to OOL, where we see that cellular self-replication is dependent on a von Neumann self-replication [vNSR] facility, one that uses coded information and associated complex execution nanomachinery. Indeed, we may draw attention to Mignea’s recent presentation to highlight some of the relevant challenges:

Indeed, the underlying FSCO/I origin issue extends to origin of body plans [OOBP], once we see the issue of active information and challenges of hill climbing:

In short, there is good empirical and analytical reason to hold with Meyer, as a scientific, inductive inference backed up by the needle in haystack analysis:

>>intelligent design provides the best—most causally adequate—explanation for the origin of the information necessary to produce the first life from simpler non-living chemicals. In other words, intelligent design is the only explanation that cites a cause known to have the capacity to produce the key effect in question . . . . the discovery of functionally specified, digitally encoded information along the spine of DNA, provides compelling positive evidence of the activity of a prior designing intelligence. This conclusion is not based upon what we don’t know. It is based upon what we do know from our uniform experience about the cause and effect structure of the world—specifically, what we know about what does, and does not, have the power to produce large amounts of specified information . . .>>

Or, illustrating:

An apt summary by Dennis O’Neil:

>>The linear sequence of base pairs along the length of a DNA molecule is the genetic code for the assembly of particular amino acids to make specific types of proteins. Therefore, a gene is essentially a recipe consisting of a sequence of some of these base pairs. The sequence is usually not continuous but is in several different sections of a DNA molecule. Sections of the same gene can be assembled in different ways resulting in recipes for different kinds of proteins. This “alternative splicing” process is common, occurring in 92-94% of human genes.

Only 1.1-1.5% of the approximately 3 billion base pairs in human DNA actually code for proteins. These meaningful code sequences are called exons. The remaining 98+% of our DNA base pairs were in the past thought to consist merely of genetic junk, including meaningless code section duplications and ancient remnants of parasitic DNA that invaded our ancestors’ cells many millions of years ago. However, it is now becoming clear that much of this “junk” actually has important functions. These non-protein coding sections are referred to as introns. At least 80% of the intron regions of human DNA contain switches to turn the genes on and off. There are at least 4 million of these switches. Many of them are involved in monitoring and controlling cell functions throughout the body. Some also may be involved in a wide range of diseases including autoimmune responses (multiple sclerosis, lupus, rheumatoid arthritis, Crohn’s disease, and celiac disease).>>
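The figures in O'Neil's summary can be turned into rough numbers. The sketch below takes the genome size and coding percentages from the quote; the two-bits-per-base figure is my added assumption (four possible bases, treated as equally likely), so it gives raw storage capacity only and not a measure of functional information.

```python
from math import log2

GENOME_BP = 3_000_000_000         # "approximately 3 billion base pairs" (from the quote)
CODING_FRACTION = (0.011, 0.015)  # "1.1-1.5%" (from the quote)
BITS_PER_BASE = log2(4)           # ASSUMPTION: 4 equiprobable bases -> 2 bits per base

# Range of protein-coding base pairs implied by the quoted percentages.
coding_bp = tuple(round(GENOME_BP * f) for f in CODING_FRACTION)

# Raw capacity of the whole sequence and of the coding portion, in bits.
raw_capacity_bits = GENOME_BP * BITS_PER_BASE
coding_capacity_bits = tuple(bp * BITS_PER_BASE for bp in coding_bp)

print("coding base pairs:", coding_bp)
print("raw capacity, whole genome (bits):", raw_capacity_bits)
print("raw capacity, coding portion (bits):", coding_capacity_bits)
```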

This already directly implies that we have a code-oriented digitally coded communication system in the cell, fitting the generic framework:

. . . and using coded information such as we can see from the canonical genetic code in RNA form (where, some two dozen variants are known):

. . . much as has been illustrated by Hubert Yockey, based on the classic Shannon-Weaver model from 1948 on:

It can be fairly stated that the degree of functionally specific, irreducibly complex co-ordinated organisation and associated protocols and prescriptive information required to create such a system firmly place design on the table as a serious candidate explanation. Indeed, as the only known adequate cause. And this in a context where blind watchmaker needle in haystack search on blind chance and/or mechanical necessity becomes increasingly implausible.

Especially, where codes and algorithms using the codes are a clear manifestation of symbolisation, abstraction, purpose and frankly language.
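The table-lookup character of the canonical genetic code can be sketched in a few lines. This is a toy: `CODON_TABLE` holds only a small subset of the 64 standard codons, the function name `translate` is hypothetical, and real translation involves ribosomes, tRNAs and much else that a dictionary lookup does not model.

```python
from typing import List

# Small SUBSET of the standard genetic code (RNA form), for illustration only.
CODON_TABLE = {
    "AUG": "Met",  # also the usual start codon
    "UUU": "Phe", "UUC": "Phe",
    "GGC": "Gly", "GCU": "Ala",
    "UGG": "Trp", "AAA": "Lys", "GAA": "Glu",
    "UAA": "STOP", "UAG": "STOP", "UGA": "STOP",
}


def translate(mrna: str) -> List[str]:
    """Read codons three bases at a time from the first AUG until a stop codon."""
    start = mrna.find("AUG")
    if start < 0:
        return []  # no start codon, nothing to translate
    peptide = []
    for i in range(start, len(mrna) - 2, 3):
        codon = mrna[i:i + 3]
        if codon not in CODON_TABLE:
            raise KeyError(f"codon {codon} is not in this toy subset")
        residue = CODON_TABLE[codon]
        if residue == "STOP":
            break
        peptide.append(residue)
    return peptide


print(translate("GGAUGUUUGGCAAAUGGUAAGC"))
```

The example string has two leading bases before the start codon and two trailing bases after the stop codon, so the reading frame is set by the first `AUG` rather than by position zero.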

When something like this is on the table, there is no need whatsoever to resort to grand conspiracy theorism. The issue is inference to best current explanation, and a priori ideological impositions, as with Lewontin’s notorious example in the January 1997 NYRB review of Sagan’s last book, do not properly answer the case (indeed, such puts a very different cast on the toxically loaded burning strawman rhetoric we have seen for years . . . much of it is obviously turnabout, projective “he hit back first” accusation by advocates of a priori evolutionary materialist scientism):

>> the problem is to get them [= hoi polloi] to reject irrational and supernatural explanations of the world, the demons that exist only in their imaginations, and to accept a social and intellectual apparatus, Science, as the only begetter of truth [–> NB: this is a knowledge claim about knowledge and its possible sources, i.e. it is a claim in philosophy not science; it is thus self-refuting]. . . .

It is not that the methods and institutions of science somehow compel us to accept a material explanation of the phenomenal world, but, on the contrary, that we are forced by our a priori adherence to material causes [–> another major begging of the question . . . ] to create an apparatus of investigation and a set of concepts that produce material explanations, no matter how counter-intuitive, no matter how mystifying to the uninitiated. Moreover, that materialism is absolute [–> i.e. here we see the fallacious, indoctrinated, ideological, closed mind . . . ], for we cannot allow a Divine Foot in the door. [From: “Billions and Billions of Demons,” NYRB, January 9, 1997. Bold emphasis and notes added. If you have been led to imagine that this is “quote mined” kindly read the linked fuller annotated cite, and also the several following cases. The kulturkampf is real.] >>

In short, we see here a direct implication, that there is an overwhelming impression of design in the natural world, and it is being institutionally suppressed by lab coat clad ideological imposition of a priori, evolutionary materialist scientism. No wonder, Philip Johnson classically replied in First Things, that November:

>>For scientific materialists the materialism comes first; the science comes thereafter. [Emphasis original] We might more accurately term them “materialists employing science.” [–> or, more colourfully, materialists dressed up in lab coats] And if materialism is true, then some materialistic theory of evolution has to be true simply as a matter of logical deduction, regardless of the evidence. That theory will necessarily be at least roughly like neo-Darwinism, in that it will have to involve some combination of random changes and law-like processes capable of producing complicated organisms that (in Dawkins’ words) “give the appearance of having been designed for a purpose.”

. . . . The debate about creation and evolution is not deadlocked . . . Biblical literalism is not the issue. The issue is whether materialism and rationality are the same thing. Darwinism is based on an a priori commitment to materialism, not on a philosophically neutral assessment of the evidence. Separate the philosophy from the science, and the proud tower collapses. [Emphasis added.] [The Unraveling of Scientific Materialism, First Things, 77 (Nov. 1997), pp. 22 – 25.]>>

But what about the designer (or, should that be: The Designer? . . . )?

It should be patently clear that an empirically grounded inference to design as relevant causal factor on tested reliable observed signs such as FSCO/I, is not to be equated to an inference to a designer, or to the nature of that designer; apart from, the common-sense point that contrivance requires a contriver and design a designer with adequate intelligence, skill, means and opportunity.

We routinely infer design from text, arson from well known signs, and burglary from its signs. In so doing, we distinguish act from actor, and recognise that once an actor is possible, it is reasonable to infer intelligently directed configuration as causal process on appropriate signs.

Police Departments then routinely proceed to try to identify a short list of serious candidates, generally termed suspects.

There is nothing inherently improper or underhanded in doing such.

When it comes to the world of life and especially OOL, as has been pointed out ever since Thaxton et al in the early 1980’s [as in, a book published in 1984 cannot properly be deemed an evasive stratagem to get around court rulings dating to 1987]; there is no necessary inference from what we see in the cell to anything more than, say, a molecular nanotech lab some generations beyond Venter et al. That has long been acknowledged on the record. Much the same can be said for OOBP.

Let me clip and headline here, too, IVV’s just put up list of interesting questions for advocates of evolutionary materialism and those who travel with them:

>>What is Evolution?

In short, there are significant issues on the table, and there is no need to resort to grand conspiracy theorising if one wishes to fairly reflect on the merits of the case.

It is high time for toxically laced burning strawman arguments to be set aside.

Now, also, as seems to be increasingly recognised, there is a second domain of design thought in science, which is largely autonomous from debates over design in the world of life: evidence of cosmological design, with particular reference to fine tuning that enables C-chemistry, aqueous medium, terrestrial planet, cell based biological life.

A short, simplified video can help us get a quick overview:

[youtube UpIiIaC4kRA]

Let’s clip my UD ID Foundations Series, No. 6, to slightly elaborate:

>>While the rhetorical fireworks and worldview agendas-tinged culture clashes that so often crop up at UD and elsewhere have clustered on design inferences regarding the origin of life and the origin of body plans, modern design theory actually began with cosmological inferences to design on signs of highly specific, functionally complex organisation of the laws and circumstances of our observed cosmos that set it up at an operating point conducive to C-chemistry, cell based life.

Then agnostic British astrophysicist Sir Fred Hoyle (holder of a Nobel-equivalent prize) has pride of place:

From 1953 onward, Willy Fowler and I have always been intrigued by the remarkable relation of the 7.65 MeV energy level in the nucleus of 12C to the 7.12 MeV level in 16O. If you wanted to produce carbon and oxygen in roughly equal quantities by stellar nucleosynthesis, these are the two levels you would have to fix, and your fixing would have to be just where these levels are actually found to be. Another put-up job? . . . I am inclined to think so. A common sense interpretation of the facts suggests that a super intellect has “monkeyed” with the physics as well as the chemistry and biology, and there are no blind forces worth speaking about in nature. [F. Hoyle, Annual Review of Astronomy and Astrophysics, 20 (1982): 16. Emphasis added.]

Hoyle added:

I do not believe that any physicist who examined the evidence could fail to draw the inference that the laws of nuclear physics have been deliberately designed with regard to the consequences they produce within stars. [[“The Universe: Past and Present Reflections.” Engineering and Science, November, 1981. pp. 8–12]

Canadian astrophysicist (and Old Earth Creationist) Hugh Ross aptly explains:

As you tune your radio, there are certain frequencies where the circuit has just the right resonance and you lock onto a station. The internal structure of an atomic nucleus is something like that, with specific energy or resonance levels. If two nuclear fragments collide with a resulting energy that just matches a resonance level, they will tend to stick and form a stable nucleus. Behold! Cosmic alchemy will occur! In the carbon atom, the resonance just happens to match the combined energy of the beryllium atom and a colliding helium nucleus. Without it, there would be relatively few carbon atoms. Similarly, the internal details of the oxygen nucleus play a critical role. Oxygen can be formed by combining helium and carbon nuclei, but the corresponding resonance level in the oxygen nucleus is half a percent too low for the combination to stay together easily. Had the resonance level in the carbon been 4 percent lower, there would be essentially no carbon. Had that level in the oxygen been only half a percent higher, virtually all the carbon would have been converted to oxygen. Without that carbon abundance, neither you nor I would be here. [[Beyond the Cosmos (Colorado Springs, Colo.: NavPress Publishing Group, 1996), pg. 32. HT: IDEA.]

{ADDED, 13:02:24: Dr Guillermo Gonzalez surveys several fine tuning cases here, in a videotaped lecture. Let us add it . . . }

[youtube M39BKwtUAyA#!]

Why all the fuss about this?

It can be boiled down to one pivotal word that gives a slice of the cake with all the ingredients in it: water . . .

First, let us note that the three most common atoms in life are Carbon, Hydrogen and Oxygen. For instance, H and O make water, the three-atom universal solvent that is so adaptable to the needs of the living cell, and to making a terrestrial planet a good home for life.

As D. Halsmer, J. Asper, N. Roman, T. Todd observe of this wonder molecule:

The remarkable properties of water are numerous. Its very high specific heat maintains relatively stable temperatures both in oceans and organisms. As a liquid, its thermal conductivity is four times any other common liquid, which makes it possible for cells to efficiently distribute heat. On the other hand, ice has a low thermal conductivity, making it a good thermal shield in high latitudes. A latent heat of fusion only surpassed by that of ammonia tends to keep water in liquid form and creates a natural thermostat at 0°C. Likewise, the highest latent heat of vaporization of any substance – more than five times the energy required to heat the same amount of water from 0°C-100°C – allows water vapor to store large amounts of heat in the atmosphere. This very high latent heat of vaporization is also vital biologically because at body temperature or above, the only way for a person to dissipate heat is to sweat it off.

Water’s remarkable capabilities are definitely not only thermal. A high vapor tension allows air to hold more moisture, which enables precipitation. Water’s great surface tension is necessary for good capillary effect for tall plants, and it allows soil to hold more water. Water’s low viscosity makes it possible for blood to flow through small capillaries. A very well documented anomaly is that water expands into the solid state, which keeps ice on the surface of the oceans instead of accumulating on the ocean floor. Possibly the most important trait of water is its unrivaled solvency abilities, which allow it to transport great amounts of minerals to immobile organisms and also hold all of the contents of blood. It is also only mildly reactive, which keeps it from harmfully reacting as it dissolves substances. Recent research has revealed how water acts as an efficient lubricator in many biological systems from snails to human digestion. By itself, water is not very effective in this role, but it works well with certain additives, such as some glycoproteins. The sum of these traits makes water an ideal medium for life. Literally, every property of water is suited for supporting life. It is no wonder why liquid water is the first requirement in the search for extraterrestrial intelligence.

All these traits are contained in a simple molecule of only three atoms. One of the most difficult tasks for an engineer is to design for multiple criteria at once. … Satisfying all these criteria in one simple design is an engineering marvel. Also, the design process goes very deep since many characteristics would necessarily be changed if one were to alter fundamental physical properties such as the strong nuclear force or the size of the electron. [[“The Coherence of an Engineered World,” International Journal of Design & Nature and Ecodynamics, Vol. 4(1):47-65 (2009). HT: ENV.]
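As a quick cross-check on the latent heat comparison in the clip above (vaporization versus heating water from 0°C to 100°C), here is a minimal Python sketch. The two constants are rounded textbook values that I am supplying, not figures from the cited paper, and the function names are purely illustrative:

```python
# Rounded textbook values for water (assumptions, not taken from the cited paper).
SPECIFIC_HEAT_KJ_PER_KG_K = 4.186            # liquid water, treated as constant over 0-100 °C
LATENT_HEAT_VAPORIZATION_KJ_PER_KG = 2257.0  # at 100 °C and 1 atm, approximate


def heating_energy_kj(mass_kg: float, delta_t_k: float) -> float:
    """Energy needed to warm liquid water by delta_t_k kelvin."""
    return mass_kg * SPECIFIC_HEAT_KJ_PER_KG_K * delta_t_k


def vaporization_energy_kj(mass_kg: float) -> float:
    """Energy needed to turn boiling water into vapour at the same temperature."""
    return mass_kg * LATENT_HEAT_VAPORIZATION_KJ_PER_KG


def vaporization_to_heating_ratio(mass_kg: float = 1.0) -> float:
    """Ratio of vaporization energy to the energy for a 0 °C to 100 °C warm-up."""
    return vaporization_energy_kj(mass_kg) / heating_energy_kj(mass_kg, 100.0)


if __name__ == "__main__":
    print(f"vaporization / heating ratio: {vaporization_to_heating_ratio():.2f}")
```

On these rounded inputs the ratio comes out a little above five, which is consistent with the "more than five times" wording in the clip. The mass cancels out, so the result does not depend on the amount of water chosen.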

In short, the elegantly simple water molecule is set to a finely balanced, life-facilitating operating point, based on fundamental forces and parameters of the cosmos. These are forces that had to be built in from the formation of the cosmos itself. Such fine-tuning from the outset therefore strongly suggests a purpose to create life in the cosmos from its beginning.

Moreover, the authors also note how C, H and O just happen to be the fourth, first and third most abundant atoms in the cosmos, with helium, the first noble gas, being number two. This, again on fundamental parameters and laws of our cosmos, does not suggest a mere accident of happy coincidence:

The explanation has to do with fusion within stars. Early [[stellar, nuclear fusion] reactions start with hydrogen atoms and then produce deuterium (mass 2), tritium (mass 3), and alpha particles (mass 4), but no stable mass 5 exists. This limits the creation of heavy elements and was considered one of “God’s mistakes” until further investigation. In actuality, the lack of a stable mass 5 necessitates bigger jumps of four which lead to carbon (mass 12) and oxygen (mass 16). Otherwise, the reactions would have climbed right up the periodic table in mass steps of one (until iron, which is the cutoff above which fusion requires energy rather than creating it). The process would have left oxygen and carbon no more abundant than any other element.

So, we can see why Sir Fred so pithily summed up a talk he gave at CalTech in 1981 as follows:

From 1953 onward, Willy Fowler and I have always been intrigued by the remarkable relation of the 7.65 MeV energy level in the nucleus of 12C to the 7.12 MeV level in 16O. If you wanted to produce carbon and oxygen in roughly equal quantities by stellar nucleosynthesis, these are the two levels you would have to fix, and your fixing would have to be just where these levels are actually found to be. Another put-up job? . . . I am inclined to think so. A common sense interpretation of the facts suggests that a super intellect has “monkeyed” with the physics as well as the chemistry and biology, and there are no blind forces worth speaking about in nature. [F. Hoyle, Annual Review of Astronomy and Astrophysics, 20 (1982): 16. Cited, Bradley, in “Is There Scientific Evidence for the Existence of God? How the Recent Discoveries Support a Designed Universe”. Emphasis added.]

{Added, 13:02:05: Earlier in the talk given at Caltech in 1981 or thereabouts, he elaborated on Carbon and the chemistry of life, especially enzymes:

The big problem in biology, as I see it, is to understand the origin of the information carried by the explicit structures of biomolecules. The issue isn’t so much the rather crude fact that a protein consists of a chain of amino acids linked together in a certain way, but that the explicit ordering of the amino acids endows the chain with remarkable properties, which other orderings wouldn’t give. The case of the enzymes is well known . . . If amino acids were linked at random, there would be a vast number of arrangements that would be useless in serving the purposes of a living cell. When you consider that a typical enzyme has a chain of perhaps 200 links and that there are 20 possibilities for each link, it’s easy to see that the number of useless arrangements is enormous, more than the number of atoms in all the galaxies visible in the largest telescopes. This is for one enzyme, and there are upwards of 2000 of them, mainly serving very different purposes. So how did the situation get to where we find it to be? This is, as I see it, the biological problem – the information problem . . . .

I was constantly plagued by the thought that the number of ways in which even a single enzyme could be wrongly constructed was greater than the number of all the atoms in the universe. So try as I would, I couldn’t convince myself that even the whole universe would be sufficient to find life by random processes – by what are called the blind forces of nature . . . . By far the simplest way to arrive at the correct sequences of amino acids in the enzymes would be by thought, not by random processes . . . .

Now imagine yourself as a superintellect working through possibilities in polymer chemistry. Would you not be astonished that polymers based on the carbon atom turned out in your calculations to have the remarkable properties of the enzymes and other biomolecules? Would you not be bowled over in surprise to find that a living cell was a feasible construct? Would you not say to yourself, in whatever language supercalculating intellects use: Some supercalculating intellect must have designed the properties of the carbon atom, otherwise the chance of my finding such an atom through the blind forces of nature would be utterly minuscule. Of course you would, and if you were a sensible superintellect you would conclude that the carbon atom is a fix.

No wonder, in that same talk, Hoyle — a lifelong agnostic — also added:

I do not believe that any physicist who examined the evidence could fail to draw the inference that the laws of nuclear physics have been deliberately designed with regard to the consequences they produce within stars. [[“The Universe: Past and Present Reflections.” Engineering and Science, November, 1981. pp. 8–12]}
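The scale of Hoyle's enzyme arithmetic above (a chain of perhaps 200 links with 20 possibilities per link) can be checked with a short Python sketch. The 200 and 20 come from the quoted talk; the figure of about 10^80 atoms in the observed universe is the usual order-of-magnitude estimate, taken here as an assumption, and the helper names are hypothetical. Note that the count covers all possible sequences, so it is only an upper bound on the "useless" ones and by itself says nothing about what fraction would be functional:

```python
AMINO_ACID_TYPES = 20                  # Hoyle: "20 possibilities for each link"
CHAIN_LENGTH = 200                     # Hoyle: "a chain of perhaps 200 links"
ATOMS_IN_OBSERVED_UNIVERSE = 10 ** 80  # order-of-magnitude estimate (assumption)


def sequence_count(alphabet_size: int, length: int) -> int:
    """Number of distinct chains of the given length over the given alphabet."""
    return alphabet_size ** length


def order_of_magnitude(n: int) -> int:
    """Power of ten of a positive integer, e.g. 1234 -> 3."""
    return len(str(n)) - 1


total_sequences = sequence_count(AMINO_ACID_TYPES, CHAIN_LENGTH)
sequences_per_atom = total_sequences // ATOMS_IN_OBSERVED_UNIVERSE

if __name__ == "__main__":
    print(f"possible sequences: about 10^{order_of_magnitude(total_sequences)}")
    print(f"sequences per atom: about 10^{order_of_magnitude(sequences_per_atom)}")
```

Python integers are arbitrary precision, so 20^200 is computed exactly rather than approximated in floating point. On these inputs the sequence space is of order 10^260, some 180 orders of magnitude beyond the assumed atom count.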

The story does not end here.

As Robin Collins put the case in summary, in a classic essay on The Fine-tuning Design Argument (1998):

Suppose we went on a mission to Mars, and found a domed structure in which everything was set up just right for life to exist. The temperature, for example, was set around 70 °F and the humidity was at 50%; moreover, there was an oxygen recycling system, an energy gathering system, and a whole system for the production of food. Put simply, the domed structure appeared to be a fully functioning biosphere. What conclusion would we draw from finding this structure? Would we draw the conclusion that it just happened to form by chance? Certainly not. Instead, we would unanimously conclude that it was designed by some intelligent being. Why would we draw this conclusion? Because an intelligent designer appears to be the only plausible explanation for the existence of the structure. That is, the only alternative explanation we can think of–that the structure was formed by some natural process–seems extremely unlikely. Of course, it is possible that, for example, through some volcanic eruption various metals and other compounds could have formed, and then separated out in just the right way to produce the “biosphere,” but such a scenario strikes us as extraordinarily unlikely, thus making this alternative explanation unbelievable. The universe is analogous to such a “biosphere,” according to recent findings in physics . . . . Scientists call this extraordinary balancing of the parameters of physics and the initial conditions of the universe the “fine-tuning of the cosmos” . . . For example, theoretical physicist and popular science writer Paul Davies–whose early writings were not particularly sympathetic to theism–claims that with regard to basic structure of the universe, “the impression of design is overwhelming” (Davies, 1988, p. 203) . . .

[[Cf. also here and his video summary here. Short summary here. Elsewhere, Collins notes how noted cosmologist Roger Penrose has estimated that “[[i]n order to produce a universe resembling the one in which we live, the Creator would have to aim for an absurdly tiny volume of the phase space of possible universes — about 1/(10^(10^123)) of the entire volume . . .” That is, 1 divided by 10 followed by one less than 10^123 zeros. By a long shot, there are not enough atoms in the observed universe [~10^80] to fully write out the fraction.]

Collins continues:

A few examples of this fine-tuning are listed below:

1. If the initial explosion of the big bang had differed in strength by as little as 1 part in 10^60, the universe would have either quickly collapsed back on itself, or expanded too rapidly for stars to form. In either case, life would be impossible. [See Davies, 1982, pp. 90-91. (As John Jefferson Davis points out (p. 140), an accuracy of one part in 10^60 can be compared to firing a bullet at a one-inch target on the other side of the observable universe, twenty billion light years away, and hitting the target.)]

2. Calculations indicate that if the strong nuclear force, the force that binds protons and neutrons together in an atom, had been stronger or weaker by as little as 5%, life would be impossible. (Leslie, 1989, pp. 4, 35; Barrow and Tipler, p. 322.)

3. Calculations by Brandon Carter show that if gravity had been stronger or weaker by 1 part in 10 to the 40th power, then life-sustaining stars like the sun could not exist. This would most likely make life impossible. (Davies, 1984, p. 242.)

4. If the neutron were not about 1.001 times the mass of the proton, all protons would have decayed into neutrons or all neutrons would have decayed into protons, and thus life would not be possible. (Leslie, 1989, pp. 39-40.)

5. If the electromagnetic force were slightly stronger or weaker, life would be impossible, for a variety of different reasons. (Leslie, 1988, p. 299.)>>
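The remark in the clip above, that there are not enough atoms in the observed universe to write out Penrose's fraction in full, can be illustrated with a small Python sketch. It assumes the two figures quoted there (an exponent of 10^123 and roughly 10^80 atoms) and, purely for illustration, imagines one decimal digit being recorded per atom; the names are hypothetical:

```python
PENROSE_ZEROS = 10 ** 123              # number of zeros in 10^(10^123), per the quoted estimate
ATOMS_IN_OBSERVED_UNIVERSE = 10 ** 80  # order-of-magnitude figure quoted in the clip

# Writing 10^(10^123) in decimal takes a leading 1 followed by 10^123 zeros.
digits_needed = PENROSE_ZEROS + 1

# How many digits each atom would have to carry if every atom were used.
digits_per_atom = digits_needed // ATOMS_IN_OBSERVED_UNIVERSE


def order_of_magnitude(n: int) -> int:
    """Power of ten of a positive integer, e.g. 1234 -> 3."""
    return len(str(n)) - 1


if __name__ == "__main__":
    print(f"digits needed:   about 10^{order_of_magnitude(digits_needed)}")
    print(f"digits per atom: about 10^{order_of_magnitude(digits_per_atom)}")
```

The sketch only manipulates the digit count (10^123 + 1), never the number 10^(10^123) itself, which is far too large to hold in memory. Under these assumptions each atom would have to carry about 10^43 digits.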

Nor does the commonly seen objection, “but all of this is solved by the multiverse” (or the like), answer adequately:

>>John Leslie has somewhat to say about that metaphysical speculation (a multiverse is not at all anything we have observational evidence for, and on many multiverse models we never will; we are here dealing with philosophy in a lab coat, not science, which raises the relevance of comparative difficulties analysis):

. . . the need for such explanations [[for fine-tuning] does not depend on any estimate of how many universes would be observer-permitting, out of the entire field of possible universes. Claiming that our universe is ‘fine tuned for observers’, we base our claim on how life’s evolution would apparently have been rendered utterly impossible by comparatively minor [[emphasis original] alterations in physical force strengths, elementary particle masses and so forth. There is no need for us to ask whether very great alterations in these affairs would have rendered it fully possible once more, let alone whether physical worlds conforming to very different laws could have been observer-permitting without being in any way fine tuned. Here it can be useful to think of a fly on a wall, surrounded by an empty region. A bullet hits the fly. Two explanations suggest themselves. Perhaps many bullets are hitting the wall or perhaps a marksman fired the bullet. There is no need to ask whether distant areas of the wall, or other quite different walls, are covered with flies so that more or less any bullet striking there would have hit one. The important point is that the local area contains just the one fly.

[[Our Place in the Cosmos, 1998. The force of this point is deepened once we think about what has to be done to get a rifle into “tack-driving” condition. That is, a “tack-driving” rifle is a classic example of a finely tuned, complex system, i.e. we are back at the force of Collins’ point on a multiverse model needing a well adjusted Cosmos bakery. (Slide show, ppt. “Simple” summary, doc.)]

Leslie also adds a further point:

One striking thing about the fine tuning is that a force strength or a particle mass often appears to require accurate tuning for several reasons at once. Look at electromagnetism. Electromagnetism seems to require tuning for there to be any clear-cut distinction between matter and radiation; for stars to burn neither too fast nor too slowly for life’s requirements; for protons to be stable; for complex chemistry to be possible; for chemical changes not to be extremely sluggish; and for carbon synthesis inside stars (carbon being quite probably crucial to life). Universes all obeying the same fundamental laws could still differ in the strengths of their physical forces, as was explained earlier, and random variations in electromagnetism from universe to universe might then ensure that it took on any particular strength sooner or later. Yet how could they possibly account for the fact that the same one strength satisfied many potentially conflicting requirements, each of them a requirement for impressively accurate tuning? [Our Place in the Cosmos, 1998 (courtesy Wayback Machine) Emphases added.]>>

But isn’t all of this god of the gaps speculation, to be swept away by the steady advance of Science?

It will always be hard to get people to surrender favourite talking points, but no.

For, patently, inference to best explanation on what we do know — what design as a causal process is, and what it often leaves behind as a tested, reliable marker not easily accounted for on blind needle in haystack search [and no, it is not an answer in searching a large space, to suggest that random walks make a few small steps at a time] — is not appeal to ignorance but to our scientific, empirical knowledge base. One that keeps on multiplying the FSCO/I challenge to be addressed, not reducing it.

Similarly, it seems obvious that a reasonable person would agree that evidence of design as causal process should count as supporting the existence of a designer to account for that process, even where we may not have to hand further evidence that specifies any particular candidate.

Finally, on arguments to God, there are many that are readily accessible, and many find them cumulatively convincing. (I suggest Part 3 in this series, for starters.)

So, in sum, there is a serious case to be faced in debates over design and signs of design in the natural world. Sufficiently so, that grand conspiracy theorising and linked rhetorical and agit-prop tactics should be set aside.

The better to actually address the matter on the merits. END

Some basic questions that need to be asked.